Prologika Newsletter Winter 2016

Designing an Operational Data Store (ODS)

I hope you’re enjoying the holiday season. I wish you all the best in 2017! The subject of this newsletter came from a Planning and Strategy assessment for a large organization. Before I get to it and speaking of planning, don’t forget to use your Microsoft planning days as they will expire at the end of your fiscal year. This is free money that Microsoft gives you to engage Microsoft Gold partners, such as Prologika, to help you plan your SQL Server and BI initiatives. Learn how the process works here.

Just like a data warehouse, an Operational Data Store (ODS) can mean different things to different people. Do you remember the time when ODS and DW were conflicting methodologies and each one claimed to be superior to the other? Since then the scholars have buried the hatchet and reached a consensus that you need both. I agree.

To me, ODS is nothing more than a staging database on steroids that sits between the source systems and DW in the BI architectural stack.

What’s Operational Data Store?

According to Wikipedia “an operational data store (or “ODS”) is a database designed to integrate data from multiple sources for additional operations on the data…The general purpose of an ODS is to integrate data from disparate source systems in a single structure, using data integration technologies like data virtualization, data federation, or extract, transform, and load. This will allow operational access to the data for operational reporting, master data or reference data management. An ODS is not a replacement or substitute for a data warehouse but in turn could become a source.”

OK, this is a good starting point. See also the “Operational Data Store (ODS) Defined” blog by James Serra. But how do you design an ODS? In general, I’ve seen two implementation patterns, but the design approach you take really depends on how you plan to use the data in the ODS and which downstream systems need that data.

One to One Pull

ODS is typically implemented as a 1:1 data pull from the source systems, where ETL stages all source tables required for operational reporting and downstream systems, such as loading the data warehouse. ETL typically runs daily but it could run more often to meet low-latency reporting needs. The ETL process is typically just Extract and Load (it doesn’t do any transformations), except for keeping a history of changes (more on this in a moment). This results in a highly normalized schema that’s the same as the original source schema. Then, when data is loaded in DW, it’s denormalized to conform to the star schema. Let’s summarize the pros and cons of the One to One Pull design pattern.

| | Pros | Cons |
| --- | --- | --- |
| Table schema | Highly normalized and identical to the source system | The number of tables increases |
| Operational reporting | Users can query the source data as it’s stored in the original source. This offloads reporting from the source systems | No consolidated reporting if multiple source systems process the same information, e.g. multiple systems to process claims |
| Changes to source schema | Source schema is preserved | Additional ETL is required to transform to star schema |
| ETL | Extraction and load from source systems (no transformations) | As source systems change, ETL needs to change |
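To make the One to One Pull pattern more concrete, here is a minimal sketch in Python that uses the built-in sqlite3 module as a stand-in for both the source system and the ODS. The `pull_one_to_one` function, the Customer and Policy tables, and the sample rows are all hypothetical. The sketch does a full reload on every run and leaves out history tracking, so treat it as an illustration of "extract and load, no transformations" rather than a production ETL process.

```python
import sqlite3


def pull_one_to_one(source, ods, tables):
    """Copy each source table into the ODS unchanged (extract and load only)."""
    for table in tables:
        # Reuse the source DDL so the ODS table keeps the identical schema.
        ddl = source.execute(
            "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?",
            (table,),
        ).fetchone()[0]
        ods.execute(f'DROP TABLE IF EXISTS "{table}"')
        ods.execute(ddl)

        # Load the rows as they are, with no transformations.
        rows = source.execute(f'SELECT * FROM "{table}"').fetchall()
        if rows:
            placeholders = ", ".join("?" for _ in rows[0])
            ods.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', rows)
    ods.commit()


# Hypothetical source system with two normalized tables.
source = sqlite3.connect(":memory:")
source.executescript(
    """
    CREATE TABLE Customer (CustomerID INTEGER PRIMARY KEY, Name TEXT);
    CREATE TABLE Policy (PolicyID INTEGER PRIMARY KEY, CustomerID INTEGER, PremiumRate REAL);
    INSERT INTO Customer VALUES (1, 'Contoso');
    INSERT INTO Policy VALUES (496961, 1, 0.45);
    """
)

ods = sqlite3.connect(":memory:")
pull_one_to_one(source, ods, ["Customer", "Policy"])
```

Because the ODS tables mirror the source, any change to the source schema flows straight into the ODS, which is the ETL trade-off listed in the table.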

Common Format

This design is preferred when the same business data is sourced from multiple source systems, or when the source systems might change or be replaced over time. For example, an insurance company might have three systems to process claims. Instead of ending up with three sets of tables (one for each source system), the ODS schema is standardized and the feeds from the source systems are loaded into a shared table. For example, a common Claim table stores claim “feeds” from the three systems. As long as the source endpoint (table, view, or stored procedure) returns the data according to an agreed “contract” for the feed, ODS is abstracted from source system changes. This design is much less normalized. In fact, for the most part it should mimic the DW schema so that DW tables can piggyback on the ODS tables with little or no ETL.

| | Pros | Cons |
| --- | --- | --- |
| Table schema | Denormalized and suitable for reporting | The original schema is lost |
| Operational reporting | Relevant information is consolidated and stored in one table | Schema is denormalized and reports might not reflect how the data is stored in the source systems |
| Schema changes to source systems | As long as the source endpoints adhere to the contract, ODS is abstracted from schema changes | A design contract needs to be prepared and source systems need to provide the data in the agreed format |
| ETL | Less, or even no ETL to transform data from ODS to DW | ETL needs to follow the contract specification so upfront design effort is required |
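The feed "contract" idea can be sketched in a few lines of Python. This is only an illustration under assumptions: the column names in `CLAIM_CONTRACT`, the `load_claim_feed` function, the layout of the shared Claim table, and the sample claims are all hypothetical, and sqlite3 stands in for the ODS database. Each source endpoint is assumed to hand over rows as dictionaries.

```python
import sqlite3

# Hypothetical feed contract: every source endpoint must return these columns.
CLAIM_CONTRACT = ("ClaimNumber", "ClaimDate", "ClaimAmount")


def load_claim_feed(ods, source_id, feed_rows):
    """Validate a feed against the contract, then append it to the shared Claim table."""
    for row in feed_rows:
        missing = [col for col in CLAIM_CONTRACT if col not in row]
        if missing:
            raise ValueError(f"Source {source_id} violates the contract, missing: {missing}")
    # SourceID records which system the claim came from.
    ods.executemany(
        "INSERT INTO Claim (SourceID, ClaimNumber, ClaimDate, ClaimAmount) VALUES (?, ?, ?, ?)",
        [(source_id, *(row[col] for col in CLAIM_CONTRACT)) for row in feed_rows],
    )
    ods.commit()


ods = sqlite3.connect(":memory:")
ods.execute(
    "CREATE TABLE Claim (SourceID INTEGER, ClaimNumber TEXT, ClaimDate TEXT, ClaimAmount REAL)"
)

# Two hypothetical claim systems delivering data in the agreed format.
load_claim_feed(ods, 1, [{"ClaimNumber": "A-100", "ClaimDate": "2016-12-01", "ClaimAmount": 250.0}])
load_claim_feed(ods, 2, [{"ClaimNumber": "B-7", "ClaimDate": "2016-12-02", "ClaimAmount": 90.5}])
```

If a source system is replaced, only its endpoint has to keep honoring the contract; the Claim table and everything downstream of it stay the same.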

Further Recommendations

Regardless of which design pattern you choose, here are some additional recommendations to get the most out of your ODS:

- Store data at its most atomic level – No aggregations and summaries. Your DW would need the data at its lowest level anyway.

- Keep all the historical data, or as much as your retention policy requires – This is great for auditing and allows you to reload the DW from ODS since it’s unlikely that source systems will keep historical data.

- Apply minimal or no ETL transformations in ODS – You want the staged data to stay at parity with the source data so that you can apply data quality and auditing checks.

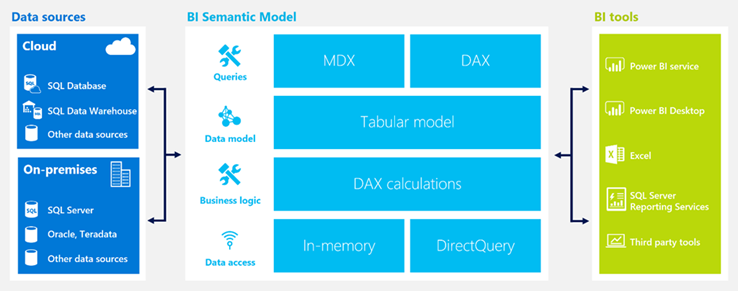

- Avoid business calculations in ODS – Business calculations, such as YTD, QTD, variances, etc., have no place in ODS. They should be defined in the semantic layer, e.g. an Analysis Services model. If you attempt to do so in ODS, it will surely impact performance, forcing you to pre-aggregate data. The only permissible type of reporting in ODS is operational reporting, such as to produce the same reports as the original systems (without giving users access to the source) or to validate that the DW results match the source systems.

- Track changes to most columns – I saved the best for last. Treat most columns as Type 2 so that you know when a given row was changed in the source. This is great for auditing.

Here is a hypothetical Policy table that keeps Type 2 changes. In this example, the policy rate changed on 5/8/2010. If you follow this design, you don’t have to maintain Type 2 in your DW (if you follow the Common Format pattern) and you don’t have to pick which columns are Type 2 (all of them are). It might be extreme but it’s good for auditing. Tip: use SQL Server 2016 temporal tables to simplify Type 2 date tracking.

| RowStartDate | RowEndDate | SourceID | RowIsCurrent | RowIsDeleted | ETLExecutionID | PolicyKey | PolicyID | PremiumRate | … |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 5/2/2010 | 5/8/2010 | 1 | 0 | 0 | 0BB76521-AA63-… | 1 | 496961 | 0.45 | |
| 5/9/2010 | 12/31/9999 | 1 | 1 | 0 | CD348258-42ED-.. | 2 | 496961 | 0.50 | |
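For readers who want to see the mechanics behind the two rows above, here is a rough Python sketch that reproduces them, again with sqlite3 standing in for the ODS. The `apply_type2_change` function and the "run-1"/"run-2" execution IDs are hypothetical. The sketch also makes two assumptions that the table only implies: the expired row ends on the change date and the new row starts the following day, and only PremiumRate is compared to detect a change (a real load would compare every tracked column).

```python
import sqlite3
from datetime import date, timedelta

OPEN_END_DATE = "9999-12-31"  # open-ended marker for the current row


def apply_type2_change(ods, policy_id, premium_rate, change_date, etl_execution_id):
    """Expire the current row and add a new version if PremiumRate changed."""
    current = ods.execute(
        "SELECT PolicyKey, PremiumRate FROM Policy WHERE PolicyID = ? AND RowIsCurrent = 1",
        (policy_id,),
    ).fetchone()
    if current is not None and current[1] == premium_rate:
        return  # nothing changed, keep the current version
    start = change_date
    if current is not None:
        # Assumption: close the old version on the change date,
        # and start the new version on the following day.
        ods.execute(
            "UPDATE Policy SET RowEndDate = ?, RowIsCurrent = 0 WHERE PolicyKey = ?",
            (change_date.isoformat(), current[0]),
        )
        start = change_date + timedelta(days=1)
    ods.execute(
        "INSERT INTO Policy (RowStartDate, RowEndDate, SourceID, RowIsCurrent, RowIsDeleted,"
        " ETLExecutionID, PolicyID, PremiumRate) VALUES (?, ?, 1, 1, 0, ?, ?, ?)",
        (start.isoformat(), OPEN_END_DATE, etl_execution_id, policy_id, premium_rate),
    )
    ods.commit()


ods = sqlite3.connect(":memory:")
ods.execute(
    "CREATE TABLE Policy (RowStartDate TEXT, RowEndDate TEXT, SourceID INTEGER,"
    " RowIsCurrent INTEGER, RowIsDeleted INTEGER, ETLExecutionID TEXT,"
    " PolicyKey INTEGER PRIMARY KEY, PolicyID INTEGER, PremiumRate REAL)"
)

# Initial load, followed by the rate change from the example.
apply_type2_change(ods, 496961, 0.45, date(2010, 5, 2), "run-1")
apply_type2_change(ods, 496961, 0.50, date(2010, 5, 8), "run-2")
```

Temporal tables in SQL Server 2016 maintain the row validity dates automatically, so hand-written logic like this is mostly useful on platforms that lack such a feature.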

MS BI Events in Atlanta

- Atlanta MS BI Group: “Master Data Management with SQL Server 2016 MDS” presentation by Neal Waterstreet on 1/31

- Atlanta MS BI Group: Presentation and sponsorship by SolidQ on 2/27.

Regards,

Teo Lachev

Prologika, LLC | Making Sense of Data

Microsoft Partner | Gold Data Analytics