3 Techniques to Save BI Implementation Effort

Everyone wants to press a button and have the entire BI system generated and ready to go. But things are not that simple. You know it and I know it. Nevertheless, BI automation tools are emerging with growing promises that propelled them to the Top 10 BI Trends according to Information Management magazine. As a side note, it was interesting that the same article put Big Data in the No. 1 spot even though Gartner has de-emphasized the Big Data hype (based on my experience and on polling attendees at our BI group meetings, many don’t even know what Big Data is). While I don’t dismiss the value that BI auto-generators can bring, such as impact analysis and native support for popular source systems like ERP, there are also well-known cautions, including vendor lock-in, a new toolset to learn, suboptimal performance, and support for only the lowest common denominator of features across the targeted databases. However, you don’t have to purchase a tool to save redundant implementation effort. Instead, consider the following techniques:

1. Use the ELT (extract, load, and transform) pattern instead of ETL (extract, transform, and load). While different solutions require different patterns, the ELT pattern is especially useful for data warehouse implementations because of the following advantages:

- Performance – Set-based processing for complicated lookups should be more efficient than row-by-row processing. In SQL Server, the cornerstone of the ELT pattern is the T-SQL MERGE statement, which resolves inserts, updates, and deletes in a single set-based statement.

- Features – Why ignore over two decades of SQL Server evolution? Many things are much easier in T-SQL and some don’t have SSIS counterparts.

- Maintenance – It’s much easier to fix a bug in a stored procedure and give the DBA the script than to ask for a package to be redeployed (or, even worse, an entire project, now that we’ve embraced the project deployment model in SSIS 2012).

- Upgrading – As we’ve seen, new SQL Server releases bring new package definitions that in many cases require significant testing effort. Not so with stored procedures.

- Scalability – If you need to load fact tables, you’d probably need to do partition switching. This is yet another reason to stage data first before you apply transformations.

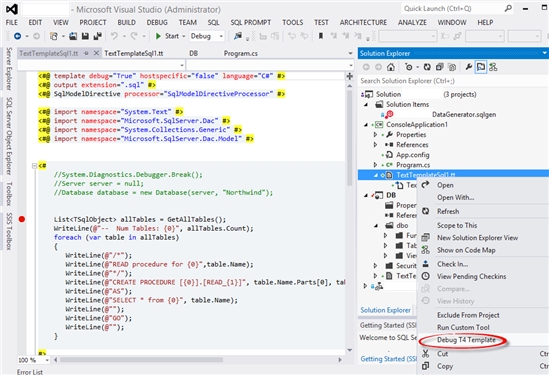
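To make the stage-first idea concrete, here is a minimal sketch of the ELT flow. It uses Python's built-in `sqlite3` module purely as a stand-in for SQL Server, so it can run anywhere. SQLite has no MERGE statement, so the transform step is approximated with one set-based UPDATE plus one set-based INSERT. The table names (`stg_customer`, `dim_customer`) and the function `run_elt` are hypothetical, made up for illustration.

```python
import sqlite3


def run_elt(rows):
    """Load raw rows into staging, then transform with set-based SQL."""
    con = sqlite3.connect(":memory:")
    con.executescript("""
        CREATE TABLE stg_customer (customer_code TEXT, name TEXT, city TEXT);
        CREATE TABLE dim_customer (
            customer_key  INTEGER PRIMARY KEY AUTOINCREMENT,
            customer_code TEXT UNIQUE,
            name          TEXT,
            city          TEXT);
        -- One pre-existing dimension row, so the update path is exercised.
        INSERT INTO dim_customer (customer_code, name, city)
        VALUES ('C1', 'Contoso', 'Atlanta');
    """)

    # Extract + Load: bulk-insert the source rows untransformed.
    con.executemany("INSERT INTO stg_customer VALUES (?, ?, ?)", rows)

    # Transform, step 1: update every matching dimension row in one statement.
    con.execute("""
        UPDATE dim_customer
        SET name = (SELECT s.name FROM stg_customer s
                    WHERE s.customer_code = dim_customer.customer_code),
            city = (SELECT s.city FROM stg_customer s
                    WHERE s.customer_code = dim_customer.customer_code)
        WHERE EXISTS (SELECT 1 FROM stg_customer s
                      WHERE s.customer_code = dim_customer.customer_code)
    """)

    # Transform, step 2: insert every staged row that has no match yet.
    con.execute("""
        INSERT INTO dim_customer (customer_code, name, city)
        SELECT s.customer_code, s.name, s.city
        FROM stg_customer s
        WHERE NOT EXISTS (SELECT 1 FROM dim_customer d
                          WHERE d.customer_code = s.customer_code)
    """)
    con.commit()

    result = con.execute(
        "SELECT customer_code, name, city FROM dim_customer "
        "ORDER BY customer_code").fetchall()
    con.close()
    return result


if __name__ == "__main__":
    print(run_elt([("C1", "Contoso", "Seattle"), ("C2", "Fabrikam", "Boston")]))
```

In SQL Server, the two transform statements would typically collapse into a single MERGE inside a stored procedure, and the staging table is also where partition switching for fact tables would start.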

2. Automate the generation of stored procedures. The ELT pattern relies heavily on stored procedures. If you have to populate a lot of tables, consider investing some upfront development effort to auto-generate stored procedures, such as by using Visual Studio Text Templates (also known as T4 templates). Note that to be able to debug them in Visual Studio 2012, you need to add the template to a .NET project, such as a console application.

3. Automate the generation of SSIS packages. If you embrace the ELT pattern, your SSIS packages will be simple. That’s because most of the ELT code will be externalized to stored procedures and you would only use SSIS to orchestrate the package execution. Yet, if you have to create a lot of SSIS packages, such as to load an ODS database, consider automating the process by using Business Intelligence Markup Language (BIML) Script. BIML is gaining momentum. The popular BIDS Helper tool supports BIML. And, if you want to take BIML to the next level, my friends from Varigence have a product offering that should help.
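The last two techniques both come down to rendering text from table metadata. The sketch below shows that idea in Python rather than in T4 or BIML Script, so treat it as an illustration of the approach and not as a replacement for either tool. The naming convention (`usp_Load_<table>`, a `stg` schema that mirrors `dbo`), the connection string, and the functions `render_proc` and `render_biml` are all assumptions made up for this example. The BIML element names follow the schema as I understand it, so verify them against the BIML documentation before relying on them.

```python
from string import Template
import xml.etree.ElementTree as ET

# Hypothetical convention: stg.<table> mirrors dbo.<table>, and identifiers
# are assumed to be plain names that need no bracket quoting.
PROC_TEMPLATE = Template("""\
CREATE PROCEDURE dbo.usp_Load_$table
AS
BEGIN
    SET NOCOUNT ON;
    MERGE dbo.$table AS tgt
    USING stg.$table AS src
        ON $join
    WHEN MATCHED THEN
        UPDATE SET $updates
    WHEN NOT MATCHED BY TARGET THEN
        INSERT ($columns)
        VALUES ($values);
END
""")


def render_proc(table, keys, columns):
    """Render the T-SQL text of a MERGE-based load procedure for one table."""
    non_keys = [c for c in columns if c not in keys]
    return PROC_TEMPLATE.substitute(
        table=table,
        join=" AND ".join(f"tgt.{k} = src.{k}" for k in keys),
        updates=", ".join(f"tgt.{c} = src.{c}" for c in non_keys),
        columns=", ".join(columns),
        values=", ".join(f"src.{c}" for c in columns),
    )


def render_biml(tables, connection="DW"):
    """Render BIML with one package per table that only calls its procedure."""
    root = ET.Element("Biml", xmlns="http://schemas.varigence.com/biml.xsd")
    connections = ET.SubElement(root, "Connections")
    # Placeholder connection string; replace with your own server and database.
    ET.SubElement(
        connections, "OleDbConnection", Name=connection,
        ConnectionString="Provider=SQLNCLI11;Server=localhost;"
                         "Initial Catalog=DW;Integrated Security=SSPI;")
    packages = ET.SubElement(root, "Packages")
    for table in tables:
        package = ET.SubElement(
            packages, "Package", Name=f"Load_{table}", ConstraintMode="Linear")
        tasks = ET.SubElement(package, "Tasks")
        task = ET.SubElement(
            tasks, "ExecuteSQL", Name=f"Run usp_Load_{table}",
            ConnectionName=connection)
        ET.SubElement(task, "DirectInput").text = f"EXEC dbo.usp_Load_{table};"
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(render_proc("DimCustomer", ["CustomerCode"],
                      ["CustomerCode", "Name", "City"]))
    print(render_biml(["DimCustomer", "DimProduct"]))
```

In practice, the table and column lists would come from the database catalog rather than being hard-coded. A T4 template or a BIML Script file plays the same role as the two render functions here. Either way, the packages stay thin and the transformation logic stays in the generated stored procedures.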