Virtual Adventures

Scenario: Mount base and differencing VHD disks using Windows Virtual PC on Windows 7 x64.

Dude, did you run out of BI stuff to talk about? I didn’t, but I think other folks may benefit from my experience, especially MCTs who have discovered that Windows 7 hasn’t happened yet to the Microsoft Official Curriculum and have resorted to all sorts of hacks to get Virtual Server 2005 or Lab Launcher working on Windows 7. In the process, I’ve learned a lot about virtualization technology that goes beyond just mounting disks. OK, I also have a hidden agenda for writing this post: I want to document these steps.

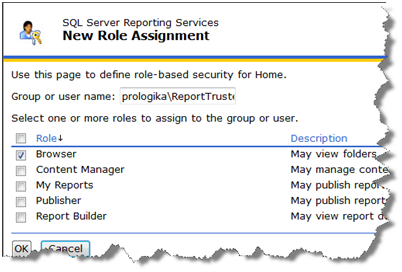

So, I am preparing to teach 6236A – Implementing and Maintaining Microsoft SQL Server 2008 Reporting Services in an instructor-led environment where I have to install the required software on the students’ machines. After downloading the trainer resources, I discovered that Microsoft provides a 6236A-NY-SQL-01.vhd disk, which requires a base disk, Base08A.vhd. Reading the setup guide included in the course revealed that Microsoft wants me to install Virtual Server 2005 on both the instructor and student machines.

Gotcha 1: Virtual Server 2005 doesn’t run on Windows 7. Browsing the MCT private groups, I came across posts discussing a hack around this limitation, but I didn’t want to go that way. Not to mention that Microsoft Lab Launcher, which apparently is intended to simplify VHD deployment to student machines, doesn’t run on Win 7 either. A double gotcha, which piqued my interest and stirred my hacking instincts to get the whole thing working with Virtual PC. After all, I can’t control what OS the students run or the training center has installed.

OK, but this is just a VHD disk, isn’t it? So, it should run on Windows Virtual PC, right? I created a new virtual machine and attempted to mount 6236A-NY-SQL-01.vhd, but I was greeted with the following informative error:

Cannot attach the virtual hard disk to the virtual machine. Check the values provided and try again.

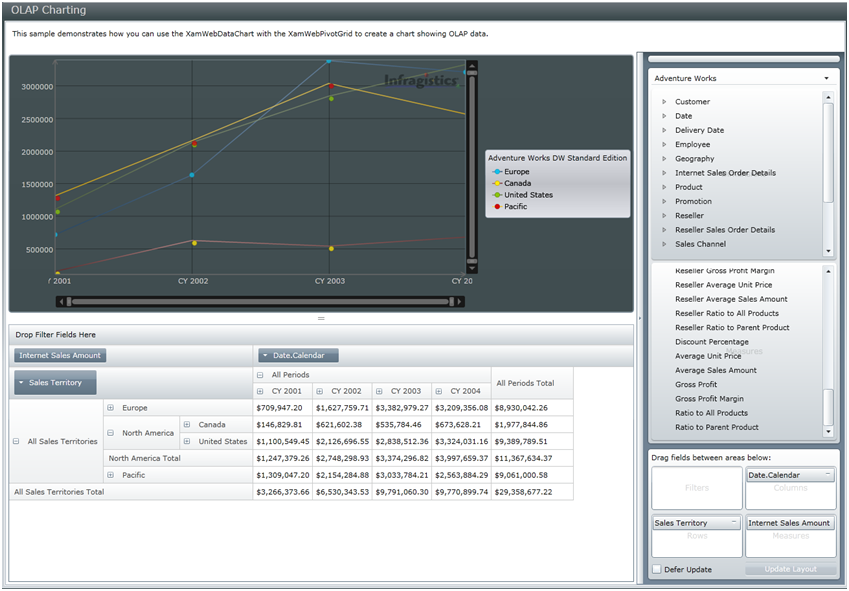

Then I mounted Base08A.vhd and I was able to boot the VM, only to find out that it includes the Windows Server 2008 OS and nothing else. Where is the cool SQL Server BI stuff? This is the part where I realized that my virtual knowledge needed brushing up. Enter differencing disks. This, of course, is not explained in the setup document, so don’t try to find it there. I figured that Base08A.vhd is the base disk that has the OS only, while 6236A-NY-SQL-01.vhd is the differencing disk that holds the rest (SQL Server 2008, the Reporting Services samples, and the Adventure Works database). To test my hypothesis, I used Sun’s VirtualBox. I use VirtualBox for my personal VM work because it supports x64 guest OSes, which Virtual PC doesn’t (shame). I used the Disk Manager to add both the base and differencing images and … lo and behold, the VM booted up and I was able to verify that SQL Server 2008 and the rest of the goodies are installed.

But I didn’t want to force the students to install VirtualBox so I continued hacking my way to Windows Virtual PC. In a Eureka moment, I examined the Windows Event log and saw the following message:

The parent virtual hard disk ‘C:\Program Files\Microsoft Learning\Base\Base08A.vhd’ for the differencing virtual hard disk ‘C:\VPC\3263\6236A-NY-SQL-01.vhd’ does not exist. Please reconnect the differencing virtual hard disk to the correct parent virtual hard disk.

So, the base disk path is hardcoded in the differencing image. The following commands confirmed this.

- I went to a command prompt and entered the following (lines starting with C:\> or DISKPART> are what I typed; the rest is DiskPart output):

C:\>Diskpart

DISKPART> select vdisk file="c:\vpc\6236\6236A-NY-SQL-01.vhd"

DiskPart successfully selected the virtual disk file.

DISKPART> detail vdisk

Device type ID: 2 (VHD)

Vendor ID: {EC984AEC-A0F9-47E9-901F-71415A66345B} (Microsoft Corporation)

State: Added

Virtual size: 64 GB

Physical size: 9 GB

Filename: c:\vpc\6236\6236A-NY-SQL-01.vhd

Is Child: Yes

Parent Filename: C:\Program Files\Microsoft Learning\Base\Base08A.vhd

Associated disk#: Not found.
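The parent link that DiskPart reports lives inside the VHD file itself. As a rough sketch, assuming the layout in Microsoft's published VHD image format specification (a footer copy in the first 512 bytes, a dynamic disk header at the footer's data offset, and the parent name stored as UTF-16 big-endian at byte 64 of that header), the same details can be read with a few lines of Python. The function name `read_vhd_info` is my own, and the sketch ignores the parent locator entries, so it may recover only the parent's file name rather than the full path that DiskPart prints.

```python
import struct

# Disk type codes as I understand them from the VHD format specification.
DISK_TYPES = {2: "fixed", 3: "dynamic", 4: "differencing"}


def read_vhd_info(f):
    """Read disk type, virtual size and parent name from a seekable VHD stream.

    Only dynamic and differencing disks keep a footer copy at offset 0;
    a fixed disk has its footer at the end of the file and is rejected here.
    """
    f.seek(0)
    footer = f.read(512)
    if len(footer) < 512 or footer[:8] != b"conectix":
        raise ValueError("no VHD footer copy at offset 0")

    # All multi-byte fields are big-endian.
    data_offset = struct.unpack(">Q", footer[16:24])[0]   # where the dynamic header starts
    current_size = struct.unpack(">Q", footer[48:56])[0]  # virtual size in bytes
    disk_type = struct.unpack(">I", footer[60:64])[0]

    info = {
        "type": DISK_TYPES.get(disk_type, "unknown"),
        "virtual_size_gb": current_size / 2**30,
        "parent_name": None,
    }

    if disk_type == 4:  # differencing disk: look up the parent in the dynamic header
        f.seek(data_offset)
        header = f.read(1024)
        if header[:8] != b"cxsparse":
            raise ValueError("dynamic disk header not found")
        raw_name = header[64:64 + 512].decode("utf-16-be", errors="replace")
        info["parent_name"] = raw_name.split("\x00", 1)[0]  # drop the zero padding

    return info
```

To try it on a real image, open the file in binary mode, for example `with open(r"c:\vpc\6236\6236A-NY-SQL-01.vhd", "rb") as f: print(read_vhd_info(f))`. Nothing is written to the disk image.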

- I moved the base image (Base08A.vhd) to C:\Program Files\Microsoft Learning\Base. With bated breath, I repeated the process of creating a new virtual machine in Windows Virtual PC pointing to the existing 6236A-NY-SQL-01.vhd, but I got the same error as the first time:

Cannot attach the virtual hard disk to the virtual machine. Check the values provided and try again.

No more details in the Windows event log this time. At this point, I posted a question to the Windows 7 Virtualization discussion forum and was clued in that the disk’s virtual size (not its physical size) could be the issue, since Virtual PC doesn’t support disks larger than 127 GB. Although after attaching 6236A-NY-SQL-01.vhd using the Windows 7 Disk Manager I could see that its virtual size is 64 GB, I compacted the base disk anyway. That didn’t help and the hour was getting late. So I threw in the towel on the differencing disk approach (for now) and decided to merge the differencing disk into the base disk.

With 6236A-NY-SQL-01.vhd still selected in DiskPart (the select vdisk command from the first step), I executed the following commands:

DISKPART>detach vdisk

DiskPart successfully detached the virtual disk file.

DISKPART>merge vdisk depth=1

100 percent complete

(after a long wait …)

DiskPart successfully merged the differencing chain.

Now that I had the disks merged I was able to create a new virtual machine successfully using the base disk image (Base08A.vhd) which combined both the original and differencing disks. Since the differencing disk was merged, I could delete 6236A-NY-SQL-01.vhd.
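If the merge has to be repeated on several machines, the DiskPart session above could be turned into a script file. The following is only a sketch: the helper name `build_merge_script` and its parameters are mine, and it does nothing more than produce the text of the three commands shown above. It assumes the script would then be run from an elevated prompt with DiskPart's `/s` switch.

```python
def build_merge_script(child_vhd, depth=1, detach_first=False):
    """Return the text of a DiskPart script that merges a differencing VHD.

    child_vhd    -- path to the differencing disk (hypothetical example path below)
    depth        -- how many levels of the chain to merge into the parent
    detach_first -- emit "detach vdisk" first; only useful if the disk is
                    currently attached, otherwise DiskPart reports an error
    """
    if depth < 1:
        raise ValueError("depth must be at least 1")

    lines = ['select vdisk file="{}"'.format(child_vhd)]
    if detach_first:
        lines.append("detach vdisk")
    lines.append("merge vdisk depth={}".format(depth))

    # DiskPart scripts are plain text, one command per line.
    return "\r\n".join(lines) + "\r\n"


if __name__ == "__main__":
    # Print the script; redirect it to a file and run: diskpart /s merge.txt
    print(build_merge_script(r"c:\vpc\6236\6236A-NY-SQL-01.vhd", detach_first=True))
```

Keep a backup of both VHD files before running anything like this, because the merge rewrites the base disk in place.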

Gotcha 2: After booting the virtual machine, I was able to log in using the pre-defined Student account. However, when I tried to run SQL Server Management Studio, I got an error that the SQL Server 2008 evaluation period had expired. I attempted to set back the Windows Server 2008 (guest OS) system date, but after a few seconds the date would revert to the present date. More binging the Internet solved the issue.

- Shut down the VM.

- Open the vmc file in Notepad.

- Change the last six digits of the time_bytes value to a date a year ago (070109):

<time_bytes type="bytes">57002400060004070109</time_bytes>

- Further down the file, disable time synchronization between the guest and host OS:

<host_time_sync>

<enabled type="boolean">false</enabled>

<frequency type="integer">15</frequency>

<threshold type="integer">10</threshold>

</host_time_sync>

- Boot the VM. Now change the guest system date to 07-01-09.

Success!
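For a classroom full of machines, the two .vmc edits could be applied with a small script instead of Notepad. This is a minimal sketch under stated assumptions: the function name `patch_vmc` is mine, it only knows about the `time_bytes` and `host_time_sync` elements shown above, and it treats the last six characters of `time_bytes` as the date simply because that is what worked in the manual steps. It works on the XML text, so reading and writing the file (and its encoding) is left to the caller.

```python
import xml.etree.ElementTree as ET


def patch_vmc(xml_text, mmddyy):
    """Return .vmc XML text with the stored date replaced and host time sync off.

    xml_text -- contents of the .vmc file as a string
    mmddyy   -- six digits that replace the tail of time_bytes, e.g. "070109"
    """
    if len(mmddyy) != 6 or not mmddyy.isdigit():
        raise ValueError("mmddyy must be exactly six digits")

    root = ET.fromstring(xml_text)

    # Replace the last six digits of the stored clock value.
    time_bytes = root.find(".//time_bytes")
    if time_bytes is None or not time_bytes.text:
        raise ValueError("time_bytes element not found")
    time_bytes.text = time_bytes.text.strip()[:-6] + mmddyy

    # Stop the guest clock from snapping back to the host's date.
    enabled = root.find(".//host_time_sync/enabled")
    if enabled is None:
        raise ValueError("host_time_sync/enabled element not found")
    enabled.text = "false"

    return ET.tostring(root, encoding="unicode")
```

Shut down the VM and keep a copy of the original .vmc file before overwriting it with the patched text.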
