Web API CORS Adventures

I’ve been doing some work with ASP.NET Web API and I’m setting up a demo service in the cloud (more on this in a future post). The service could potentially be accessed by any user. For demo purposes, I wanted to show how a jQuery script in a web page can invoke the service. Of course, this requires a cross-domain JavaScript call. If you have experience with web services programming, you might recall that a few years ago this scenario was notoriously difficult to implement because the browsers would just drop the call. However, with the recent interest in cloud deployments, things are getting much easier, although traps await you.

I settled on ASP.NET Web API because of its invocation simplicity and growing popularity. If you are new to Web API, check the Your First Web API tutorial. To execute safely, cross-domain JavaScript calls need to adhere to the Cross-Origin Resource Sharing (CORS) mechanism. To support CORS in your Web API service, you need to update your ASP.NET MVC 4 project as described here. This involves installing the Microsoft ASP.NET Cross-origin Support package (currently in a prerelease state) and its dependencies. You also need the latest versions of System.Web.Helpers, System.Web.Mvc, and other packages. Once all dependencies are updated, add the following line to the end of the Register method of WebApiConfig.cs:

    config.EnableCors(new EnableCorsAttribute("*", "*", "*"));
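The three "*" arguments are, in order, the allowed origins, headers, and methods. To give a rough idea of what the wildcard origin means on the browser side, here is a minimal sketch of how a browser evaluates the Access-Control-Allow-Origin response header for a request made without credentials. The helper name originAllowed and the example.com addresses are made up for illustration; this is not part of jQuery or Web API.

```javascript
// Hypothetical helper: mirrors the browser-side origin check for a
// cross-origin request made without credentials.
function originAllowed(allowOriginHeader, pageOrigin) {
  // No header at all: the browser blocks the response.
  if (allowOriginHeader == null) return false;
  // Either the wildcard or an exact match of the page's origin passes.
  return allowOriginHeader === '*' || allowOriginHeader === pageOrigin;
}

console.log(originAllowed('*', 'http://example.com'));                     // true
console.log(originAllowed('http://other.example', 'http://example.com'));  // false
console.log(originAllowed(undefined, 'http://example.com'));               // false
```

For anything beyond a demo, listing specific origins instead of the wildcard is usually the safer choice.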

After testing locally, I deployed the service to a virtual machine running on Azure (no surprises here). For the client, I changed the index.cshtml view to use jQuery to make the call via HTTP POST. I decided on POST because I didn’t want to deal with JSONP complexity and because the payload of the complex object I’m passing might exceed 1,024 bytes. The most important code section in the client-side script is:

    var DTO = JSON.stringify(payload); // serialize the object to a JSON string

    jQuery.support.cors = true; // tell jQuery to attempt cross-domain requests

    $.ajax({
        url: 'http://<server URL>', // calling Web API controller
        cache: false,
        crossDomain: true,
        type: 'POST',
        contentType: 'application/json; charset=utf-8',
        data: DTO,
        dataType: 'json',
        success: function (payload) { /* … */ }
    })
    .fail(function (xhr, textStatus, err) { /* .. */ });

Now, this is where you might have some fun. As it turned out, Chrome would execute the call successfully right off the bat. On the other hand, IE 10 barked with Access Denied. This predicament and the ensuing research sent me in the wrong direction and led me to believe that IE doesn’t support CORS and that I had to use a script, such as jQuery.XDomainRequest.js, as a workaround. With the script in place, the call would now go out to the server, but the server would return “415 Unsupported Media Type”. Many more hours lost in research… (Fiddler does wonders here). The reason was that the script delegates the call to the XDomainRequest object, which doesn’t support custom request headers. Consequently, the POST request won’t include the Content-Type: application/json header, and the server rejects the call because it can’t find a formatter to deserialize the payload.
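To make the 415 response easier to picture, here is a minimal sketch of a server choosing a formatter from the request’s Content-Type header. It is a simplified illustration, not Web API’s actual pipeline; the formatters table and the readBody function are hypothetical names.

```javascript
// Hypothetical formatter table keyed by media type.
const formatters = {
  'application/json': (body) => JSON.parse(body),
};

function readBody(contentType, body) {
  // Drop parameters such as "; charset=utf-8" and normalize case.
  const mediaType = (contentType || '').split(';')[0].trim().toLowerCase();
  const formatter = formatters[mediaType];
  if (!formatter) {
    // No formatter matches the (missing or unknown) media type.
    return { status: 415, error: 'Unsupported Media Type' };
  }
  return { status: 200, value: formatter(body) };
}

// With the header, the payload is deserialized.
console.log(readBody('application/json; charset=utf-8', '{"id":1}').status); // 200
// Without it, as with XDomainRequest, the same payload is rejected.
console.log(readBody(undefined, '{"id":1}').status); // 415
```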

As it turns out, you don’t need scripts. IE drops the call with Access Denied because its default security settings disallow cross-domain calls. To change this:

- Add the service URL to the Trusted Sites in IE. This is not strictly required, but it’s a good idea anyway.

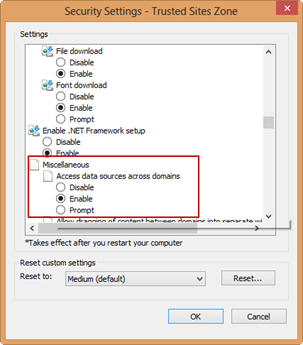

- Open IE Internet Options and select the Security tab. Then, select Trusted Sites and click the Custom Level button.

- In the Security Settings dialog box, scroll down to the Miscellaneous section and set “Access data sources across domains” to Enable. Restart your computer.

Apparently, Microsoft learned their lesson from all the security exploits and decided to shut the cross-domain door. IMO, a better option, one that would have prevented many hours of debugging and tracing, would have been to detect that JavaScript is attempting CORS (jQuery.support.cors = true) and explain the steps to change the default settings or, better yet, to implement CORS preflight as the other browsers do (Chrome submits an OPTIONS request to ask the server if the operation is allowed before the actual POST).
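For context on when that OPTIONS request appears, here is a simplified sketch of the “simple request” rules from the CORS specification. A POST with Content-Type application/json, like the call in this post, falls outside them and therefore triggers a preflight. The function name needsPreflight is made up, and the check ignores some finer points of the specification (such as limits on header values).

```javascript
// Methods, headers, and content types that do not trigger a preflight.
const SIMPLE_METHODS = ['GET', 'HEAD', 'POST'];
const SIMPLE_HEADERS = ['accept', 'accept-language', 'content-language', 'content-type'];
const SIMPLE_CONTENT_TYPES = [
  'application/x-www-form-urlencoded',
  'multipart/form-data',
  'text/plain',
];

// Hypothetical helper: returns true when the browser would send OPTIONS first.
function needsPreflight(method, headers) {
  if (!SIMPLE_METHODS.includes(method.toUpperCase())) return true;
  for (const [name, value] of Object.entries(headers || {})) {
    const lower = name.toLowerCase();
    if (!SIMPLE_HEADERS.includes(lower)) return true;
    if (lower === 'content-type') {
      // Compare only the media type, ignoring "; charset=..." parameters.
      const mediaType = String(value).split(';')[0].trim().toLowerCase();
      if (!SIMPLE_CONTENT_TYPES.includes(mediaType)) return true;
    }
  }
  return false;
}

console.log(needsPreflight('POST', { 'Content-Type': 'application/json; charset=utf-8' })); // true
console.log(needsPreflight('POST', { 'Content-Type': 'text/plain' }));                      // false
console.log(needsPreflight('GET', {}));                                                     // false
```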

UPDATE 9/10/2013

When you debug or test with the web server built into Visual Studio, the browser opens the page at localhost:portnumber. However, the browser (I tested this with IE and Chrome) does not consider the port to be part of the security identifier (origin) used for Same Origin Policy enforcement, and the call will fail.
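When diagnosing this, it may help to compare against the standard definition of an origin (scheme, host, and port), which is what the URL API reports. Browser behavior can deviate from it, as described above. The sketch below uses made-up port numbers and paths, and sameOrigin is a hypothetical helper.

```javascript
// Hypothetical helper: compares two addresses by their standard origin
// (scheme + host + port) as reported by the URL API.
function sameOrigin(a, b) {
  return new URL(a).origin === new URL(b).origin;
}

// Made-up addresses: a page on the dev server and a service on another port.
const page = 'http://localhost:1234/index';
const service = 'http://localhost:5678/api/values';

console.log(new URL(page).origin);                                    // http://localhost:1234
console.log(sameOrigin(page, service));                               // false
console.log(sameOrigin(page, 'http://localhost:1234/api/values'));    // true
```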