Atlanta MS BI and Power BI Group Meeting on August 1st (Power BI Automation)

Please join us online for the next Atlanta MS BI and Power BI Group meeting on Monday, August 1st, at 6:30 PM ET. We’ll have two presentations by 3Cloud. For more details and to sign up, visit our group page.

| Presentations: | 1. “Power BI Meets Programmability – TOM, XMLA, and C#” by Kristyna Hughes; 2. “Automation Using Tabular Editor Advanced Scripting” by Tim Keeler |

| Date: | August 1st |

| Time: | 6:30 – 8:30 PM ET |

| Place: | Click here to join the meeting |

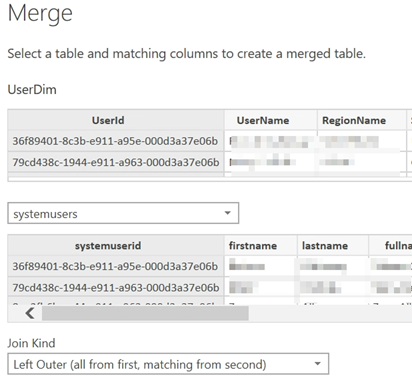

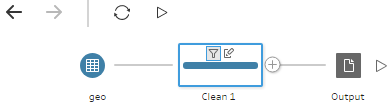

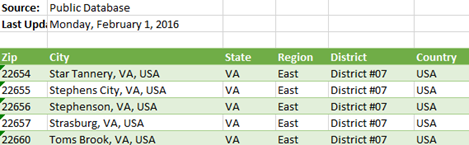

| Overview: | Power BI Meets Programmability – TOM, XMLA, and C#: Tune in to learn how to programmatically add columns and measures to Power BI data models using TOM, XMLA, and C#! The XMLA endpoint is a powerful tool available in the online Power BI service that lets report developers connect to their data models and adjust a variety of entities outside the Power BI Desktop application. Combined with a .NET application, it can be used to deploy changes to your Power BI data models programmatically. Automation Using Tabular Editor Advanced Scripting: See examples of how advanced scripting in Tabular Editor can be used to automate the creation of DAX measures and calculation groups and to provide insights into your model, while reducing development time and manual effort. |

| Speakers: | Kristyna Hughes’s experience includes implementing and managing enterprise-level Power BI instances, training teams on reporting best practices, and building templates for scalable analytics. Currently, Kristyna is a data & analytics consultant at 3Cloud and enjoys answering qualitative questions with quantitative answers. Check out her blog at https://dataonwheels.wordpress.com/ and connect with her on LinkedIn at https://www.linkedin.com/in/kristyna-hughes-dataonwheels/ Tim Keeler is a data analytics professional with over 17 years of experience developing cost-to-serve models and business intelligence solutions and managing teams of other data professionals to help organizations achieve their strategic objectives. Connect with him on LinkedIn at https://www.linkedin.com/in/tim-keeler-32631912/ |

| Prototypes without Pizza | Power BI Latest |