Now that the official word got out during Ted Kummert’s keynote today at SQL PASS, I can open my mouth about Crescent, the code name of an ad-hoc reporting layer that will be released in the next version of SQL Server, code-named Denali. Crescent is a major enhancement to Reporting Services and Microsoft’s self-service BI strategy. Up to now, SSRS didn’t have a web-based report designer. Denali will change that by adding a brand new report authoring tool that will be powered by Silverlight. So, this will be the fifth report designer after BIDS, Report Builder 1.0 (not sure if RB 1.0 will survive SQL 11), Report Builder 3.0, and Visual Studio Report Designer.

Besides bringing report authoring to the web, what’s interesting about Crescent is that it will redefine the report authoring experience and even what a report is. Traditionally, Reporting Services reports (as well as reports from other vendors) have been “canned”, that is, once you publish the report, its layout becomes fixed. True, you could implement interactive features to jazz up the report a bit, but changes to the original design, such as adding new columns or switching from a tabular layout to a crosstab layout, require opening the report in a report designer, making the changes, and previewing and republishing the report. As you may recall, each of the previous report designers has separate design and preview modes.

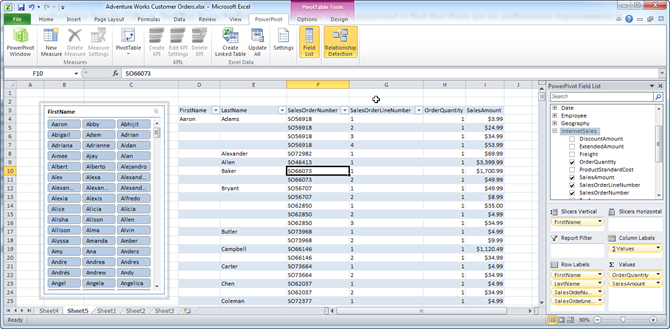

Crescent will change all of this by making the reporting experience more interactive and similar to Excel PivotTables and tools from other vendors, such as Tableau. Those of you who saw the keynote today got a sneak preview of Crescent and its capabilities. You saw how the end user can quickly create an interactive report by dragging metadata, à la Microsoft Excel, and then with a few mouse clicks change the report layout without switching to design mode. In fact, Crescent doesn’t have a formal design mode.

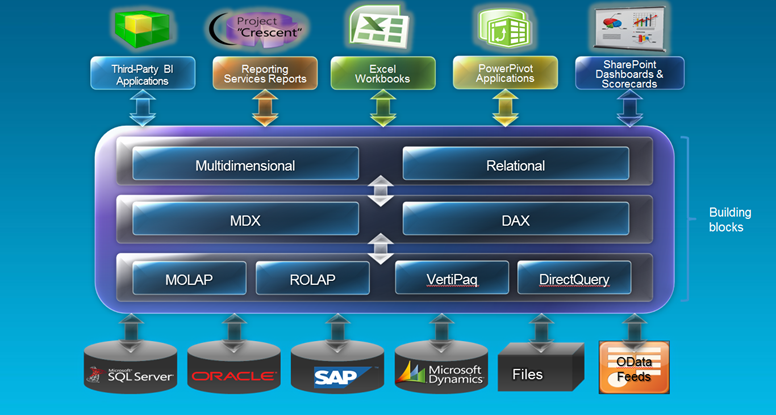

How will this magic happen? As it turns out, Crescent will be powered by a new ad-hoc model called Business Intelligence Semantic Model (BISM) that will probably be a fusion between SMDL (think Report Builder models) and PowerPivot, with the latter now also supporting relational data sources. Amir’s demo showed an impressive response time when querying a billion rows from a relational database. I still need to wrap my head around the new model as more details become available (stay tuned), but I am excited about it and the new BI scenarios it will make possible besides traditional standard reporting. It’s great to see the Reporting Services and Analysis Services teams working together, and I am sure good things will happen to those who wait. Following the trend toward SharePoint as a BI hub, Crescent unfortunately will be available only in SharePoint mode. At this point, we don’t know what Reporting Services and RDL features it will support, but one can expect tradeoffs given that this is its first release, with a brand new architecture and a self-service BI focus.

So, Crescent is a code name for a new web-based fusion between SSRS and BISM (or, to be more accurate, Analysis Services in VertiPaq mode). I won’t be surprised if its official name turns out to be PowerReport. Now that I’ve piqued your interest, where is Crescent? Crescent is not included in CTP1. More than likely, it will be in the next CTP, which is expected around the January timeframe.