Processing AAS Models Asynchronously

Analysis Services normally requires a live connection between the client and server. If the connection is interrupted, the server considers that the client has lost interest and cancels the running operation.Strictly speaking, there is a ‘long running operations’ (LRO) protocol built over the HTTP XMLA messaging protocol which mitigates the issue of disconnects by attempting to keep alive the request/responses. This helps avoid the HTTP disconnection problem for the client libraries and improve the reliability of client applications. But in the end, your mileage will vary depending on the reliability of the networks in between the client machine and the service endpoint.

You don’t have to worry about the connection quality when you send queries as they usually execute fast. Processing, however, is a different story as it might take very long. As a reference point, a 4 billion database can take easily half a day to refresh. Babysitting processing and hoping that the client doesn’t lose the connection, or that SSMS (or whatever management client you use) doesn’t crash is not fun. Not to mention that such issues might not be immediately evident. For example, a few times when we attempted to process the afore mentioned database, SSMS either hung or crashed at the end of the processing task with errors such as “The operation has been cancelled”. You can easily waste hours and even days to restart failed processing and this can get frustrating.

Is it possible to fire and forget processing tasks? If you use Azure Analysis Services (AAS), you’re in luck.

AAS supports processing tasks asynchronously with REST APIs. The difference is that the service component (REST API) maintains the connectivity to the server – thus reducing the chances of HTTP disconnections from the external application. Microsoft has provided a RestAPISample console app to help you get started. As with any REST API invocation, you’d need to register the app in the Azure Portal so that you can authenticate successfully. Other than that, it’s simple to invoke the REST API and Microsoft has provided step-by-step instructions.

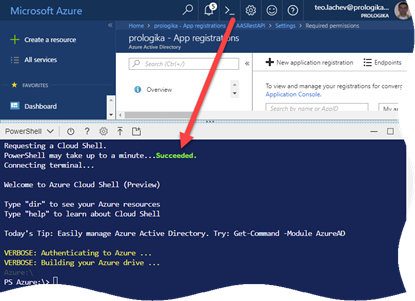

Another, although synchronous, option is to run a PowerShell script in the Azure Cloud Shell environment. You can upload the script as a file. The script can ask you to provide credentials interactively (Get-Credentials method) or you can hardcode the credentials. Here is an example of a PowerShell script that processes a specific table.

#prerequisites

#Install-Module -Name SqlServer -AllowClobber

#Install-Module -Name Azure.AnalysisServices

Import-Module SqlServer

Import-Module Azure.AnalysisServices

$ServerName = “asazure://aspaaseastus2.asazure.windows.net/prologika”

$DatabaseName = “AdventureWorks Tabular Model SQL 2012″

$TableName =”Reseller Sales”

$Credential = Get-Credential

# —or —

# $secPwd = ConvertTo-SecureString “PlainTextPassword” -AsPlainText -Force

# $Credential = New-Object System.Management.Automation.PSCredential (“username”, $secPwd)

$result = Invoke-ProcessTable -Server $ServerName -Database $DatabaseName -TableName $TableName –RefreshType Full -Credential $Credential

Of course, the PowerShell option is not immune to connection interruptions but the hope is that because you’re running it on Azure, the connection would be more stable compared to initiating it on your laptop. And you’re offloading the task to Azure so you don’t have to install or run anything locally.