How Much Are You Really Saving?

I helped an ISV a while back with their BI model design. They purchased a data transformation tool for $5,200/year because it’s “simple to use” (the tool was designed for self-service data transformation tasks by business analysists) and “relatively inexpensive”. The idea was to run the tool manually every time they have new data, import data from text files, transform, and output the data in another set of files, and then load the transformed data in Power BI. However, as it typically happens, the data transformation complexity quickly outgrew this approach. What did the ISV learn along the way?

- Stage the data – Although it’s tempting to do transformations on the fly, data typically must be staged so you can query and manipulate it. This is also required to compare the input dataset with the target dataset and take care of things like Type 2 slowly changing dimensions that may sneak in unexpected.

- Get a relational database – At much lower price point of purchasing a “user-friendly” ETL tool that does transforms only, the ISV could have bought a scalable SQL Server Standard Edition priced at $1,859 per core (or $7,500 for four cores). Mind you that this is a perpetual license that will typically last you 2-3 years until it’s time for upgrade. And they must pay only for production use (remember that on-prem SQL Server is free for dev and test). Or, they could have opted for an Azure SQL Database and pay as they go (Microsoft gives away $120,000 as Azure Cloud credits for qualifying startups for two years). Or, if they are really under budget and don’t have large data volumes, they could have gone with the free SQL Server Express edition.

- Use the ELT pattern – What happens when you outgrow the capabilities of the “user-friendly” tool and you must migrate to a professional tool, like SSIS or ADF? Start over. You don’t want to do this, trust me, because more than 60% of the effort to build a BI solution typically goes into data prep. Personally, I’m a big fan of the ELT pattern (see my blog “3 Techniques to Save BI Implementation Effort” for more info on this pattern) that relies heavily on T-SQL and stored procedures. If you go ELT, the tool choice becomes irrelevant as you use it only to orchestrate data tasks. For example, you use Control Flow and very simple Data Flow (just a simple source-destination data flow to stage data) in SSIS. And as a bonus, if one day you migrate to Azure Synapse, you’ll find that it recommends ELT too. Since they wanted to run ETL occasionally, they could have used Visual Studio Community Edition and the SSIS extension. Or, they could have gone completely free by using an open-source ETL tool, like Talend.

When evaluating tools, cost is not the only factor. Your design must be flexible and capable of accommodating changes which are sure to happen.

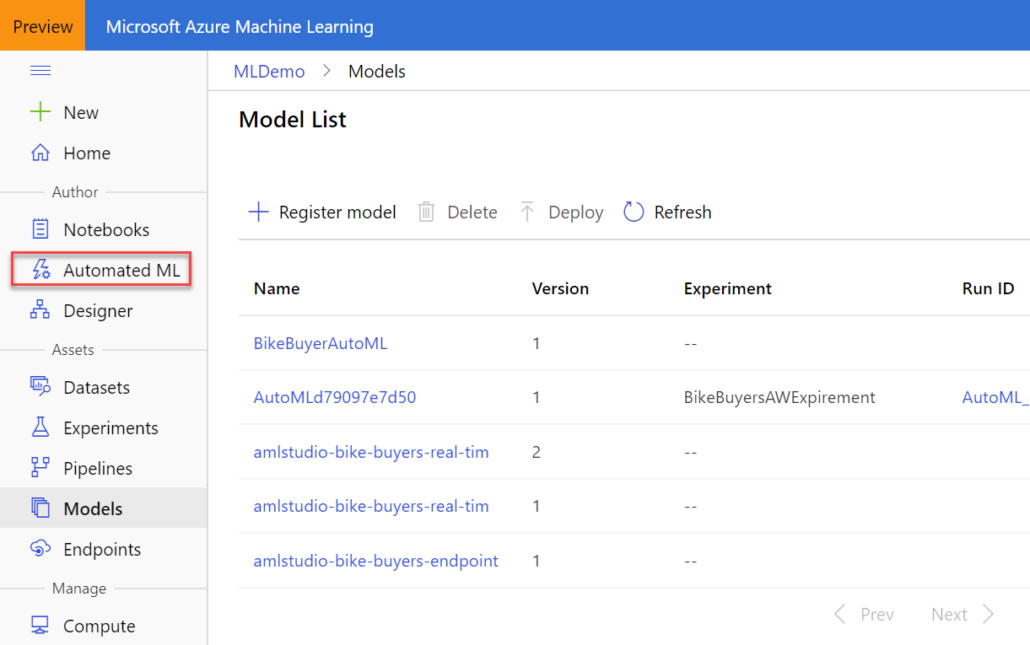

Do you know that according to Gartner, at least five of the top 10 technology trends for 2020 will involve predictive analytics? And the third on the list is “democratization” to deliver it to non-specialists. With the growing demand for predictive analytics, Automated Machine Learning (AutoML) aims to simplify and democratize predictive analytics so business users can create their own predictive models. The promise of AutoML is to bring predictive analytics to business users, just like Power BI democratizes data analytics, Power Apps democratizes app dev, and Power Query democratizes data shaping and transformation.

Do you know that according to Gartner, at least five of the top 10 technology trends for 2020 will involve predictive analytics? And the third on the list is “democratization” to deliver it to non-specialists. With the growing demand for predictive analytics, Automated Machine Learning (AutoML) aims to simplify and democratize predictive analytics so business users can create their own predictive models. The promise of AutoML is to bring predictive analytics to business users, just like Power BI democratizes data analytics, Power Apps democratizes app dev, and Power Query democratizes data shaping and transformation.