Azure Synapse: The Good, The Bad, and The Ugly

Cloud deployments are the norm nowadays for new software projects, including BI. And Azure Synapse shows a great potential for modern cloud-based data analytics. Here are some high-level pros and cons to keep in mind for implementing Azure Synapse-centered solutions that I harvested from my real-life projects and workshops.

The Good

There is plenty to like in Azure Synapse which is the evaluation of Azure SQL DW. If you’re tasked to implement a cloud-based data warehouse, you have a choice among three Azure SQL Server-based PaaS offerings, including Azure SQL Database, Azure SQL Managed Instance, and Azure Synapse. In a nutshell, Azure SQL Database and Azure SQL MI are optimized for OLTP workloads. For example, they have full logging enabled and replicate each transaction across replicas. Full logging is usually a no-no for decent size DW workloads because of the massive ETL changes involved.

In addition, to achieve good performance, you’ll find yourself moving up the performance tiers and toward the price point of the lower Azure Synapse SKUs. Further, unlike Azure SQL Database, Azure Synapse can be paused, such as when reports hit a semantic layer instead of DW, and this may offer additional cost cutting options.

At the heart of Azure Synapse is the SQL Pool (previously known as Azure SQL DW) which hosts your DW. As an MPP system, it can scale to petabytes of data with proper sizing and good design. The obvious benefit is that for the most part (see the Ugly section discussing exclusions) you can carry your SQL Server skills to Azure Synapse.

As a general rule of thumb, Azure Synapse should be at the forefront of your modern cloud DW with data loads starting with 500 GB.

I liked the integration with Azure Data Lake Storage (ADLS). Previously, you had to set up external tables to access files in ADLS via PolyBase. This is now unnecessary thanks to the serverless (on-demand) endpoint which also replaces the Gen1 U-SQL with T-SQL. All you have to do is link your ADLS storage accounts and use the T-SQL OPENROWSET construct to query your ADLS files and exposed them as SQL datasets. Not only does this opens new opportunities for ad hoc reporting directly on top of the data lake, but it also can help you save tons of ETL effort to avoid staging the ADLS data while loading your DW.

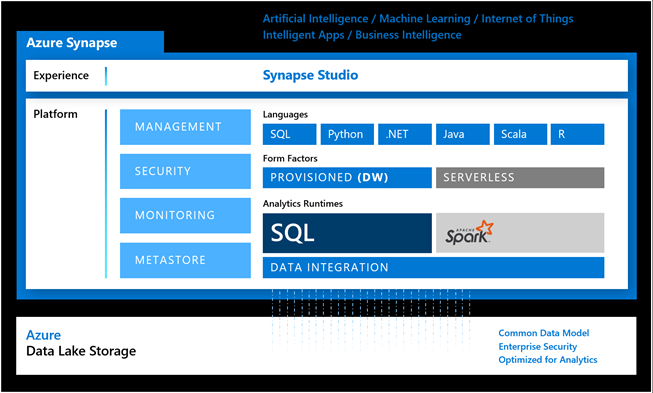

As the diagram shows, you can also provision an Azure Spark runtime under a Synapse workspace. This opens the possibility for using Azure Spark/Databricks for data transformation and data science. For example, a data scientist can create notebooks in Synapse Studio that use a programming language of their choice (SQL, Python, .NET, Java, Scala, and R are currently supported).

The Bad

You have two coding options with Synapse.

- You can use the respective development environment, such as Azure Data Factory Studio for ADF development and Power BI Desktop for Power BI report development.

- You can use Synapse Studio which attempts to centralize coding in one place.

Besides the ADLS integration and notebooks, I find no advantages in the Synapse Studio “unified experience”, which is currently in preview. In fact, I found it confusing and limiting. The idea here is to offer an online development environment for coding and accessing in one place all artifacts surrounding your DW project. Currently, these are limited to T-SQL scripts, notebooks, Azure Data Factory pipelines, and Power BI datasets and reports.

However, I see no good reason to abandon the ADF Studio and do ETL coding in Synapse Studio. In fact, I can only see reasons against doing so. For example, Synapse Studio lags in ADF features, such as source code integration and wrangling workflows. For some obscure reason, Synapse Studio separates data flows from ADF pipelines in its own Data Flows section in the Development Hub. This is like putting the SSIS data flow outside the package. Not to mention that inline data flows should be deemphasized since ELT (not ETL) is the preferred pattern for loading Azure Synapse. To make things worse, once you decide to embrace Synapse Studio for developing ADF artifacts, you can use only Synapse Studio because the ADF pipelines are not available outside Synapse.

On the Power BI side of things, you can create a Power BI dataset in Synapse Studio. All this saves is the effort to go through two Get Data steps in Power BI Desktop to connect to your Azure Synapse endpoint. You can also create Power BI reports using Power BI Embedded in Azure Synapse. Why would I do this outside Power BI Desktop is beyond me. Perhaps, I’m missing the big picture and more features might be coming as Synapse evolves. Speaking of evolution, as of this time, only SQL pools are generally available. Everything else is in preview.

The Ugly

Developers moving from on-prem BI implementations to Azure Synapse, would naturally expect all SQL Server features to be supported, just like moving from on-prem SSAS to Azure AS. However, they will quickly find that Azure Synapse is “one of a kind” SQL Server. The nice user interface in SSMS for carrying out admin tasks, such as creating logins and assigning logins to roles, doesn’t work with Azure Synapse (all changes require T-SQL). SQL Profiler doesn’t work (tip: use the Profiler extension in Azure Data Studio for tracing queries in real time).

Because Azure Synapse uses Azure SQL Database, it’s limited to one physical database, so you must rely on using schemas to separate your staging and DW tables. There are also T-SQL limitations, such as my favorite MERGE for ELT-based UPSERTs is not yet supported (currently in preview for hash and round-robin distributed tables but not for replicated tables, such as dimension tables). So, don’t assume that you can seamlessly migrate your on-prem DW databases to Synapse. Also, don’t assume that your SQL Server knowledge will suffice. Prepare to seriously study Azure Synapse!