Power BI Dynamic M Query Parameters – Another Opportunity Missed for DirectQuery Users

UPDATE 17-Feb-2022: Dynamic query parameters now support popular relational data sources, and I posted an updated blog.

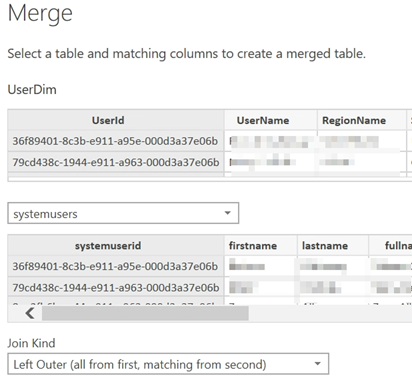

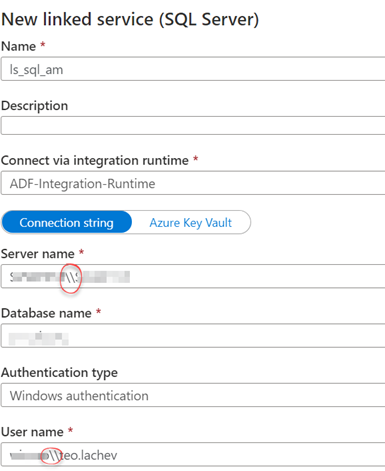

I recently had a Power BI advisory engagement with a large ISV. They had many customers, and each customer’s data was hosted in its own database for security reasons, with all databases having an identical schema. Power BI reports had to connect using DirectQuery to avoid refreshing data (real-time BI). Naturally, the customer envisioned a single set of reports with the ability to switch the dataset connection to the respective database depending on the user identity and company association. In addition, they wanted to piggyback on the existing database security policies by having each visual call a stored procedure and pass the user identity as a dynamic parameter.

Can Power BI do this? Unfortunately, no. The main architectural issue is that although the model (VertiPaq) and query (Power Query) layers are bundled in Power BI Desktop (and in a single dataset when published), they are like two separate universes. Naturally, this is not an issue when data is imported, but it becomes important with DirectQuery. As it stands, the only communication between these two layers happens when Power Query passes the data back to the model. You can define query parameters, but they are static, meaning that they must be specified on the dataset settings page in Power BI Service. In other words, query parameters can’t reference DAX constructs, such as USERPRINCIPALNAME(), and therefore can’t respond to runtime conditions.

That’s why I initially rejoiced when Microsoft announced Dynamic M Query Parameters, a feature that just went into public preview in the Power BI Desktop October 2020 release. Unfortunately, a better name for this feature would have been Kusto Dynamic Parameters, since the primary design intent was to support Azure Data Explorer (internally named Kusto). It doesn’t apply to DirectQuery connections to most relational databases.

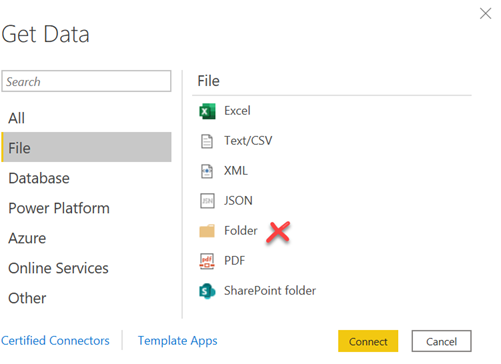

Although nothing in the product indicates it, Power BI has two types of connectors: native and M-based. Native connectors target the most popular relational data sources: T-SQL (Azure SQL Database, SQL Server, Synapse), PL/SQL (Oracle), Teradata, and relational SAP HANA. Dynamic query parameters won’t work with native connectors. The rest (Microsoft-provided and custom) are M-based. If an M-based connector supports DirectQuery, it should support dynamic query parameters too. For example, besides Azure Data Explorer, other M-based data sources that support DirectQuery, and therefore dynamic parameters, are Amazon Redshift, Snowflake, and Google BigQuery.
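To make the rule of thumb concrete, here is a minimal Python sketch of it. It is not a Power BI API: the `Connector` class, its fields, and the sample entries are hypothetical names used only to restate the paragraph above, and the classification reflects the preview state described in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connector:
    """Hypothetical description of a Power BI connector (illustration only)."""
    name: str
    kind: str           # "native" or "m" (M-based), per the post's classification
    direct_query: bool  # whether the connector supports DirectQuery


def supports_dynamic_parameters(connector: Connector) -> bool:
    # Rule from the post: only M-based connectors that support DirectQuery
    # can use dynamic M query parameters; native connectors cannot.
    return connector.kind == "m" and connector.direct_query


# Sample entries taken from the examples named in the post.
CONNECTORS = [
    Connector("SQL Server", "native", True),
    Connector("Oracle", "native", True),
    Connector("Azure Data Explorer", "m", True),
    Connector("Snowflake", "m", True),
]

if __name__ == "__main__":
    for c in CONNECTORS:
        verdict = "yes" if supports_dynamic_parameters(c) else "no"
        print(f"{c.name}: dynamic parameters supported? {verdict}")
```

The point of the sketch is that DirectQuery support alone is not enough; the connector type is what decides the outcome, which is exactly why the ISV scenario with SQL Server databases falls outside the feature.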

I hope Microsoft will revamp this feature to support the popular relational databases and DAX functions, so we can address more involved DirectQuery scenarios and make parameters truly dynamic.