| Category | Capability | My Rank | Teo’s Comments |

| Infrastructure | BI Platform Administration, Security and Architecture

Capabilities that enable platform security, administering users, auditing platform access and utilization, optimizing performance and ensuring high availability and disaster recovery. | Medium to High | There are a lot of things here:

Security – More work is required to support external users in Power BI, Power BI Embedded, and SSRS

Administering users – Power BI supports a progressive deployment depending on the desired level of integration with Office 365 (unmanaged tenant, managed tenant, and federated AD)

Auditing – Power BI supports auditing access and utilization, but more work is required for proactive monitoring and improving its data governance capabilities

Performance and HA – Highly redundant and scalable backend, consisting of an SSAS Tabular farm and Azure services. |

Cloud BI

Platform-as-a-service and analytic-application-as-a-service capabilities for building, deploying and managing analytics and analytic applications in the cloud, based on data both in the cloud and on-premises | High | Power BI supports both pure cloud and hybrid architectures depending on data volumes and security requirements. No other vendor offers both architectures. |

Data Source Connectivity and Ingestion

Capabilities that allow users to connect to the structured and unstructured data contained within various types of storage platforms, both on-premises and in the cloud. | High | As of this time, Power BI supports close to 70 connectors to let you connect to cloud and on-premises data sources. No scripting required. |

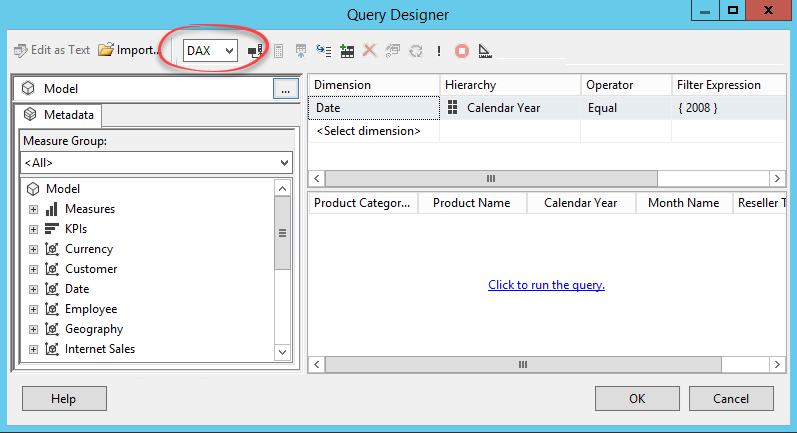

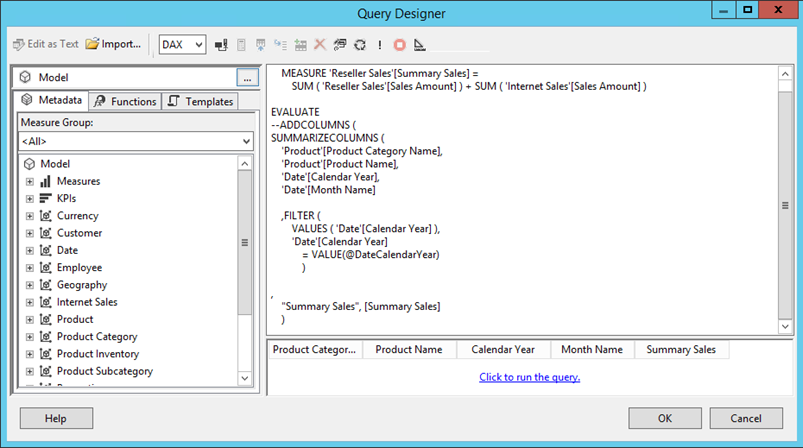

| Data Management | Metadata Management

Tools for enabling users to leverage a common SOR semantic model and metadata. These should provide a robust and centralized way for administrators to search, capture, store, reuse and publish metadata objects such as dimensions, hierarchies, measures, performance metrics/key performance indicators (KPIs), and report layout objects, parameters and so on. Administrators should have the ability to promote a business-user-defined data mashup and metadata to the SOR metadata. | Medium to High | Microsoft offers an organizational BI Semantic Model (BISM), which is typically implemented as an Analysis Services Tabular or Multidimensional model. On the self-service side of things, users can create Excel or Power BI Desktop models. IT can promote a self-service Excel model to a Tabular model. IT can also upgrade a Power BI Desktop model to a Tabular model, although this is not officially supported because Power BI Desktop changes every month. |

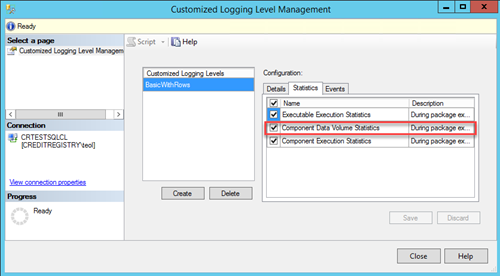

Self-Contained Extraction, Transformation and Loading (ETL) and Data Storage

Platform capabilities for accessing, integrating, transforming and loading data into a self-contained storage layer, with the ability to index data and manage data loads and refresh scheduling. | Medium | SSIS is the most popular on-premises ETL tool. More work is required to bring similar capabilities to the cloud (I think Azure Data Factory is a step backwards). |

Self-Service Data Preparation

The drag-and-drop, user-driven data combination of different sources, and the creation of analytic models such as user-defined measures, sets, groups and hierarchies. Advanced capabilities include semantic autodiscovery, intelligent joins, intelligent profiling, hierarchy generation, data lineage and data blending on varied data sources, including multistructured data | High | Power BI Desktop and Excel have a fantastic query editor (originating from Power Query) that scores big with business users. Other vendors that score high in the Magic Quadrant don’t have such native capabilities. Power BI and Excel have best-in-class self-service modeling capabilities (much better than Tableau). Azure Data Catalog can be used for dataset autodiscovery. |

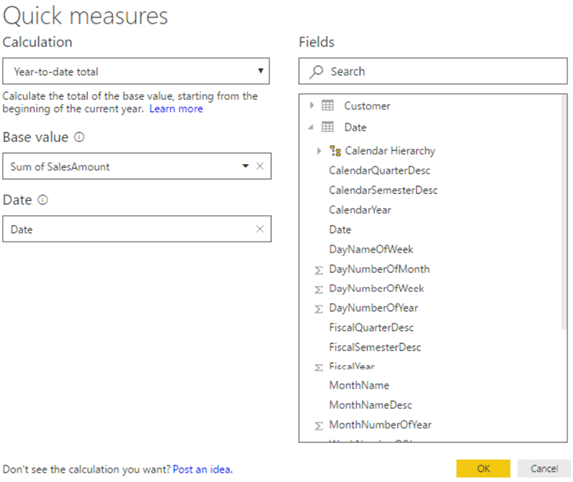

| Analysis and Content Creation | Embedded Advanced Analytics

Enables users to easily access advanced analytics capabilities that are self-contained within the platform itself or available through the import and integration of externally developed models. | High | Not sure what is meant here by “advanced analytics capabilities”. Power BI supports Quick Insights (brute-force machine learning), integration with R, Azure Machine Learning, clustering, forecasting, and binning, but I might be missing something. |

Analytic Dashboards

The ability to create highly interactive dashboards and content, with visual exploration and embedded advanced and geospatial analytics, to be consumed by others. | High | “Highly interactive dashboards and content” is what Power BI is all about. |

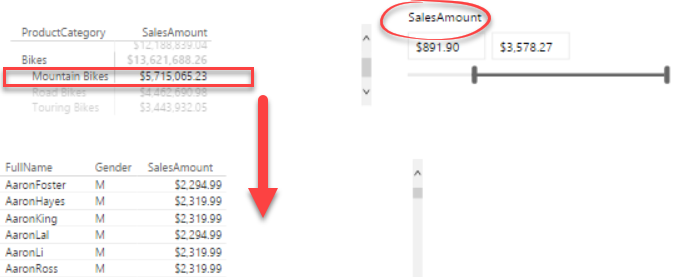

Interactive Visual Exploration

Enables the exploration of data via an array of visualization options that go beyond those of basic pie, bar and line charts to include heat and tree maps, geographic maps, scatter plots and other special-purpose visuals. These tools enable users to analyze and manipulate the data by interacting directly with a visual representation of it to display as percentages, bins and groups. | Medium | Power BI covers all stated requirements in this category. However, while its visualization capabilities are improving every week, more work is required to catch up with Tableau and Qlik in some areas, such as cell drillthrough, the ability to repeat visualizations (for example, nesting a bar in a table), conditional binding, expression-based properties and filters, and others. |

Smart Data Discovery

Automatically finds, visualizes and narrates important findings such as correlations, exceptions, clusters, links and predictions in data that are relevant to users without requiring them to build models or write algorithms. Users explore data via visualizations, natural-language-generated narration, search and NLQ technologies. | Medium to High | Through Quick Insights, Power BI can automatically find correlations, outliers, and other hidden trends. It doesn’t currently have native narrative capabilities, except for the custom visual from Narrative Science. Power BI is one of the few products that offer natural-language queries. |

Mobile Exploration and Authoring

Enables organizations to develop and deliver content to mobile devices in a publishing and/or interactive mode, and takes advantage of mobile devices’ native capabilities, such as touchscreen, camera, location awareness and natural-language query | High | Native apps for iOS, Android and Windows to surface both Power BI and SSRS reports. |

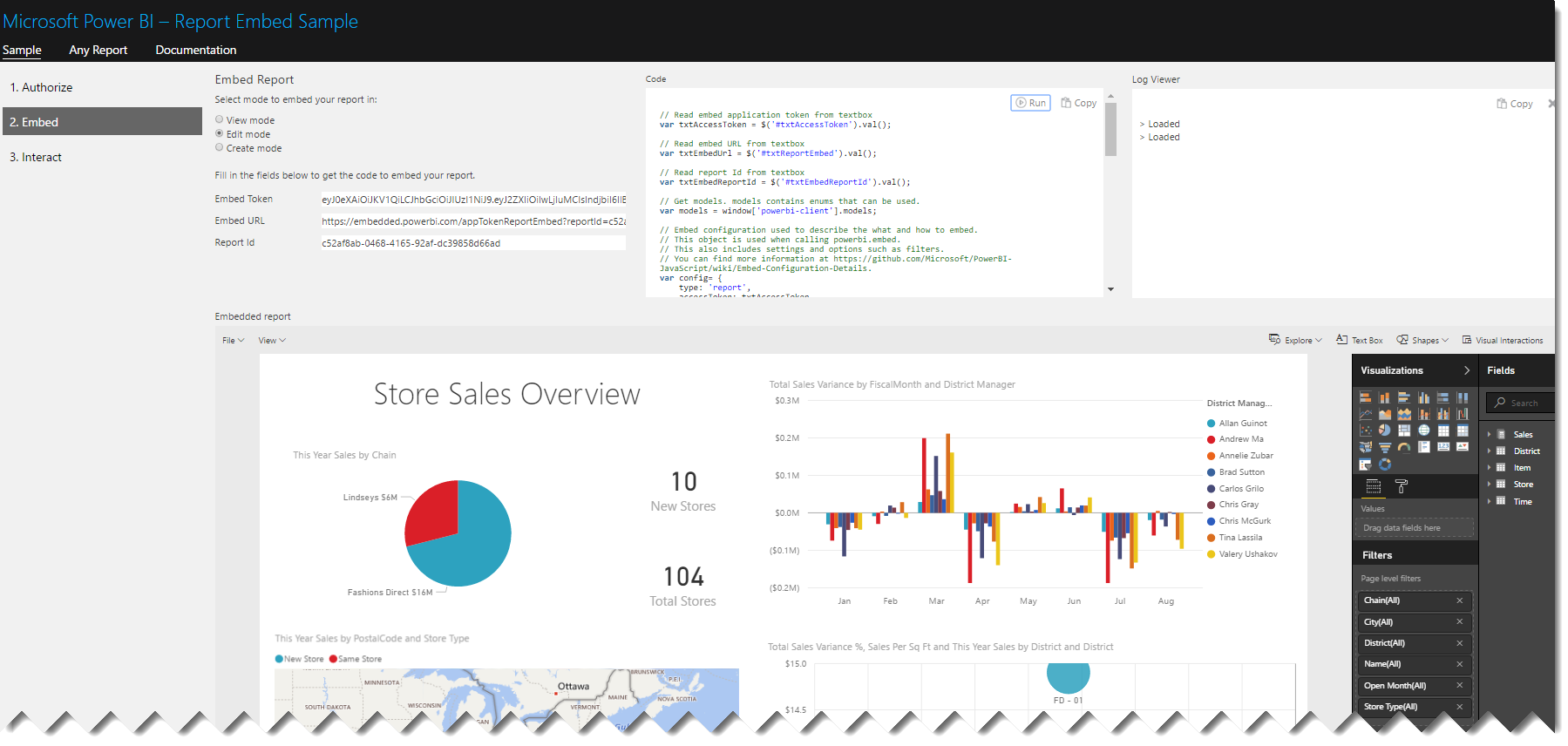

| Sharing of Findings | Embedding Analytic Content

Capabilities including a software developer’s kit with APIs and support for open standards for creating and modifying analytic content, visualizations and applications, embedding them into a business process, and/or an application or portal. These capabilities can reside outside the application (reusing the analytic infrastructure), but must be easily and seamlessly accessible from inside the application without forcing users to switch between systems. The capabilities for integrating BI and analytics with the application architecture will enable users to choose where in the business process the analytics should be embedded. | High | An Azure cloud service, Power BI Embedded allows you to embed reports for external users with a very appealing, cost-effective licensing model. On premises, SSRS offers similar capabilities. |

Publish, Share and Collaborate on Analytic Content

Capabilities that allow users to publish, deploy and operationalize analytic content through various output types and distribution methods, with support for content search, scheduling and alerts. Enables users to share, discuss and track information, analysis, analytic content and decisions via discussion threads, chat and annotations. | High | Power BI supports subscriptions and data alerts, but it could do better, such as by allowing an admin to subscribe other users. Power BI workspaces and groups allow users to share BI artifacts, participate in discussion lists, and share calendars. Power BI Mobile allows users to annotate reports. |

| Overall platform capabilities | Platform Capabilities and Workflow

This capability considers the degree to which capabilities are offered in a single, seamless product or across multiple products with little integration. | Medium to High | The integration hub is Power BI Service, which integrates Power BI reports, Excel reports, and SSRS reports. More work is required on integration with Excel reports that connect to SSAS models. |

Ease of Use and Visual Appeal

Ease of use to administer and deploy the platform, create content, consume and interact with content, as well as the visual appeal. | High | As far as deployment goes, Power BI is “five seconds to sign up, five minutes to wow!” As far as visual appeal goes, beauty is in the eye of the beholder, but Power BI has done a respectable job of following best visualization practices. |

I hope you’re enjoying the beginning of 2017 and you’re staying healthy and busy. It’s been a hectic and exciting period for me. I published the second edition of my “Applied Microsoft Power BI”
