Implementing Cube Settings

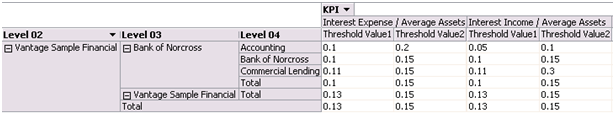

I had to tackle an interesting requirement the other day. A cube had a number of KPIs defined, but the threshold values that define the “good”, “bad”, and “so-so” status ranges had to be configurable for each KPI at any level of the organization dimension. I settled on implementing a measure group that intersects the organization dimension and a new KPI dimension that lists the KPI names.

For example, as the screenshot shows, each KPI has two threshold values (Threshold Value1 and Threshold Value2) which can be defined at any level of the organization hierarchy. Note that the parent values read the setting values directly from the fact table instead of being derived by rolling up from the children.

The first implementation approach that comes to mind is to set the AggregateFunction property of the measures in the measure group to None, so that the measure values associated with a member are read directly from the fact table. Some experimenting revealed the following “noble” truths about the None aggregate function:

- Books Online (BOL) is wrong. I refer to the following statement:

(None AggregateFunction) No aggregation is performed, and all values for leaf and nonleaf members in a dimension are supplied directly from the fact table for the measure group that contains the measure. If no value can be read from the fact table for a member, the value for that member is set to null.

Specifically, when None is used, the server loads the fact data into the measure group leaves (just as with any other aggregate function), but it doesn’t roll the values up to the dimension leaves. See Mosha’s post to understand the difference between the dimension leaves and the measure group leaves.

- As it stands, the None function is of questionable value. A narrow case exists where you may want to perform assignments or calculations in the measure group leaves, as the above post demonstrates. Why you would want to do this instead of performing them at the fact table level (or as named calculations), with the obvious performance advantages, is beyond me.

- There is no way to bring the measure group leaf values to the dimension leaves. Shoot me a note if you manage to come up with some clever hack to do so.

Note: It would have been great if the None function behaved as described in the documentation. Even better, it would be nice if the server automatically brought up the fact value when the cube slice results in one fact record, i.e. when the user has drilled down to the measure group grain. This would make it possible to implement text-based measures, such as comments or other text-based attributes, that don’t justify moving them to a dimension. If you agree, vote for this enhancement on connect.microsoft.com.

So, how do we get around this predicament? One option is to define a custom ~ unary operator for the Organization parent-child dimension so the server doesn’t roll up the measure. However, the custom operator will be applied to all measures sliced by the Organization dimension, which more than likely will be an issue. Of course, a trivial workaround is to duplicate the Organization dimension, but this leads to other disadvantages. IMO, a better solution might be to use the DataMember property and a scope assignment:

    SCOPE (
        MeasureGroupMeasures("KPI Configuration"),
        [Organization].[Organization Hierarchy].Members,
        [KPI].[KPI].[KPI]
    );
        THIS = ([Organization].[Organization Hierarchy].CurrentMember.DataMember);
    END SCOPE;

I scope at the KPI Configuration measure group where it intersects with the members of the Organization and KPI dimensions. The AggregateFunction property of the measures in the KPI Configuration measure group is now set to Sum. The tuple on the right side of the assignment returns the measure value associated directly with the current data member. The net result is that the assignment overwrites the value aggregated by Sum with the measure leaf value.
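
To make the difference concrete, here is a toy model in Python rather than MDX. It is only a sketch of the idea, not how Analysis Services computes cells. The organization members, the fact values, and the function names are all hypothetical. It contrasts what Sum would return for a member of a parent-child hierarchy with what the member's own fact row holds, which is the value the assignment is meant to expose.

```python
# Hypothetical organization hierarchy: member -> parent (None marks the root).
PARENT = {
    "Company": None,
    "Division A": "Company",
    "Division B": "Company",
    "Team A1": "Division A",
}

# Hypothetical fact rows for one KPI threshold: the value stored
# directly against each member. Division B has no row of its own.
FACTS = {"Company": 90, "Division A": 80, "Team A1": 70}


def children(member):
    """Return the direct children of a member."""
    return [m for m, p in PARENT.items() if p == member]


def sum_rollup(member):
    """Model of Sum: the member's own fact value plus all descendants."""
    own = FACTS.get(member, 0)
    return own + sum(sum_rollup(child) for child in children(member))


def data_member_value(member):
    """Model of the assignment: only the member's own fact value.

    None stands in for an empty cell.
    """
    return FACTS.get(member)


for member in PARENT:
    print(member, sum_rollup(member), data_member_value(member))
```

In this model the rolled-up figure for a parent mixes its own setting with those of its descendants, which is meaningless for thresholds. The data member value is the setting that was entered for that level.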