Investigating Deadlocks

Two different customers having deadlock issues. The first one is having deadlock issues happening during DML operations in an OLTP database. The second one – during ETL load of a data warehouse. First, what is a deadlock? Imagine that a friend and you go to a supermarket to buy beer and chips. You both go to the same aisle and you both attempt to pick the same pack of beer and the same bag of cheeps (in reality, two rows might be located in the same page so the locks might be more coarse). While you pick the pack of beer and reach out for the chips, your friend has already picked the same bag of chips and he’s reaching for your pack of beer. Neither of you want to let go. So, a deadlock is caused by two transactions attempting to lock two resources in the reverse order.

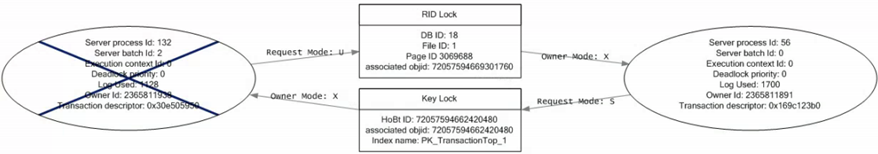

SQL Server has no way to resolve this situation but to terminate one of the transactions (usually the one that has done less work). It’s like the supermarket manager discovers the deadlock situation, figures out which customer entered second and sends him home empty handed. Terminating a customer is not good for business so I decided to use another metaphore. We can get more information about deadlocks by tracing the Deadlock Graph event in the SQL Profiler, such as to get this nice graph (I’ve noticed that when locks involved system processes, such as transactional replication, the graph is not so nice).

For more information about deadlocks and monitoring, read these two very informative blogs: How to Monitor Deadlocks in SQL Server and How to Track Down Deadlocks Using SQL Server 2005 Profiler. One thing that I want to add is that you can right-click the TextData column of the deadlock graph trace and save the payload as an XML file. Then, you can open the file in your favorite XML editor or Internet Explorer and see exactly which SQL statements are conflicting and which process was terminated.

After some investigation, it turned out that the deadlock issue for the first customer was caused by application transactions conflicting with triggers (recall that all operations in a trigger are executed under an implicit transaction). Interestingly, some of these trigger transactions were conflicting with a transactional replication that is replicating the tables from the OLTP server to a reporting database. The issue with the second customer is still under investigation but it looks like it’s caused by ETL processes trying to update a fact table at the same time possibly resulting in locking the same pages in reverse order. Here are some guidelines to avoid deadlocks:

- Revisit application transaction logic to make sure that tasks within transactions execute in the same order.

- Keep transactions short or don’t use transactions at all unless you absolutely must guarantee that the entire sequence of operations is undone if one operation fails. In many cases, it’s OK to let the first task succeed if a latter fails.

- Consider using NOLOCK to avoid locking when reading data. Try using the ROWLOCK hint for DML operations but watch for performance degradation when many rows are inserted. For parallel load into a partitioned table, change the table lock escalation ALTER TABLE … SET (LOCK_ESCALATION = AUTO). When loading a fact table, consider removing foreign keys on the large tables to avoid locking and speed up inserts

- Avoid triggers.

- When you inserted a batch of rows from different ETL packages into a fact table, carefully consider its clustered index. For example, if you choose to create the index on the DateKey column, all new rows will go into the end of the table, causing contention. One workaround could be to use a composite index, such as with two columns (LocationKey and DateKey) so inserts are spread out.