Prologika Newsletter Winter 2023

I’ve covered my first impression about Microsoft Fabric in a series of blogs starting with this one. In the recap, I said that certain features might benefit organizations that are already on Power BI Premium because all Fabric features are available in Power BI Premium plans. However, as they stand, features such as Lakehouse Copy to Table (no automated synchronization), ADF pipelines (no connectivity to on-prem data sources), Data Warehouse (missing important T-SQL features), Direct Lake (only available online), are half-baked, thus barring wide-spread Fabric adoption. This newsletter covers a good case for Fabric that I’ve recommended for a large insurer that has invested heavily in Microsoft Dynamics 365 and Power BI Premium. I’ll be in a better position to confirm after a POC with larger datasets so follow my blog for future updates.

I’ve covered my first impression about Microsoft Fabric in a series of blogs starting with this one. In the recap, I said that certain features might benefit organizations that are already on Power BI Premium because all Fabric features are available in Power BI Premium plans. However, as they stand, features such as Lakehouse Copy to Table (no automated synchronization), ADF pipelines (no connectivity to on-prem data sources), Data Warehouse (missing important T-SQL features), Direct Lake (only available online), are half-baked, thus barring wide-spread Fabric adoption. This newsletter covers a good case for Fabric that I’ve recommended for a large insurer that has invested heavily in Microsoft Dynamics 365 and Power BI Premium. I’ll be in a better position to confirm after a POC with larger datasets so follow my blog for future updates.

The Dynamics Integration Saga

Dynamics Online, aka Dynamics 365, epitomizes the customer’s struggle to export their data hosted in SaaS cloud offerings for analytics or other purposes. Since unfortunately Microsoft doesn’t provide direct access to the Dynamics native storage (Azure SQL Database), which often is the best solution, Dynamics has “compensated” throughout the years by provided the following options:

| Option | Pros | Cons |

| OData API | Original interface | Slow, no query folding, currently deprecated |

| Data Export Service | Export to Azure SQL Database | Deprecated |

| TDS endpoint | Real-time direct access to Dynamics Azure SQL Database | Not designed for massive extracts; slow |

| Dataverse API | Currently recommended by Microsoft | As far as I can tell, no REST operations (see below); questionable performance |

| Dataverse connector | Same as Dataverse API | Same as Dataverse API |

| Export to ADLS | Automatic synchronization | Perpetually in preview, files stored in proprietary CDM folder format |

| Fabric link | Automatic synchronization; delta tables; SQL joins | Requires Fabric licensing; up to 60 min data sync delay; TBD |

One of my clients, a large insurance company, has invested heavily in Dynamics 365. They have implemented various Power BI reports that would import millions of rows directly from common CRM entities, such as Account, Opportunity, as well as custom entities, using the OData API. The main struggle has always been that dataset refreshes take hours to complete. Because API calls don’t fold in Power Query, they apply REST operations to select a subset of columns, join tables, and filter data. They rely heavily on Power Query to shape the data which has worked reasonably well.

Dataverse Link to Fabric

Dataverse Link to Fabric looks promising in addressing some of the above pitfalls. Once you set it up, it extracts Dynamics data as Delta tables in a Fabric lakehouse. From there, you can use the SQL endpoint to join and filter tables.

After the initial synchronization, it might take up to 60 minutes to synchronize data changes in Dynamics so not really real-time data access but much better than the current state.

While working through the setup, keep the following in mind:

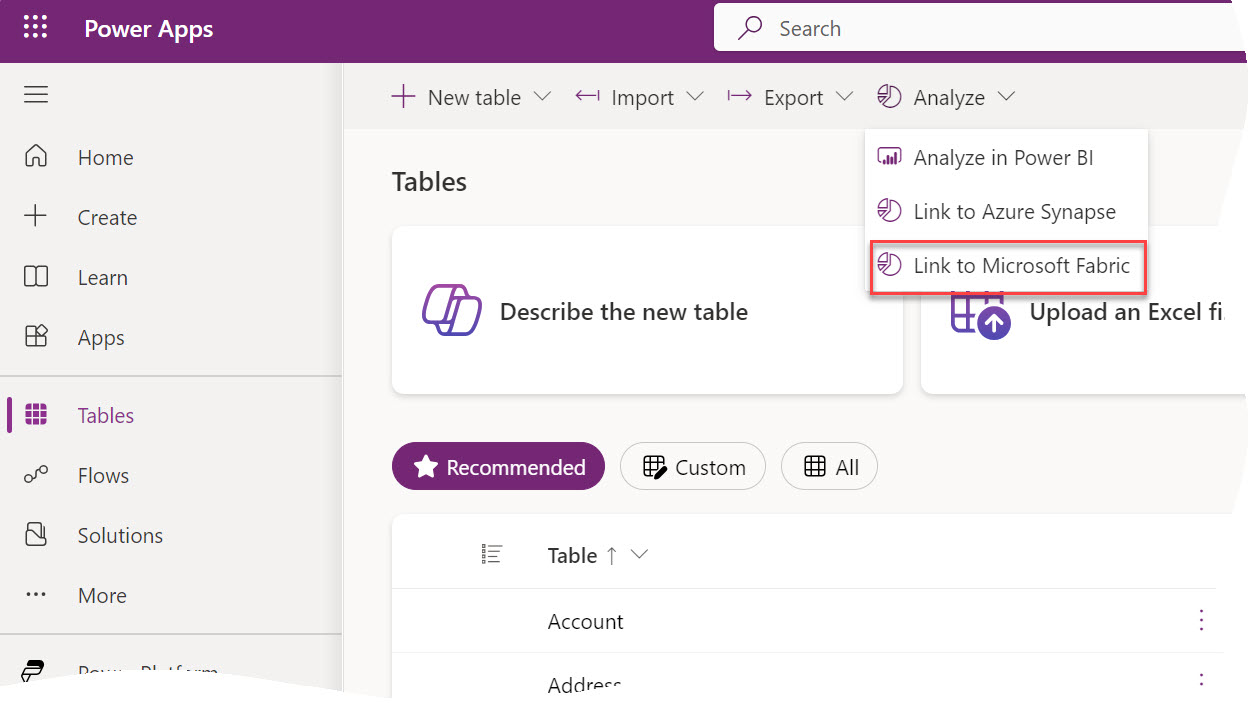

- The Link to Microsoft Fabric menu is found under the Analyze menu in the ribbon and not at the table level as the video shows. That’s because it exports all the tables.

- Referring to the comparison table between Fabric Link and Synapse Link, “No copy, no ETL direct integration with Microsoft Fabric” should be read as “No ETL” because the data is still copied. Further, “Data stays in Dataverse” should read as “Data is automatically copied to Fabric lakehouse”.

- As far as prerequisites, the most important one is “the workspace you choose to link with Dataverse must be assigned to a premium capacity in the same region as your Dataverse environment” with the emphasis on the “same region”. Not having them in the same region is a show stopper.

Cutting Cost

What if you are not on Power BI Premium and you are on a tight budget? Although I haven’t tested, this approach (suggested by Riccardo Perico) might work because Link to Fabric is available in all fabric capacities:

- Purchase a lower Fabric capacity, such as F2 or F4.

- Configure Dataverse Link to Fabric as usual.

- After the initial synchronization, pause and resume the capacity when not in use, such by using this approach.

- Import the data in a Power BI semantic model.

Dataverse Link to Fabric should queue the updates until the capacity is resumed.

Benefits

Again more testing is needed, but at this point I see the following advantages of using the Dataverse Link to Fabric:

- CRM data is staged in open file format (Delta Parquet tables) and available for any reporting needs without impacting Dynamics.

- No need to use APIs. Instead, you can use SQL to join and filter tables, such as to look up columns or filter data, which is much better than using the API syntax. This will also help you centralize column lookups in SQL views instead of doing this over and over for each report.

- Potentially much faster Power BI dataset refreshes

- No additional cost to Power BI Premium customers because the Fabric features are available in Power BI Premium.