Handling Data Quality and Data Enrichment

Some of the most difficult and tedious tasks in almost every BI project are handling data quality issues (aka exceptions) and data enrichment. Data quality issues typically originate from wrong data entries or violated business rules, such as misspelled or misclassified products. Data enrichment tasks may go beyond the design of the original data source, such as introducing new taxonomies for products or customers.

In a recent project, a client needed guidance on where to handle data quality and enrichment processes. Like cakes and ogres, a classic BI solution has layers. Starting upstream in the data pipeline and moving downstream, the layers are data sources, ODS (data staging and change data tracking), EDW (star schema), and semantic models.

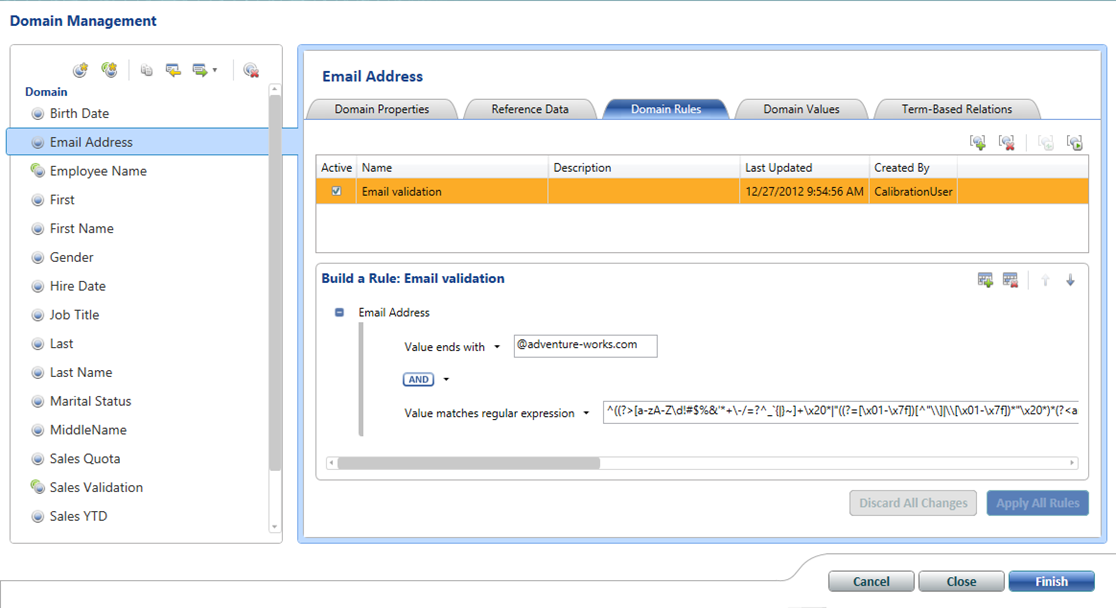

In general, unless absolutely necessary, I’m against master data management (MDM) systems because of their complexity and cost. Instead, I believe that most data quality and enrichment tasks can be addressed efficiently without formal MDM. I’m also a big believer in tackling these issues as far upstream as possible, ideally in the data source.

Consider the following guidelines:

- There are different types of exceptions and enrichment requirements, and they range in complexity and resolution domain. Each case must be triaged by the team to determine how best to handle it, traversing the layers of the data pipeline from upstream to downstream.

- As a best practice, exceptions and enrichment tasks should be dealt with as far upstream as possible, because the further downstream a correction is made, the fewer consumers can benefit from it and the more implementation effort might be required.

- Data Source – examples include wrong entries, such as an employee’s wrong job start date, or adding custom fields to implement categories required for standard hierarchies. The team should always start with the source system in an attempt to address data quality issues at the source.

- ODS – examples include corrections not possible in the upstream layers but necessary for other consumers besides EDW.

- EDW – examples include corrections applicable only to analytics, such as an IsAttendance flag that marks which attendance events should be evaluated.

- Semantic layer – examples include calculated columns for data enrichment that is best done in DAX, and additional fields the end user needs in a composite model.

- Shortcuts – When resolving the change upstream is time-prohibitive, it should be permissible to handle the change further downstream while waiting for changes to the upstream system. For example, if fixing an issue in the data source is rather involved, a temporary fix can be done in ODS to prototype the change and expedite it to consumers. However, the team should remove that fix once the source system is enhanced.

- When consumers prefer access to uncorrected data, a pair of fields could be introduced as far upstream as possible, such as:

  - EmployeeId – raw field

  - EmployeeIdCorrected – corrected field. Joins to the related dimension table will be done on this field.
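To make the raw/corrected field pair more concrete, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for a staging database. Only `EmployeeId` and `EmployeeIdCorrected` come from the guidelines above; the table names (`StageAttendance`, `DimEmployee`, `EmployeeIdFix`), the sample rows, and the idea of driving corrections from a small mapping table are assumptions made for illustration, not a prescribed design.

```python
import sqlite3

# In-memory SQLite database standing in for a staging layer (assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE DimEmployee (EmployeeId TEXT PRIMARY KEY, EmployeeName TEXT);

CREATE TABLE StageAttendance (
    EventId INTEGER PRIMARY KEY,
    EmployeeId TEXT,            -- raw field, kept exactly as received
    EmployeeIdCorrected TEXT    -- corrected field, used for joins
);

-- Hypothetical mapping table maintained by the team: raw value -> corrected value.
CREATE TABLE EmployeeIdFix (RawId TEXT PRIMARY KEY, CorrectedId TEXT);
""")

conn.executemany("INSERT INTO DimEmployee VALUES (?, ?)",
                 [("E100", "Ann"), ("E200", "Bob")])
# "E1OO" has the letter O typed instead of zeros (a made-up data entry error).
conn.executemany("INSERT INTO EmployeeIdFix VALUES (?, ?)",
                 [("E1OO", "E100")])
conn.executemany("INSERT INTO StageAttendance (EventId, EmployeeId) VALUES (?, ?)",
                 [(1, "E100"), (2, "E1OO"), (3, "E200")])

# Populate the corrected field: use the mapped value when one exists,
# otherwise fall back to the raw value. The raw field is never overwritten.
conn.execute("""
UPDATE StageAttendance
SET EmployeeIdCorrected = COALESCE(
    (SELECT CorrectedId FROM EmployeeIdFix
     WHERE RawId = StageAttendance.EmployeeId),
    EmployeeId)
""")

# Joins to the dimension table are done on the corrected field.
rows = conn.execute("""
SELECT a.EventId, a.EmployeeId, a.EmployeeIdCorrected, d.EmployeeName
FROM StageAttendance AS a
LEFT JOIN DimEmployee AS d ON d.EmployeeId = a.EmployeeIdCorrected
ORDER BY a.EventId
""").fetchall()

for row in rows:
    print(row)
```

Consumers who want the uncorrected value can still read `EmployeeId`, while everything that joins to the dimension uses `EmployeeIdCorrected`. Keeping the corrections in a separate mapping table is one possible choice; it keeps the fixes auditable and easy to retire once the source system is corrected.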