Using DQS for Externalizing Data Validation Rules

Developers spend plenty of time coding for exceptions. The customer SSN must be nine characters. The organization code must be four characters and constrained to specific values. The happy path is easy; coding for exceptions is tedious. Some exceptions are easy to catch, while others require more complicated logic than the happy path itself. And it gets more difficult if the rules change often or your BI system needs to support adding new rules.

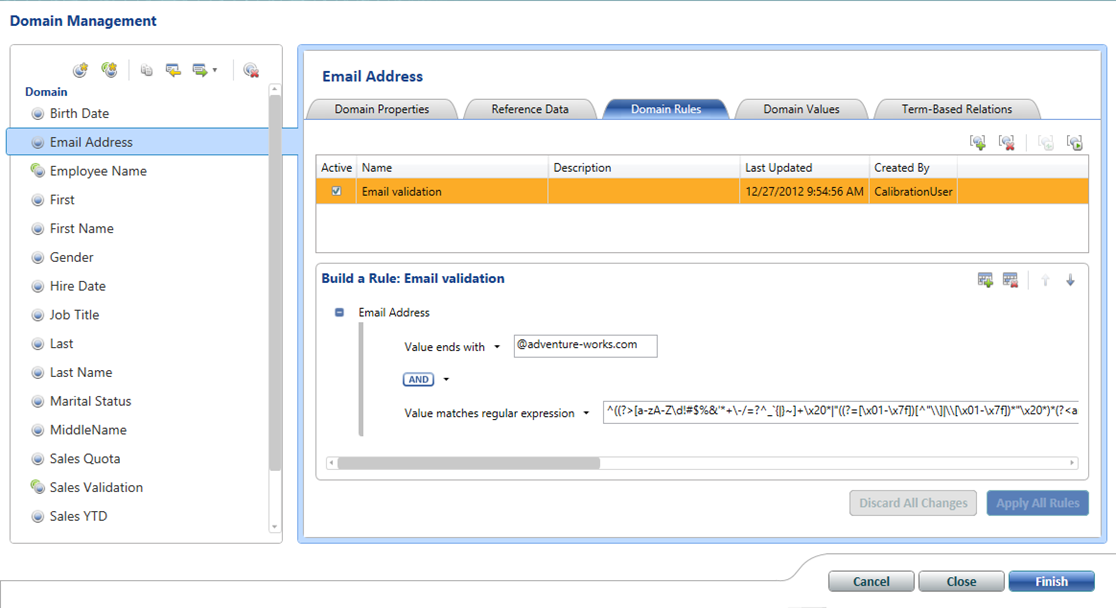

This is where Data Quality Services (DQS) can help. To me, the biggest strength of DQS is externalizing rules. Instead of hardcoding rules in SQL or ETL, you can externalize them and even offload rule management to business users. Using the Data Quality Client tool, a business user can define simple rules and corrections, such as requiring that the e-mail address match a specific regular expression. Then, the ETL developer can integrate the data flow with DQS to separate valid rows from invalid ones. Moreover, DQS will tell you exactly which rule was broken and give you a confidence factor for the match. This helps you avoid tedious coding and saves your company a lot of effort.
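To make the idea of externalized rules concrete, here is a minimal sketch in plain Python. It is not DQS and does not use any DQS interface; it only illustrates the pattern of keeping rules as data and letting a generic routine split rows and report which rule each invalid row broke. The field names (`ssn`, `org_code`, `email`), the allowed organization codes, and the e-mail pattern are all assumptions made up for the example.

```python
import re

# Hypothetical rule definitions kept as data rather than code. In DQS the
# equivalent would live in a knowledge base managed by business users.
RULES = [
    {"name": "SSN must be 9 characters", "field": "ssn",
     "pattern": r".{9}"},
    {"name": "Org code must be an allowed 4-character value", "field": "org_code",
     "pattern": r"(?:FINA|SALE|MKTG)"},
    {"name": "E-mail must look like an address", "field": "email",
     "pattern": r"[^@\s]+@[^@\s]+\.[^@\s]+"},
]


def broken_rules(row, rules=RULES):
    """Return the names of all rules that the row violates."""
    broken = []
    for rule in rules:
        value = row.get(rule["field"]) or ""
        # fullmatch requires the whole value to satisfy the pattern.
        if re.fullmatch(rule["pattern"], str(value)) is None:
            broken.append(rule["name"])
    return broken


def split_rows(rows, rules=RULES):
    """Separate rows into valid rows and (row, broken rule names) pairs."""
    valid, invalid = [], []
    for row in rows:
        broken = broken_rules(row, rules)
        if broken:
            invalid.append((row, broken))
        else:
            valid.append(row)
    return valid, invalid
```

Changing a rule here means editing the `RULES` list, not the validation logic, which is the same separation DQS offers, with the difference that DQS moves the rule definitions out of the developer's hands entirely.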

In its first release, DQS has left substantial room for improvement, which I hope SQL Server 2016 will address. The gaps include:

- I’m sure that there is a solid academic theory behind the “domains” concept, but requiring the user to create composite domains for simple cross-field validations might be too much to ask. A better approach might be to have a hidden composite domain consisting of all fields.

- The lookup lists, such as “Value Is In”, cannot be data-driven, requiring you to hardcode lookup values.

- More advanced rules are needed, such as IF-THEN, without requiring composite domains.

- The SSIS DQS task performs very slowly because it uses a single thread.

- Currently, DQS has no API, so automation is not possible.
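
For readers who want to picture what the data-driven lookup and IF-THEN items on this wish list would look like, here is a rough sketch in plain Python. It is not DQS functionality. The `country` field, the rule wording, and the idea of reading allowed codes from reference rows are assumptions for illustration only. Each check receives the whole row, which is roughly what a hidden composite domain over all fields would allow.

```python
def load_allowed_codes(lookup_rows):
    """Build the 'Value Is In' list from data, e.g. rows of a reference table."""
    return {row["org_code"] for row in lookup_rows}


def check_row(row, allowed_codes):
    """Return the names of the broken rules for one row."""
    broken = []

    # Data-driven lookup: the allowed values come from data, not from code.
    if row.get("org_code") not in allowed_codes:
        broken.append("org_code must be in the lookup list")

    # IF-THEN rule across two fields (hypothetical):
    # IF country is US THEN ssn must be 9 characters.
    if row.get("country") == "US" and len(row.get("ssn") or "") != 9:
        broken.append("US customers need a 9-character SSN")

    return broken
```

Because the check sees the entire row, adding another cross-field rule takes one more `if` block and no new grouping of fields.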