Where Is My DQS KB Gone?

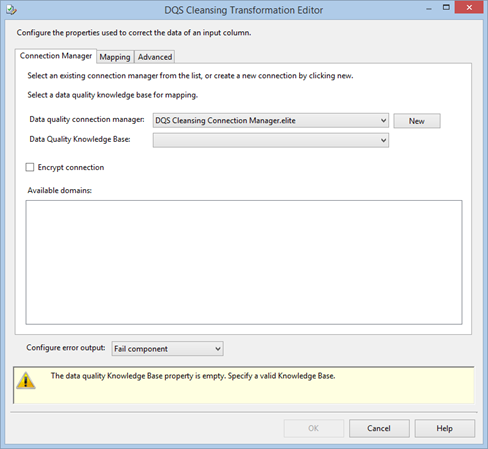

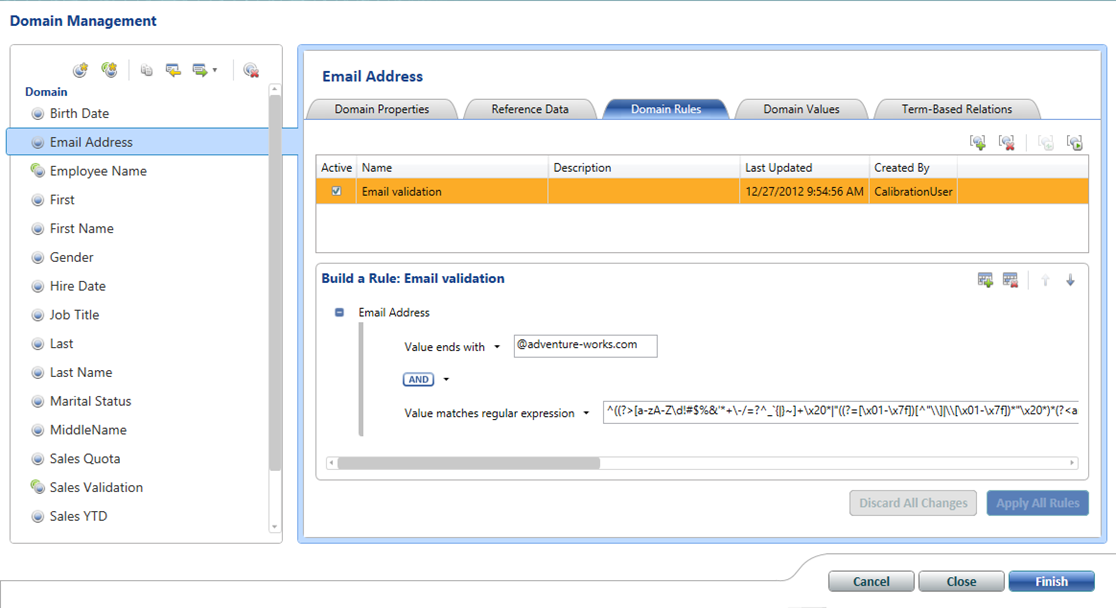

Scenario: You use the DQS Cleansing Transformation in an SSIS package to integrate data cleansing with DQS. Once you are done developing, you export the DQS KB and then create a new DQS KB by importing and publishing it on another server. Or you might have deleted and recreated the KB with the same name. When you open the DQS Cleansing Transformation Editor, you find that, although the KB name is the same, the reference to your DQS KB is gone and you get the warning message “The data quality Knowledge Base property is empty. Specify a valid Knowledge Base”. Naturally, you don’t want to remap the KB and recreate all the mappings, as this is a tedious process involving many steps, especially with many columns.

Solution: I’m not sure what design pattern was followed here, but SSIS identifies the KB by its identifier instead of by its name. To fix the issue without remapping, you need to find the new KB ID and update the package source by following these steps:

- On the target SQL Server that is integrated with DQS, connect to the DQS_MAIN database and run the following query, replacing the <YOUR KB NAME> token with the name of your KB:

SELECT * FROM [DQS_MAIN].[dbo].[A_KNOWLEDGEBASE] WHERE NAME = '<YOUR KB NAME>'

- In the query results, locate the ID column and copy the identifier.

- In SSDT, right-click on the package and then click View Code to open the package XML source.

- Find the KnowledgebaseName property and overwrite its value with the new identifier.

<property dataType="System.Int64" name="KnowledgebaseName" typeConverter="NOTBROWSABLE">1020033</property>

- Save the source and open the package in the designer.

Note that for the KB identifier to resolve successfully, you need not only to import the KB on the target server but also to publish it.
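If you have several packages to fix, the manual edit of the package source described in the steps above could be scripted. The following is only a minimal sketch, not a supported tool: it assumes the package XML contains the KnowledgebaseName property in the same form as the snippet above (with straight quotes and a numeric value), and the function name `replace_kb_id` is hypothetical. It works on the XML as plain text so that the rest of the package is left byte-for-byte untouched.

```python
import re

# Matches the KnowledgebaseName property element and captures
# (1) the opening tag, (2) the current numeric identifier, (3) the closing tag.
# Assumption: the property looks like the snippet shown in the steps above.
_KB_PROPERTY = re.compile(
    r'(<property\b[^>]*\bname="KnowledgebaseName"[^>]*>)(\d*)(</property>)'
)


def replace_kb_id(package_xml: str, new_kb_id: int) -> tuple[str, int]:
    """Set every KnowledgebaseName property value to new_kb_id.

    Returns the updated XML text and the number of properties changed,
    so the caller can detect a package that contains no such property.
    """
    return _KB_PROPERTY.subn(
        lambda m: f"{m.group(1)}{new_kb_id}{m.group(3)}",
        package_xml,
    )
```

To use it, read the .dtsx file into a string, call `replace_kb_id(text, <ID copied from the query results>)`, check that the returned count is at least 1, and write the text back. Keep a backup copy of the package first, and reopen it in the designer afterwards to confirm that the KB reference resolves.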

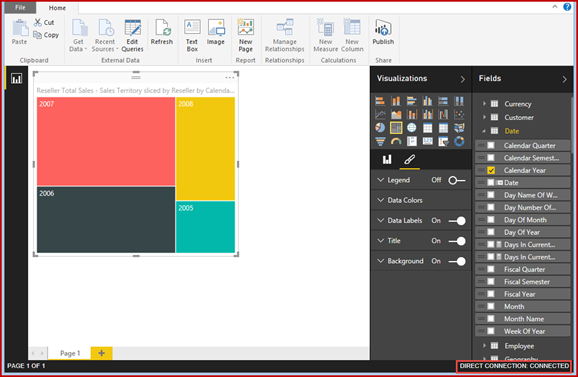

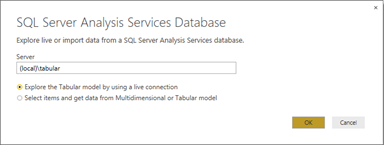

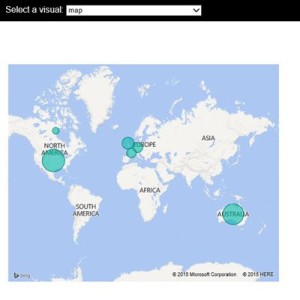

I think Microsoft’s decision to make Power BI open will be a paradigm shift for the BI industry. Besides the Push APIs, which are already published, Microsoft has indicated plans to make Power BI even more open, such as allowing developers to embed reports in custom web applications. Now, if only Power BI supported on-prem installation …
