The Best Self-Service BI Tools of 2015

I came across this interesting PC Magazine article that just came out comparing 10 popular self-service BI tools. And the winner is? Power BI, of course, rubbing shoulders with Tableau for the Editors' Choice award! The author, David Strom, did a great job reviewing the tools (this is not a trivial undertaking), but a few of the Power BI conclusions deserve clarification:

- Cons: “Cloud version has a subset of features found in Windows version” – The cloud version is intentionally simple so that business users can start analyzing data without any modeling.

- Sharing: “Microsoft relies on the shared Microsoft OneDrive at Microsoft cloud service (or what it calls a “content pack”) to personalize and share your dashboard and reports via unique URLs.” Power BI doesn’t rely on OneDrive for collaboration. Instead, it supports three ways to share content: simple dashboard sharing, workspaces, and content packs.

- Custom visuals: “You can get quickly up to speed by searching through an online visualizations gallery to find the particular presentation template you want to use to show your data. This is the reverse of what many BI tools such as Tableau Desktop ($999.00) at Tableau Software and Domo ($2,000.00) at Domo have you do, and it takes a bit of getting used to.” I'm not sure what this refers to. There are built-in visualizations, and getting started with them is no different from using other tools. But we also have custom visuals, which no other vendor has.

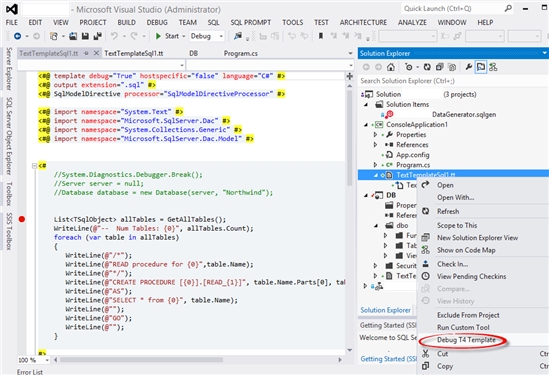

- Custom visuals: “A new section called “Developer Tools” lets you build custom visualizations using a Visual Basic-like scripting language that is documented in a GitHub project. While it is still in beta, it could be a very powerful way to add your own custom look to your dashboards.” The Dev Tools feature for implementing custom visuals outside Visual Studio is in preview, but the actual visualization framework is not. And developers use TypeScript (a superset of JavaScript), not Visual Basic.

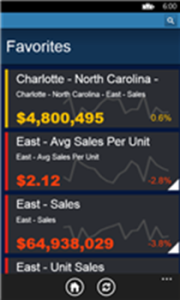

Speaking of reviews, here are some important Power BI characteristics that make it stand above the rest of the pack:

- Data engine and DAX – no other tool comes close to the Power BI in-memory engine, which allows data analysts to build data models that are on a par with professional models.

- Hybrid architecture that lets you connect your visualizations to on-premises data sources.

- Self-service ETL with Power Query – as far as I know, no other tool has such capabilities.

- Open architecture that allows developers to extend Power BI's capabilities.

- Great value proposition that follows the freemium model – Power BI Desktop is free, Power BI Mobile is free, and the Power BI service is mostly free.