Open Data Protocol (OData), which grew out of ADO.NET Data Services, is quickly gaining popularity as a web protocol for querying and updating data. A few Microsoft products already expose their functionality as OData services, including SharePoint 2010, SQL Azure, and Reporting Services (see my blog about reports as data feeds). Chances are, then, that you will need to report off OData services. As it stands, Reporting Services doesn’t include native support for OData, but the XML Data Provider, which has been around since version 2005, may get the job done.

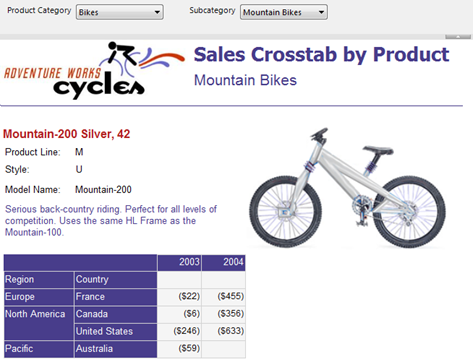

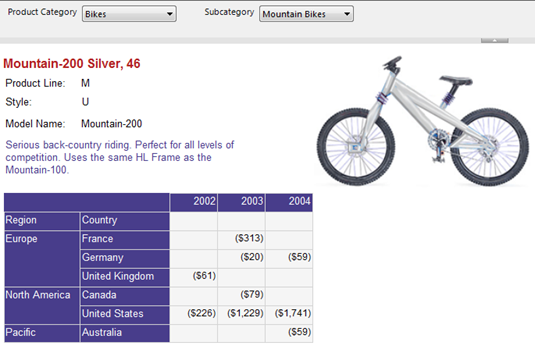

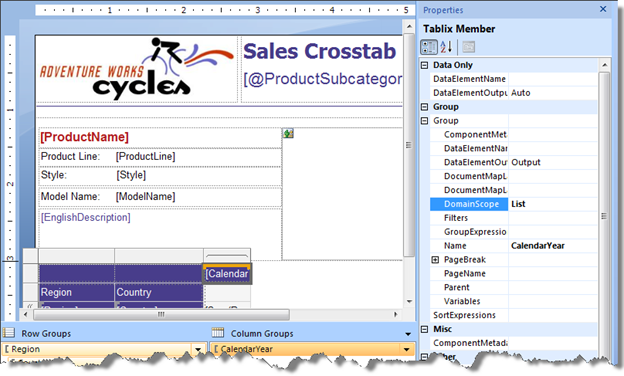

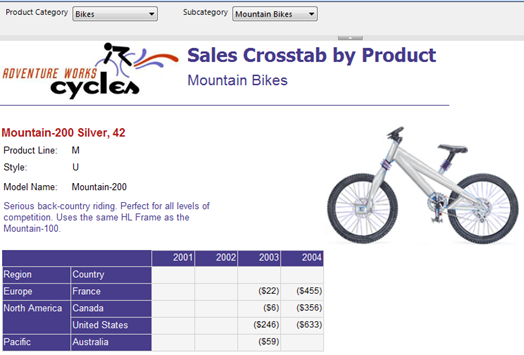

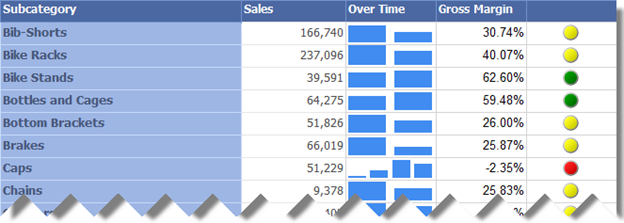

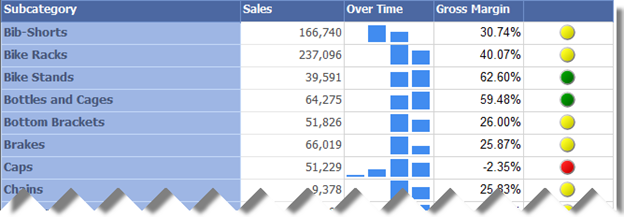

The OData report demonstrates how you can use the XML Data Provider to query OData services. It has two datasets. The Customers dataset retrieves data from the sample Northwind OData service. The CompanySales dataset queries the Adventure Works Company Sales report as a data feed.

Northwind OData Service

The Customers dataset shows a list of Northwind customers. I implemented it as follows.

- Set up the Northwind data source that uses the XML Data Provider and whose connection string points to the Northwind Customers service:

  http://services.odata.org/Northwind/Northwind.svc/Customers

  As you’ve probably guessed, you need to set up a data source for each OData service you want to query. If you open the above link in IE with the feed view turned off (Internet Options, Content tab, Settings, uncheck Turn On Feed Reading View), you will see the output of the service:

      <?xml version="1.0" encoding="utf-8" standalone="yes" ?>
      <feed xml:base="http://services.odata.org/Northwind/Northwind.svc/"
            xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices"
            xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
            xmlns="http://www.w3.org/2005/Atom">
        <title type="text">Customers</title>
        <id>http://services.odata.org/Northwind/Northwind.svc/Customers</id>
        <updated>2010-04-25T15:07:07Z</updated>
        <link rel="self" title="Customers" href="Customers" />
        <entry>
          <id>http://services.odata.org/Northwind/Northwind.svc/Customers('ALFKI')</id>
          <title type="text" />
          <updated>2010-04-25T15:07:07Z</updated>
          <author>
            <name />
          </author>
          <link rel="edit" title="Customer" href="Customers('ALFKI')" />
          <link rel="http://schemas.microsoft.com/ado/2007/08/dataservices/related/Orders" type="application/atom+xml;type=feed" title="Orders" href="Customers('ALFKI')/Orders" />
          <link rel="http://schemas.microsoft.com/ado/2007/08/dataservices/related/CustomerDemographics" type="application/atom+xml;type=feed" title="CustomerDemographics" href="Customers('ALFKI')/CustomerDemographics" />
          <category term="NorthwindModel.Customer" scheme="http://schemas.microsoft.com/ado/2007/08/dataservices/scheme" />
          <content type="application/xml">
            <m:properties>
              <d:CustomerID>ALFKI</d:CustomerID>
              <d:CompanyName>Alfreds Futterkiste</d:CompanyName>
              <d:ContactName>Maria Anders</d:ContactName>
              <d:ContactTitle>Sales Representative</d:ContactTitle>
              <d:Address>Obere Str. 57</d:Address>
              <d:City>Berlin</d:City>
              <d:Region m:null="true" />
              <d:PostalCode>12209</d:PostalCode>
              <d:Country>Germany</d:Country>
              <d:Phone>030-0074321</d:Phone>
              <d:Fax>030-0076545</d:Fax>
            </m:properties>
          </content>

- Set up a dataset query:

      <Query>
        <ElementPath IgnoreNamespaces="true">
          feed{}/entry{}/content{}/properties
        </ElementPath>
      </Query>

The IgnoreNamespaces attribute instructs the provider to ignore the XML namespaces, so the path can reference elements without their prefixes. The ElementPath text specifies the path to the element whose child nodes you want to display as fields. In this case, that element is properties, which holds the actual entity data (CustomerID, CompanyName, and so on). The empty curly braces after feed, entry, and content tell the provider not to return any fields from those nodes.
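To make the effect of the ElementPath more concrete, here is a rough Python sketch of what the query above asks for. It is not how the XML Data Provider is implemented; it only mimics the idea of walking feed/entry/content/properties while ignoring namespaces and returning one row per properties element. The `SAMPLE_FEED` string is a trimmed copy of the Northwind output, and the helper names (`local_name`, `children_named`, `properties_rows`) are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the Northwind feed shown earlier (assumption: one entry is enough to illustrate).
SAMPLE_FEED = """<feed xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices"
      xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
      xmlns="http://www.w3.org/2005/Atom">
  <title type="text">Customers</title>
  <entry>
    <id>http://services.odata.org/Northwind/Northwind.svc/Customers('ALFKI')</id>
    <content type="application/xml">
      <m:properties>
        <d:CustomerID>ALFKI</d:CustomerID>
        <d:CompanyName>Alfreds Futterkiste</d:CompanyName>
        <d:Region m:null="true" />
      </m:properties>
    </content>
  </entry>
</feed>"""


def local_name(tag):
    """Drop the '{namespace}' prefix ElementTree adds, similar in spirit to IgnoreNamespaces."""
    return tag.rsplit("}", 1)[-1]


def children_named(element, name):
    """Return the direct children of element whose local name matches."""
    return [child for child in element if local_name(child.tag) == name]


def properties_rows(feed_xml):
    """Follow feed/entry/content/properties and return one dict per properties element.

    Nothing is collected from feed, entry, or content, which is the role
    the empty curly braces play in the ElementPath.
    """
    root = ET.fromstring(feed_xml)
    if local_name(root.tag) != "feed":
        return []
    rows = []
    for entry in children_named(root, "entry"):
        for content in children_named(entry, "content"):
            for props in children_named(content, "properties"):
                rows.append({local_name(field.tag): field.text for field in props})
    return rows


rows = properties_rows(SAMPLE_FEED)
print(rows)
# [{'CustomerID': 'ALFKI', 'CompanyName': 'Alfreds Futterkiste', 'Region': None}]
```

Each dictionary key corresponds to a dataset field, and an empty element such as Region comes back with no value.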

CompanySales

Starting with R2, reports are available as data feeds, which gives you a quick and easy way to display data from one report in another. In a previous blog post, I provided more details about the query syntax. In the case of Company Sales, the data source connection string is as follows:

http://localhost/ReportServerR2?/Adventure Works Sample Reports/Company Sales 2008&ShowDescription=False&StartDate=01/01/2002 00:00:00&EndDate=12/31/2003 00:00:00&rs:ParameterLanguage=&rs:Command=Render&rs:Format=ATOM&rc:DataFeed=xAx0x2xCx0xMx0
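If you need to produce such connection strings for several reports, the URL can be assembled from its parts. The Python sketch below is only an illustration: `report_feed_url` is a hypothetical helper, and percent-encoding the spaces is my assumption (the connection string above uses literal spaces, and I haven't verified which form the XML Data Provider prefers). The rc:DataFeed value is copied as-is from the report's generated feed.

```python
from urllib.parse import quote


def report_feed_url(server_url, report_path, report_params, data_feed):
    """Build a report-as-data-feed URL (hypothetical helper, not part of any SSRS API).

    Report parameters come first, followed by the rs:/rc: settings,
    in the same order as the connection string in the post.
    """
    pairs = list(report_params.items()) + [
        ("rs:ParameterLanguage", ""),
        ("rs:Command", "Render"),
        ("rs:Format", "ATOM"),
        ("rc:DataFeed", data_feed),
    ]
    # Assumption: spaces are percent-encoded; '/' and ':' are left readable.
    query = "&".join(f"{name}={quote(str(value), safe='/:')}" for name, value in pairs)
    return f"{server_url}?{quote(report_path, safe='/')}&{query}"


url = report_feed_url(
    "http://localhost/ReportServerR2",
    "/Adventure Works Sample Reports/Company Sales 2008",
    {
        "ShowDescription": "False",
        "StartDate": "01/01/2002 00:00:00",
        "EndDate": "12/31/2003 00:00:00",
    },
    "xAx0x2xCx0xMx0",
)
print(url)
```

Decoding the result with `urllib.parse.unquote` gives back the connection string shown above.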

The dataset query is the same because all OData services return data in the same format.

Moving forward, it would be nice if a future release included a native OData provider that handles parameters and supports methods, as the XML Data Provider does for SOAP web methods, so you don’t have to set up a data source for each OData service.