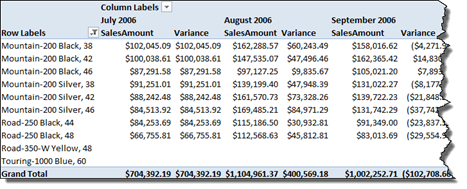

Relationships in analytical models (Multidimensional and Tabular) are both a blessing and a curse. Once defined by the modeler, relationships power “slicing and dicing” data. The analytical tool doesn’t have to worry about and include the relationships in the query because they are defined in the model and the server “knows” how to use them to aggregate the data. On the other hand, as they stand, all analytical model flavors (Multidimensional, Tabular, and PowerPivot) have inherent limitations. The following table compares relationship limitations between Multidimensional and Tabular (PowerPivot).

| Multidimensional | Tabular and PowerPivot |

| Many-to-Many | Supported | Not supported |

| Parent-child | Supported | Requires flattening the hierarchy |

| Role-playing | Supported | Not supported |

| Multi-grain | Supported | Not supported |

| Fact-to-fact | Not supported | Supported but rarely useful |

Let’s discuss these limitations in more details.

Many-to-Many

The classic example of a many-to-many relationship is a joint bank account that is owned by multiple customers. Many-to-many relationships are natively supported in Multidimensional and the server produces correct results when aggregating the data. Tabular and PowerPivot don’t support many-to-many out of the box. When the relationship One-To-Many flow is reversed through a bridge table, Tabular will let you continue but produce wrong results. In PowerPivot, this limitation manifests with the “Relationships might be needed” warning in the PowerPivot Field List. The workaround is to introduce DAX explicit measures for each column that requires aggregation using the formula =CALCULATE (SUM (FactTable[Column] ), BridgeTable). On the upside, due to the Tabular memory-resident nature, expect M2M over a large fact table to perform much better in comparison with Multidimensional.

Parent-Child

A parent-child relationship defines a recursive join between two columns, such as an employee and a manager. This relationship type is supported natively in Multidimensional but requires flattening the hierarchy using DAX calculated columns for each level in the hierarchy. Flattening the hierarchy is accomplished by using DAX PATH-related functions.

Role-playing

A role-playing relationship allows a dimension (lookup) table to be joined multiple times to a fact table, such as to join a Date dimension multiple times to a Sales fact table in order to support aggregating the fact data by OrderDate, ShipDate, and DueDate. Multidimensional supports role-playing relationships natively. Tabular doesn’t and flags only one of the relationships as active. The first (and recommended for most scenarios) workaround is simply to reimport the Date table as many times as needed. This gives you the flexibility to control the schema and data between the Date table instances, e.g. the ShipDate table includes only valid ship dates, at the expense of storage and increased model complexity. The second more complicated workaround is to create calculated measures, such as ShipAmount, DueAmount, etc., that use the DAX USERELATIONSHIP function to traverse the inactive relationships. This approach presents maintenance challenges because the chances are that the fact table will have many columns and you’ll have to create role-playing variants for each measure that needs to be aggregated.

Multi-grain

A multi-grain relationship exists when a dimension joins a fact table at a higher level than the dimension key. For example, you might have an Organization table that goes all the way down to business unit. However, an Expenses fact table might record expenses at a higher level in the organizational hierarchy, such as Division. Consequently, the modeler needs to set up a relationship from the Expenses fact table to the Division column as opposed to the Business Unit column (dimension key). Multidimensional supports this scenario out of the box. Tabular doesn’t because the joined column on the One side of the relationship must be the dimension key which uniquely identifies each row in the dimension table. The workaround is to break the Organization dimension table into two tables: Organization and OrganizationDivision in order to join the OrganizationDivision table to the Expense fact table on the Division column which is now the key column. Then, you need to join the OrganizationDivision table to the Organization table for consolidated reporting purposes, such as to see Sales and Expenses side by side.

Fact-to-fact

Suppose that your model has two fact tables: OrderHeaders and OrderLineItems. You would like to have a transactional report that shows the line items for each order. Let’s say that that the OrderHeaders fact table has an OrderID column and the OrderLineItems have a matching OrderID column. On a first thought, you might attempt to solve the problem by creating a relationship between the OrderHeaders and OrderLineItems tables. However, Multidimensional doesn’t support fact-to-fact relationships. That’s because the classic OLAP model insists on having relationships between dimensions and fact tables only. To solve this predicament in Multidimensional you need a PO Order dimension that joins both OrderHeaders and OrderLineItems tables. However, the chances are that you might end up with another complication if you take this route. This might not be applicable to the order header-line items scenario but what if the rows in the two fact tables have different dates. If a Date dimension joins them and you attempt to slice by date, the business question will be “Show me orders and order line items that have matching dates”. This might or might not be correct depending on your situation. So, this is where you’ll find that although very useful, relationship in analytical models might not be that flexible for ad hoc analysis.

Thanks to its more relaxed schema requirements, Tabular doesn’t care about the table type (dimension or fact) and it will let you set up a relationship between OrderHeaders and OrderLineItems as long as they comply to the One-To-Many rule . However, you’ll face another limitation that Tabular doesn’t support closed-loop relationships. More than likely, the OrderHeaders and OrderLineItems will have relationships to other lookup tables already, such as Account, Organization, etc. Once you create a fact-to-fact relationship, you’ll close the relationship loop. Tabular and PowerPivot will detect this and automatically deactivate the fact-to-fact relationship (its line will be dotted in the diagram view). Consequently, you must resort to the Multidimensional workaround to create an OrderHeader lookup table. Another workaround is to merge the OrderHeaders and OrderLineItems tables into a single table. Or, create a model that has only the OrderHeaders and OrderLineItems tables so you can create the fact-to-fact relationship.

Relationships are a challenging concept to grasp. As a modeler, you need to understand their limitations and workarounds while hoping that a future version of Analysis Services will relax or remove some of the existing constraints.