Are You Modern Yet?

If you’re a BI architect responsible for designing Azure-based cloud BI solutions and you’re not following James Serra’s blog, you’re missing a lot. Previously an independent consultant and MVP, James now works as a Big Data/Data Warehouse Evangelist at Microsoft. I couldn’t agree more with his latest post, “Should I load structured data into my data lake?”

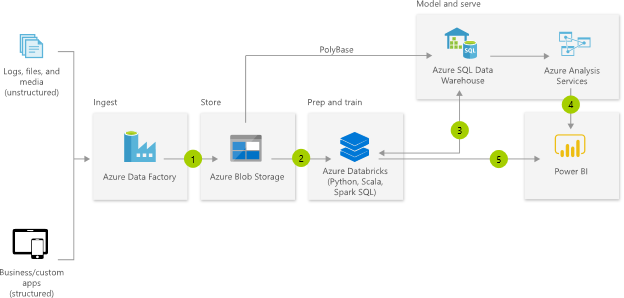

We’ve heard a lot lately about what the “modern” data warehouse architecture should look like from Microsoft and other vendors. Here is the diagram taken from, you guessed it, the Microsoft Modern Data Warehouse page.

The problem is that many organizations adopt this diagram verbatim, whether or not it makes sense for them. For example, a client exports data from on-premises relational databases to text files in Azure Data Lake, only to load it later into a cloud data warehouse. When I observed that this is redundant and pointless, they answered that I shouldn’t deemphasize the “whole Big Data” architecture, in case their users one day decide to do machine learning. When I observed that, should this moment arrive, data scientists would benefit more if the data were readily accessible in a relational database, they said that this contradicted what Microsoft recommended.

Of course, this “modern” architecture makes a lot of sense to the vendor (it’s a nice and perpetual revenue stream) or the consulting partner (the more stuff, the higher the bill), but more than likely it is overkill for you. Here are the most common mistakes I see organizations make on their BI journey to the cloud:

- Excessive data movement – The more you move the data, the more problems you create. You know it and I know it: data quality issues, latency, maintenance, endless troubleshooting, and so on. In another case, I saw a Cosmos DB sneaked in between, just in case there was too much structure in the source data.

- Architecture “gold plating” – A classic example, which the diagram perpetuates, is Azure SQL Data Warehouse. If you have a few million rows of data in your data center, would you buy Teradata? Of course you wouldn’t! So why would you go for an MPP platform just because you can afford the monthly premium for Azure SQL Data Warehouse? Not to mention that, more than likely, you’d have to redo your entire ETL if you are migrating from on-premises, as there is still no T-SQL parity. I wouldn’t even consider Azure SQL Data Warehouse for workloads smaller than 1 TB, and maybe not even then, because a semantic model will probably do the heavy lifting. And on ETL, where Microsoft recommends ADF, read my thoughts on it here.

- Migrating to the cloud to save cost – No vendor would let its cloud (or whatever) alternative cannibalize another offering. For me, your primary goal for migrating to the cloud should be not cost savings but reliability and convenience. Try to get your IT department to promise an SLA, or to promise anything for that matter. Or to provision a VM in minutes. On cost alone, more than likely you’ll spend more by moving to the cloud, especially if you go PaaS. For example, a client found that their bill went up astronomically because each BI PaaS offering (Database Engine, Analysis Services, ETL, Power BI) has its own pricing, whereas a single SQL Server EE license covered all of these in their data center. The “modern” architecture above could easily run $50,000/mo, which is what a perpetual SQL Server EE license will cost you, except that you pay for the license once and use it for years.
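
If you want to sanity-check the cost argument for your own case, a back-of-the-envelope comparison is easy to script. The Python sketch below is only an illustration: the $50,000/mo total comes from the estimate above, but the per-service split, the on-premises license price, and the on-premises upkeep are made-up placeholders (not real Microsoft pricing), and `CostModel` and `break_even_month` are names I invented for this example. Plug in your own quotes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostModel:
    upfront: float  # one-time cost, e.g. a perpetual license
    monthly: float  # recurring cost, e.g. a PaaS bill or on-prem upkeep

    def cumulative(self, months: int) -> float:
        """Total spend after the given number of months."""
        return self.upfront + self.monthly * months


def break_even_month(cloud: CostModel, on_prem: CostModel,
                     horizon: int = 120) -> Optional[int]:
    """First month in which cumulative cloud spend exceeds on-prem spend.

    Returns None if that never happens within the horizon.
    """
    for month in range(1, horizon + 1):
        if cloud.cumulative(month) > on_prem.cumulative(month):
            return month
    return None


# HYPOTHETICAL per-service split; only the 50,000/mo total is from the post.
paas_services = {
    "database_engine": 20_000,
    "analysis_services": 15_000,
    "etl": 10_000,
    "power_bi": 5_000,
}

cloud = CostModel(upfront=0, monthly=sum(paas_services.values()))
# HYPOTHETICAL on-prem figures: one-time license plus monthly upkeep.
on_prem = CostModel(upfront=200_000, monthly=10_000)

print("Cloud overtakes on-prem in month:", break_even_month(cloud, on_prem))
```

The point of keeping each PaaS service as its own line item is that this is exactly where the client's bill grew: every service is priced separately, while the on-premises side is dominated by a single up-front number.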

A famous writer was once asked how he knows when a manuscript is done, and he said: when there is nothing more to take out. This principle should apply to BI architectures too. What can you take out and still meet the business requirements without overcomplicating things? It does cost a lot to be “modern”.