5 Storage Gotchas for Power BI Dataflows

Over the past few years, the BI industry has come up with new file formats, such as Parquet, ORC, and Avro, which are widely used today. To facilitate its vision for cross-industry data integration, Microsoft introduced a few years ago the Common Data Model (CDM) and CDM Folders. Power BI dataflows output CSV files to CDM folders and each table is saved in its own folder. You can bring your own data lake to directly access these files. If do so, you’ll find the following folder structure:

Although accessing the dataflow files might open all sorts of data integration scenarios, here are some things to watch for concerning the dataflow output:

- If you plan to migrate from other self-service ETL tools, such as Alteryx, note that a Power BI dataflow can output only to a CDM folder and export the data as CSV.

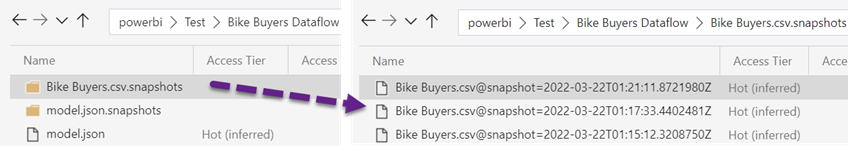

- Each time the dataflow refreshes, a new snapshot file is generated and added to the <table>.csv.snapshots folder. Currently, dataflows don’t delete previous snapshots and there is no retention policy. You could consider this a feature that lets you traverse the dataflow run history, but you might very quickly end up with lots of files.

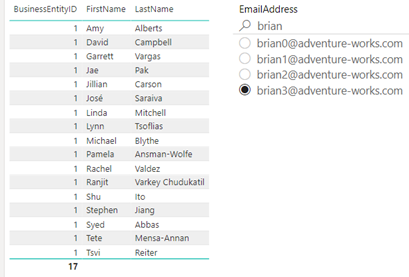

- Working with the snapshot files is cumbersome. For example, if you plan to load the data directly from the CSV files, you’d typically want to access the latest data and you’d want the file name to stay the same. However, as you can see in the screenshot, the file name includes the timestamp. So, if the tool doesn’t have a CDM connector, it must sort the files in the folder by that timestamp and load the most recent one.

- The metadata (column names and data types) is stored in the model.json file. Continuing the previous example, you won’t get the column headers and types if you just load the snapshot file.

- Very few tools today support CDM folders. To support them, the tool must first query the model.json file to determine the location of the latest snapshot. The tool must then apply the data types from model.json. The Azure Data Lake Storage Gen2 connector in Power BI supports them, but it’s been in a perpetual Beta. Azure Data Factory supports CDM, but it requires mapping data flows, which I typically try to avoid. Microsoft has a Databricks package that understands CDM. I see that Informatica has put up a connector. This is a timid response from the industry considering Microsoft’s ambitious vision. Even Microsoft’s own Synapse Serverless doesn’t support them yet. Why didn’t Microsoft decide to use modern and established file formats, such as Parquet, that can save both the metadata and data in the same file? It’s clear that Microsoft opted for the lowest common denominator that every tool supports, which is CSV. But because CSV files don’t include the metadata, Microsoft had to find a way to provide it.
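To make the last two points concrete, here is a minimal Python sketch of what a tool without a CDM connector has to do: look up the table in model.json, read the snapshot location from its partitions, and then apply the column names and data types to the headerless CSV. It assumes the commonly documented model.json layout (`entities`, each with `attributes` carrying `name` and `dataType`, and `partitions` carrying `location`). The table name, columns, and location in `SAMPLE_MODEL` are hypothetical, and downloading the file from the lake is left out.

```python
import csv
import io

# Converters for a few model.json dataType values (assumed names);
# any other type is left as a plain string.
CONVERTERS = {
    "int64": int,
    "double": float,
    "boolean": lambda s: s.strip().lower() == "true",
}

# Hypothetical, trimmed-down model.json content for illustration only.
SAMPLE_MODEL = {
    "name": "MyDataflow",
    "entities": [
        {
            "name": "Sales",
            "attributes": [
                {"name": "OrderId", "dataType": "int64"},
                {"name": "Amount", "dataType": "double"},
                {"name": "Customer", "dataType": "string"},
            ],
            "partitions": [
                {"name": "Part001", "location": "<lake-url>/Sales/Sales.csv.snapshots/<snapshot-file>"},
            ],
        }
    ],
}


def find_entity(model, entity_name):
    """Return the entity (table) entry with the given name."""
    for entity in model["entities"]:
        if entity["name"] == entity_name:
            return entity
    raise KeyError(entity_name)


def entity_schema(model, entity_name):
    """Return [(column_name, data_type), ...] in column order."""
    entity = find_entity(model, entity_name)
    return [(a["name"], a["dataType"]) for a in entity["attributes"]]


def partition_locations(model, entity_name):
    """Return the snapshot file locations recorded for the entity."""
    entity = find_entity(model, entity_name)
    return [p["location"] for p in entity.get("partitions", [])]


def read_rows(csv_text, schema):
    """Parse headerless CSV text into typed dicts using the schema."""
    rows = []
    for record in csv.reader(io.StringIO(csv_text)):
        if not record:
            continue  # skip blank lines
        row = {}
        for (name, data_type), raw in zip(schema, record):
            convert = CONVERTERS.get(data_type, str)
            row[name] = None if raw == "" else convert(raw)
        rows.append(row)
    return rows
```

In practice you would load model.json with `json.load`, fetch each location returned by `partition_locations`, and pass the downloaded text to `read_rows`. A real loader would also need to handle date and decimal types, which this sketch leaves as strings.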

As a workaround for these limitations, consider implementing Power BI datasets that wrap the dataflows using the Dataflow connector. If you use Power BI Premium or PPU, enable DirectQuery in the enhanced compute engine, so that you don’t have to import the data (the only data connectivity supported by the ADLS connector is import) and you don’t have to refresh both the dataset and dataflow. Looking forward, I’d like to see dataflows support output settings, such as a retention policy and an immutable file name for the latest snapshot. Dataflows should also add an output connector to define where the data should be sent, such as Parquet files that store both the schema and the data in the same file, or a relational database. I’d also like to see Synapse Serverless extended to support CDM folders.
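Until dataflows offer retention settings, you could approximate them yourself. The sketch below takes the file names listed from a snapshots folder, picks the latest one, and returns the older ones that fall outside a retention window. It assumes the timestamp embedded in the file name sorts chronologically as plain text. The file names in the usage example are hypothetical, and the function only decides what to prune; it doesn’t delete anything.

```python
def split_snapshots(file_names, keep=3):
    """Return (latest, to_prune) for a list of snapshot file names.

    Assumes the names differ only by an embedded timestamp that
    sorts chronologically as plain text.
    """
    if keep < 1:
        raise ValueError("keep must be at least 1")
    ordered = sorted(file_names)
    if not ordered:
        return None, []
    # Everything except the newest `keep` files is a pruning candidate.
    return ordered[-1], ordered[:-keep]


# Hypothetical file names for illustration only.
names = [
    "Sales.csv@snapshot=2021-03-02T08-00-00Z",
    "Sales.csv@snapshot=2021-03-01T08-00-00Z",
    "Sales.csv@snapshot=2021-03-04T08-00-00Z",
    "Sales.csv@snapshot=2021-03-03T08-00-00Z",
]
latest, to_prune = split_snapshots(names, keep=2)
```

Because `keep` must be at least 1, the newest snapshot is never a pruning candidate. Before deleting anything, it would still be worth checking that model.json doesn’t reference any of the files marked for pruning.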

In summary, yes, you can directly access the dataflow raw files in your own lake, but as it stands, the CDM folder implementation limits your data integration options.