Atlanta MS BI and Power BI Group Meeting on January 9th (Integrating Azure Synapse Analytics and Power BI)

The Atlanta MS BI and Power BI Group is resuming in-person meetings! Please join us for the next meeting on Monday, January 9th, at 6:30 PM ET. Elayne Jones (Data Engineer at 3Cloud) will show you how to integrate Synapse with Power BI. For more details and to sign up, visit our group page.

WE ARE RESUMING IN-PERSON MEETINGS AT THE MICROSOFT OFFICE IN ALPHARETTA. WE STRONGLY ENCOURAGE YOU TO ATTEND THE EVENT IN PERSON FOR THE BEST EXPERIENCE. PLEASE NOTE THAT GUESTS ENTERING MICROSOFT BUILDINGS IN THE U.S. MUST PROVIDE PROOF OF VACCINATION OR SELF-ATTEST WITH HEALTHCHECK (HTTPS://AKA.MS/HEALTHCHECK). ALTERNATIVELY, YOU CAN JOIN OUR MEETINGS ONLINE VIA MS TEAMS. WHEN POSSIBLE, WE WILL RECORD THE MEETINGS AND MAKE THE RECORDINGS AVAILABLE AT HTTPS://BIT.LY/ATLANTABIRECS. PLEASE RSVP ONLY IF YOU ARE COMING TO OUR IN-PERSON MEETING.

Presentation: Integrating Azure Synapse Analytics and Power BI

Date: January 9th

Time: 6:30 – 8:30 PM ET

Place: Onsite and online

ONSITE

Microsoft Office (Alpharetta)

8000 Avalon Boulevard Suite 900

Alpharetta, GA 30009

ONLINE

Click here to join the meeting

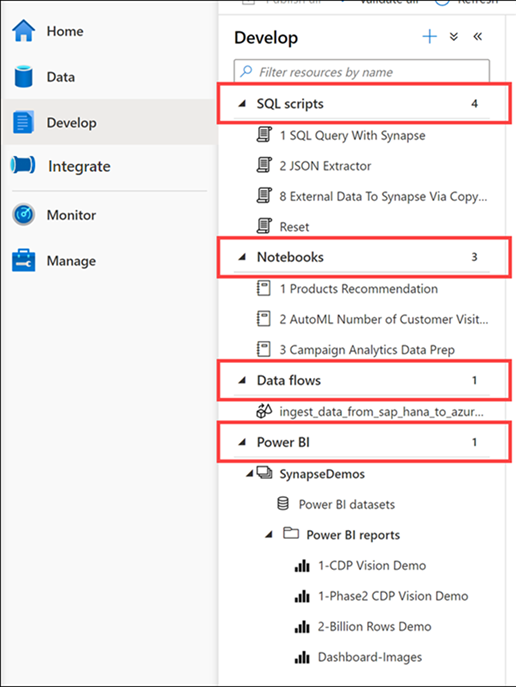

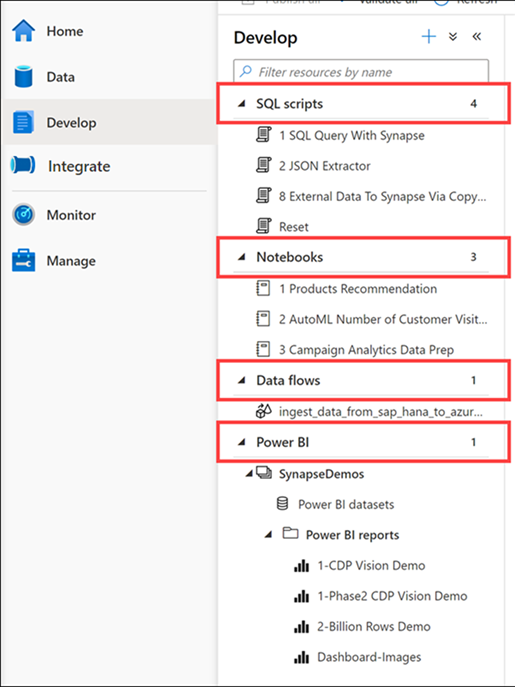

Overview: Combining the forces of Azure Synapse Analytics and Microsoft Power BI allows you to weave together the full lifecycle of data ingestion, transformation, and visualization. Synapse encompasses the traditional processes of data warehousing, cleansing, and visualizing all within Synapse Studio, fostering unity among teams and driving efficiency across organizations.

Speaker: Elayne Jones is a Data Engineer at 3Cloud. She specializes in data visualization and data modeling using Power BI. She has expertise in developing Power Apps and creating Power Platform solutions that drive efficiency within organizations. Elayne is also experienced in querying data using the DAX and SQL languages. She has delivered numerous BI trainings and written blog posts on various BI and reporting topics.

A few days ago an exalted customer shared that they’ve acquired Synapse and now they’re ready for Power BI modeling. He just wasn’t sure how to give business users access to Synapse so that cool self-service BI can finally start. In the course of the conversation, it became clear that he had opened Synapse Studio and was left with the impression that Synapse has semantic modeling features. This is what happens when Marketing gets involved and people get confused about what a tool actually does. This newsletter attempts to clear up this confusion.