SaaS Losers and Winners: Paylocity and Element

“We are sailing to Philadelphia

A world away from the coaly Tyne

Sailing to Philadelphia

To draw the line

The Mason-Dixon line”

“Sailing to Philadelphia”, Mark Knopfler

As I’ve said in the past, I consider it a travesty when a SaaS provider disallows direct access to the data in its native storage, such as through ODBC or OLE DB providers, and forces you to use file extracts or APIs (which are often horrible and typically designed for app integration, not DW loads). This greatly inhibits data integration scenarios, such as extracting data for data warehousing. I’ve written on this subject many times, including here, here and here.

Continuing on this subject, let’s consider two other vendors: Paylocity and Element.

SaaS Loser: Paylocity

Like Workday, Paylocity is a popular HR cloud platform. And like Workday, Paylocity doesn’t provide direct access to its database, citing “security and IP concerns”. Instead, you must either resort to “work in progress” APIs or have Paylocity push file extracts to an SFTP server set up by you or them.

In both cases, Paylocity charges a setup fee and a per-employee fee. This is the equivalent of putting your money in a bank and the bank charging you a withdrawal fee for every dollar you take out. This is actually not a far-fetched example considering that banks in some countries have started charging deposit fees. And who knows what lies ahead with the resurgence of socialism, but I digress…

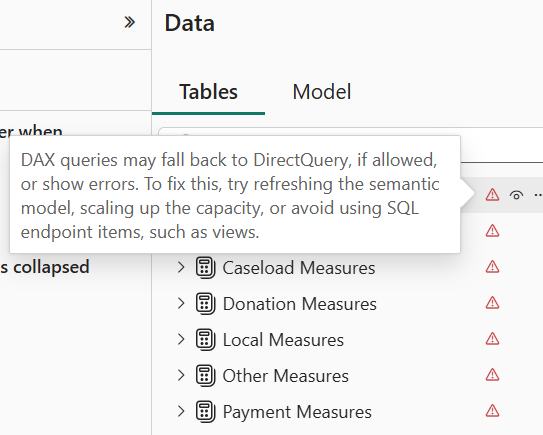

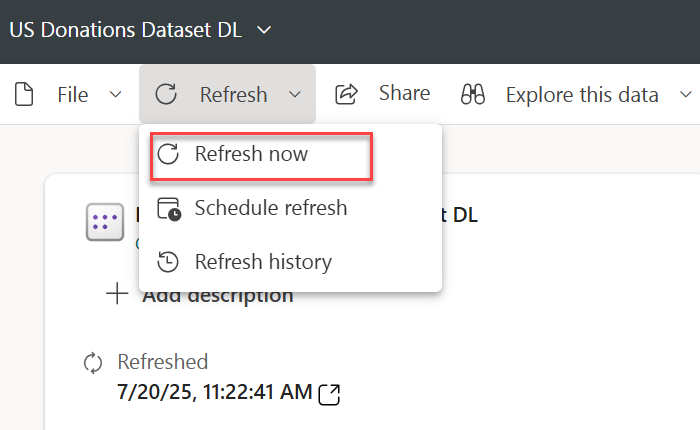

SaaS Winner: Element

Element is a niche ERP system for environmental testing. Element stores data in an Azure SQL database, and the vendor provides direct access to that database as though it were an on-premises data store. Getting write access is not an issue if you are fine with the usual disclaimer. This proved extremely useful in a current project where complex business rules require creating temporary and permanent tables. Why can’t we have more of these SaaS vendors?

When it comes to choosing a SaaS vendor, you must draw a line: is it your data that must be easily accessible, or is it the vendor’s property with strings attached?