Prologika Newsletter Summer 2018

Microsoft Common Data Services

BI and data integration projects often benefit from an operational data store (ODS), whose benefits and design I discussed in my “Designing an Operational Data Store (ODS)” newsletter. A corporate ODS typically falls into the organizational BI area, which means that it’s implemented and sanctioned by IT. Wouldn’t it be nice to let Business stage the data needed for business applications and analytics? Of course it would! Think of the Microsoft Common Data Services as a cloud staging database or ODS by Business and for Business. But before I discuss the details, make sure to review our Terms of Use, which have been updated as part of our commitment to transparency and to address the requirements set forth by the new European privacy law (General Data Protection Regulation). By continuing to use the Prologika website and its online services, you consent that you have read, understand and accept the terms of the Prologika Privacy Policy. If you have any questions regarding our updated Privacy Policy, please contact us by writing to info@prologika.com.

What’s Common Data Services?

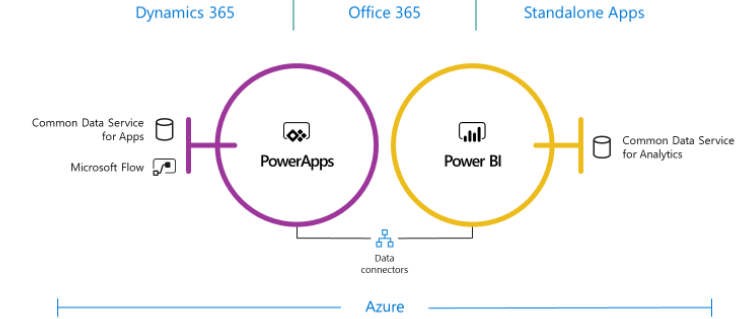

Microsoft introduced Common Data Services as a part of the reimagined Business Application Platform, described as “one connected platform that empowers everyone to innovate”, to put all the data you need into a standardized data model. Common Data Services consists of two offerings: Common Data Service for Apps (CDS for Apps) and Common Data Service for Analytics (CDS for Analytics).

Why two flavors? Think of the Microsoft Common Data Service for Apps (CDS for Apps) as a cloud OLTP-like repository by Business and for Business. Officially introduced in 2016 and running on Azure SQL Database, CDS for Apps is now the entity and data model behind Dynamics 365. This is where Dynamics 365 stores its data. Because it’s transaction-oriented, it’s layered on top of SQL Server. By contrast, Common Data Service for Analytics (CDS for Analytics) is oriented towards supporting analytical requirements.

How Do They Compare?

The following table compares the two CDS types.

| | CDS for Apps | CDS for Analytics |
| --- | --- | --- |
| Primary usage | OLTP | OLAP |
| Primary tool for loading data | PowerApps/Power Query | Power Query |
| Primary tool for reading data | PowerApps/Power BI | Power BI |
| Data storage | Azure SQL Database | Azure Blob Storage (a CSV text file per entity and a JSON file for the schema) |
| Power BI connectivity mechanism | OData | Azure Blob Storage |
| Pricing | Included in PowerApps plans | Included in Power BI Pro/Premium |
| Storage quota | 10 GB per database | Restricted by associated app workspace quota |
| Add-ons | Logic and validation | Power BI Insights apps |

Both CDS types support standardized entities, whose definitions are documented in the GitHub repository of the Common Data Model. Currently, the schema of these entities is designed and controlled by Microsoft and it’s limited to Dynamics entities, such as Account, Opportunity, and so on. However, Microsoft hopes that other vendors will provide solutions and extend the CDS schema. Of course, because CDS is your database, you can extend it with your own custom entities. Note that both CDS types target business users willing to store and analyze data in a business-friendly staging database. Over time, Microsoft hopes that partners will deliver more value to CDS by implementing apps (CDS apps are like the prepackaged apps that already exist in Power BI, such as for Salesforce and Dynamics). Let’s now highlight some of the differences between the two CDS flavors.

Common Data Service for Apps

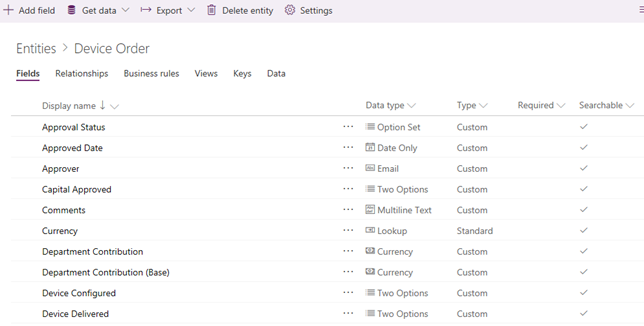

The main usage scenario for CDS for Apps is to jumpstart the development of PowerApps applications with a standardized data model that you can extend to your own needs.

The Good

There is a lot to like about CDS for Apps. Let’s start with pricing. Other vendors, such as Oracle and Teradata, have similar visions and products but their offerings are very expensive. The CDS for Apps pricing is included in the PowerApps licensing model because PowerApps is the primary client for creating CDS for Apps-centered solutions. Using CDS outside selected Dynamics 365 plans (that include it already) will cost you at least $7 per user and per month. CDS for Apps is more than just a data repository. It’s a business application platform with a collection of data, business rules, processes, plugins and more. In this regard, it resembles SQL Server Master Data Services (MDS). The modeler can:

- Define and change entities, fields, relationships, and constraints. For example, the screenshot shows a custom Device Order entity that I’ve created.

- Define business rules, such as to prepopulate Ship Date based on Order Date.

- Secure data to ensure that users can see it only if you grant them access. Role-based security allows you to control access to entities for different users within your organization.
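To make the business rule idea concrete, here is a minimal Python sketch of what a "prepopulate Ship Date based on Order Date" rule does conceptually. It is not how CDS for Apps defines or runs rules (that happens in the PowerApps designer); the `DeviceOrder` class, the `apply_ship_date_rule` function, and the three-day offset are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class DeviceOrder:
    """Hypothetical stand-in for the custom Device Order entity."""
    order_date: date
    ship_date: Optional[date] = None


def apply_ship_date_rule(order: DeviceOrder, lead_days: int = 3) -> DeviceOrder:
    """Prepopulate ship_date from order_date when it has not been set.

    lead_days is an assumed offset; the actual rule could use any logic.
    """
    if order.ship_date is None:
        order.ship_date = order.order_date + timedelta(days=lead_days)
    return order


order = apply_ship_date_rule(DeviceOrder(order_date=date(2018, 6, 1)))
print(order.ship_date)  # 2018-06-04
```

The rule only fills the field when it is empty, so a Ship Date the user already entered is left alone.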

Besides the original PowerApps canvas apps (like InfoPath forms), CDS for Apps also opens the possibility to create model-driven PowerApps applications (which require the PowerApps P2 plan). Model-driven apps are a new style of PowerApps application that makes it easy to build entity forms, entity views, and workflows. They are somewhat like creating Access data forms but more versatile. Because PowerApps knows CDS for Apps, you can create the app bottom-up, i.e. start with CDS for Apps and then generate the app based on the actual schema and data. For example, you can use PowerApps to build a model-driven app for implementing the workflow for approving a certain process.

How do you get data into CDS for Apps custom entities? Your PowerApps app can write to it. Or, you can create and schedule a project that uses Power Query (yep, the same one as in Power BI) to load data from somewhere into CDS for Apps.

The Bad

How do you get data out of CDS for Apps, such as to import data from some entities into a Power BI model? Microsoft has released a preview build of the Common Data Service for Apps connector for Power BI. However, this connector is even slower than the Dynamics connector. It uses the OData v4 Web API. Based on my limited tests, it took the connector about a minute to download 40,000 rows from Dynamics, which is about 10% slower than the Dynamics connector. To make things worse, the connector doesn’t support query folding, so Power BI must download the entire dataset before Power Query applies filters. The connector also doesn’t support REST filter and select predicates, so you can’t filter data or select a subset of columns at the source. Microsoft is actively working on improving the connector performance and it might get better in time.
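For reference, this is roughly what source-side filtering and column selection look like in an OData v4 request, using the standard `$select` and `$filter` query options. The sketch below only builds the URL. The host name, the `/api/data/v9.0` path, the `accounts` entity set, and the column names are placeholders I picked for illustration, not values from my tests.

```python
from urllib.parse import quote, urlencode


def build_odata_url(base_url: str, entity_set: str,
                    select: list[str], filter_expr: str) -> str:
    """Build an OData v4 query URL with $select and $filter options."""
    query = urlencode(
        {"$select": ",".join(select), "$filter": filter_expr},
        quote_via=quote,  # encode spaces as %20 rather than '+'
        safe="$,",        # keep the OData punctuation readable
    )
    return f"{base_url}/{entity_set}?{query}"


# Hypothetical endpoint and columns, for illustration only.
url = build_odata_url(
    "https://contoso.example/api/data/v9.0",
    "accounts",
    select=["name", "revenue"],
    filter_expr="revenue gt 100000",
)
print(url)
```

A connector that folds queries would translate Power Query filter and column-removal steps into a request like this one, so only the matching rows and columns travel over the wire.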

Continuing down the list of limitations, CDS for Apps doesn’t support change tracking (to capture changes to a given row) and incremental loads, such as to load or refresh only the data that changed yesterday or in the previous month. These are all essential features that could make an ODS even more valuable.
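To illustrate what an incremental load buys you, here is a minimal Python sketch of the common "high watermark" pattern: remember the latest modification timestamp you have loaded and pull only rows changed after it. This is a generic technique, not a CDS feature. The `modified_on` column and the in-memory `source` list are assumptions standing in for a real source system.

```python
from datetime import datetime


def incremental_rows(rows, last_watermark):
    """Return rows modified after last_watermark, plus the new watermark."""
    changed = [r for r in rows if r["modified_on"] > last_watermark]
    # If nothing changed, keep the old watermark.
    new_watermark = max((r["modified_on"] for r in changed),
                        default=last_watermark)
    return changed, new_watermark


# Hypothetical source rows with an assumed modified_on column.
source = [
    {"id": 1, "modified_on": datetime(2018, 6, 1)},
    {"id": 2, "modified_on": datetime(2018, 6, 15)},
    {"id": 3, "modified_on": datetime(2018, 6, 20)},
]
changed, watermark = incremental_rows(source, datetime(2018, 6, 10))
print([r["id"] for r in changed], watermark.date())  # [2, 3] 2018-06-20
```

Without change tracking at the source, there is no reliable `modified_on` equivalent to filter on, which is why every refresh ends up being a full reload.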

The Ugly

For years, people have complained that after migrating from the on-premises Dynamics to the cloud, they lost the ability to connect to its database directly and had to rely on the REST APIs (slow) or the Data Export Service to export the data to a SQL Server database (fast but requires additional effort and budget). Unfortunately, although CDS for Apps stores data in Azure SQL Database, Microsoft doesn’t expose its database directly to get data out fast and bypass the REST endpoint. When I raised this issue with Microsoft, I got feedback that CDS for Apps is a business platform and there are layers on top of data to handle security, rules, calculations, and so on. However, the argument that CDS for Apps is more than just a database is nonsensical to me. Try to explain to a customer that cakes have layers and CDS for Apps has layers, and therefore getting something out of it is slow. As I mentioned, the “layered nature” of the CDS is conceptually like MDS. In fact, I see a lot of overlap. MDS also supports rules, security, etc. but it doesn’t force me to go through the web service interface if all I need is the raw data. Hence my wish for direct connectivity to the Azure SQL Database endpoint of CDS for Apps.

Common Data Service for Analytics

CDS for Analytics is a standard feature of Power BI so every Power BI Pro user can access it. CDS for Analytics is exposed to the end user in Power BI as datapools. A datapool is a collection of entities associated with a Power BI app workspace. An entity maps to a text file in Azure Storage. Business users will rely on Power Query to populate (manually or via a scheduled refresh) entities in CDS for Analytics. You can access the workspace datapool in the workspace content page.
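To picture the "CSV text file per entity plus a JSON file for the schema" storage layout, here is a small Python sketch that reads an entity by applying column names and types from a schema document to headerless CSV rows. The JSON layout, the `Customer` entity, and its columns are invented for illustration; the actual file format that CDS for Analytics writes may well differ.

```python
import csv
import io
import json

# Hypothetical schema document: entity name plus column names and types.
SCHEMA_JSON = """
{"name": "Customer",
 "columns": [{"name": "CustomerId", "type": "int"},
             {"name": "Name", "type": "string"},
             {"name": "Revenue", "type": "float"}]}
"""

# Hypothetical headerless CSV file for the same entity.
ENTITY_CSV = "1,Contoso,1500.5\n2,Fabrikam,320.0\n"

# Map the assumed schema type names to Python conversions.
CASTS = {"int": int, "float": float, "string": str}


def load_entity(schema_text, csv_text):
    """Return (entity name, rows) with values cast per the schema."""
    schema = json.loads(schema_text)
    columns = schema["columns"]
    rows = []
    for record in csv.reader(io.StringIO(csv_text)):
        rows.append({col["name"]: CASTS[col["type"]](value)
                     for col, value in zip(columns, record)})
    return schema["name"], rows


name, rows = load_entity(SCHEMA_JSON, ENTITY_CSV)
print(name, rows[0])
```

Keeping the schema separate from the data is what lets any service that can write files to Azure Storage contribute entities, as long as it follows the agreed layout.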

The Good

I can think of three primary scenarios where CDS for Analytics can deliver value as it stands today:

- Offline data staging – Let’s say IT doesn’t allow direct connectivity to LOB applications but you need to create some reports on top of this data. You can stage the data as text files into CDS for Analytics. I don’t think CDS for Analytics would bring much value if you can connect to the data source directly in Power BI Desktop. The more you move the data, the more problems you may run into. At least for now, having apps on top of text files doesn’t look like a good reason to me but I guess we have to see what apps will become available in time.

- Prepackaged third-party solutions – Some time ago, a software vendor asked me how they can deploy a solution to Power BI for their customers but still retain ownership. Back then I didn’t have a good answer but CDS for Analytics might be a good option now. In fact, besides Power Query as the primary tool for loading entities, any service that can write to Azure Storage can bring data to CDS for Analytics. The ISV can write the entities as CSV files and tell CDS for Analytics to “mount” the storage container. CDS for Analytics can now see these mounted entities and treat them as part of the whole. Worried about protecting intellectual property? Currently only the Insights app installer would have access to the installed workspace and artifacts (other users in the organization would just see the published reports which are shared with them).

- Prepackaged insights – Like CDS for Apps, CDS for Analytics understands the Common Data Model. Over time, Microsoft and partners can contribute prepackaged “insights” that are built on top of popular LOB apps, such as Dynamics or Salesforce.

Pricing is also right. CDS for Analytics is included in Power BI although its storage counts towards the workspace quota. Another thing I like about CDS for Analytics is that the Power BI connector is very fast, unlike the CDS for Apps connector.

The Bad

As of now, datapools support only a small subset of the Power Query connectors. This is probably just a temporary limitation for the preview cycle. I’d imagine that all Power BI connectors for cloud and on-premises data sources will eventually be available. Continuing down the list of limitations, like CDS for Apps, CDS for Analytics doesn’t support incremental refreshes, so be careful downloading millions of rows every night.

The Ugly

CDS for Analytics promises to break silos but a datapool is associated with a Power BI workspace. This architecture fragments CDS for Analytics into Power BI workspaces. However, most users would probably require access to common entities, such as Customer and Product. Not only is this not possible but the datapool storage is also limited by the workspace quota. So, if you are a Power BI Pro user who has access to an app workspace, you’re currently limited to a 10 GB storage quota, which includes not only Power BI datasets but also CDS entities. I wish that CDS had no association to workspaces and was designed as a global staging area, just like Azure Storage. Microsoft has promised to allow you at some point in the future to reference entities between datapools in different workspaces and create calculated entities on top of them.

The success of Common Data Service for Apps will depend largely on adoption and contributions by Microsoft partners. Although it lacks typical ODS features and fast connectivity, CDS for Apps gains in “business platform” features. CDS for Analytics and Power BI Insights are new additions to Power BI. CDS for Analytics delivers an Operational Data Store (ODS) that is populated and maintained by business users. Microsoft and partners can augment CDS for Analytics with Power BI Insights apps.

Teo Lachev

Prologika, LLC | Making Sense of Data

Microsoft Partner | Gold Data Analytics

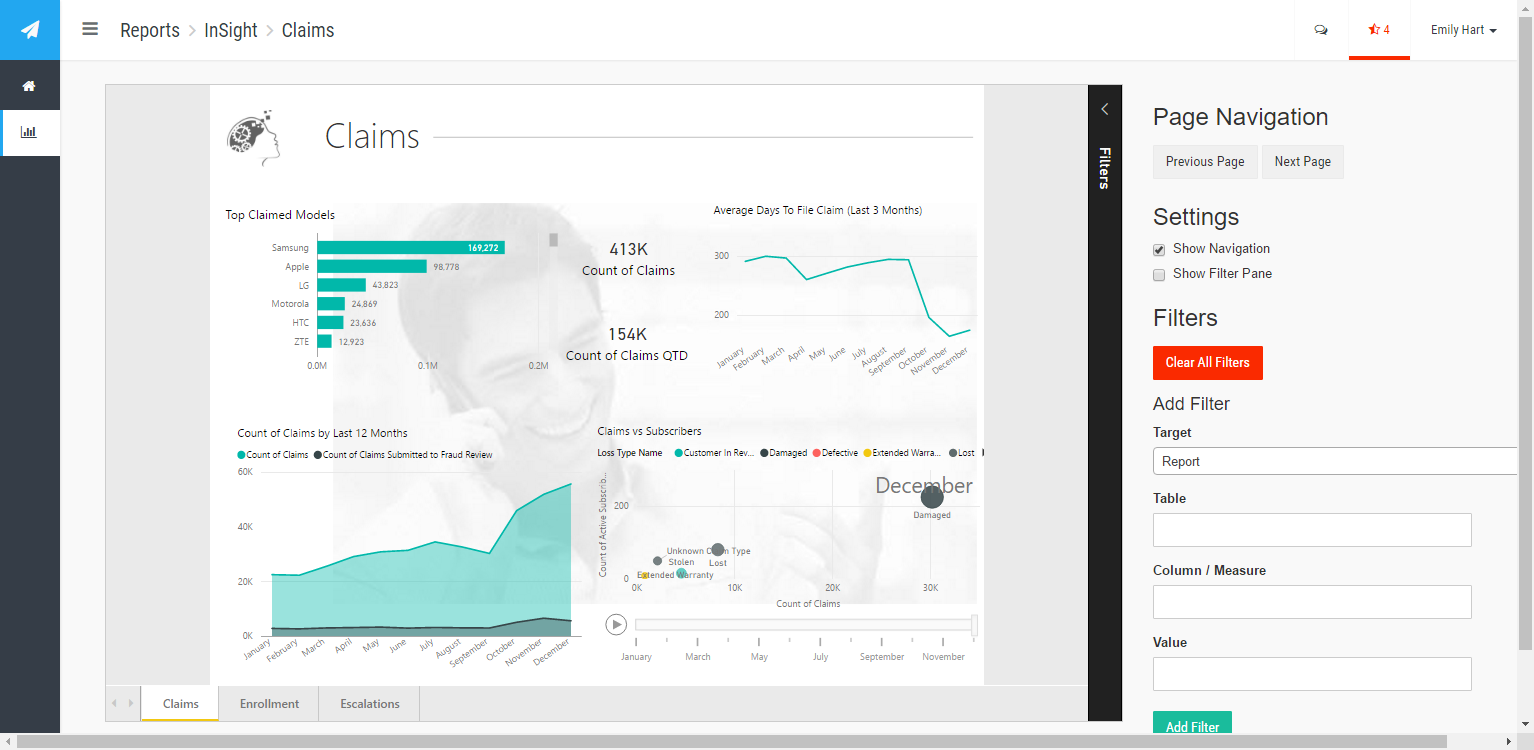

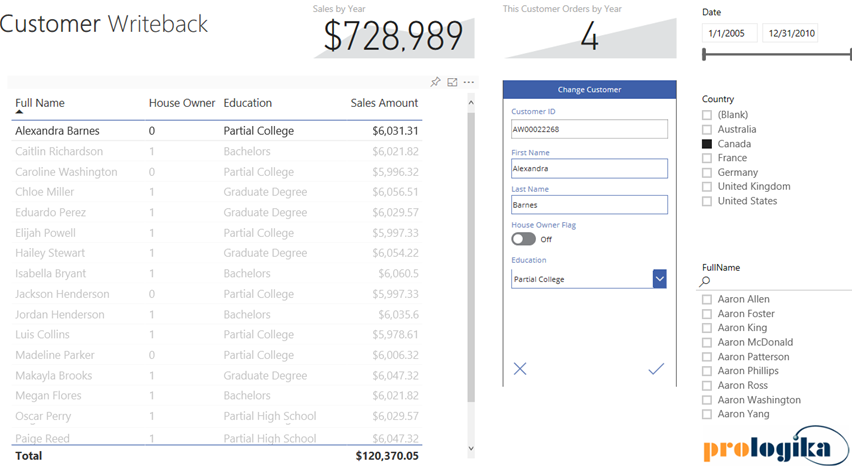

With hundreds of vendors out there, choosing a BI tool isn’t easy. If you’ve been following me for a while, you know that I love Power BI. One of the Power BI most prominent strengths is that it’s a part of the much broader Microsoft Data Platform that spans various on-premises and cloud offerings for data storage, processing, and analysis. Thanks to this integration, you can implement real-time dashboards, extend your Power BI reports with predictive capabilities, integrate with Big Data, and much more. In this newsletter, I’ll talk about an exciting integration option: extending Power BI with business applications, thanks to its integration with PowerApps and Flow. And if you happen to be in the Microsoft office in Alpharetta, feel free to ask for a demo of the Power BI Writeback that Prologika prepared and deployed to the Microsoft environment. You’ll be directed to one of the big screens when you can test the report that I mention in this newsletter.

With hundreds of vendors out there, choosing a BI tool isn’t easy. If you’ve been following me for a while, you know that I love Power BI. One of the Power BI most prominent strengths is that it’s a part of the much broader Microsoft Data Platform that spans various on-premises and cloud offerings for data storage, processing, and analysis. Thanks to this integration, you can implement real-time dashboards, extend your Power BI reports with predictive capabilities, integrate with Big Data, and much more. In this newsletter, I’ll talk about an exciting integration option: extending Power BI with business applications, thanks to its integration with PowerApps and Flow. And if you happen to be in the Microsoft office in Alpharetta, feel free to ask for a demo of the Power BI Writeback that Prologika prepared and deployed to the Microsoft environment. You’ll be directed to one of the big screens when you can test the report that I mention in this newsletter.

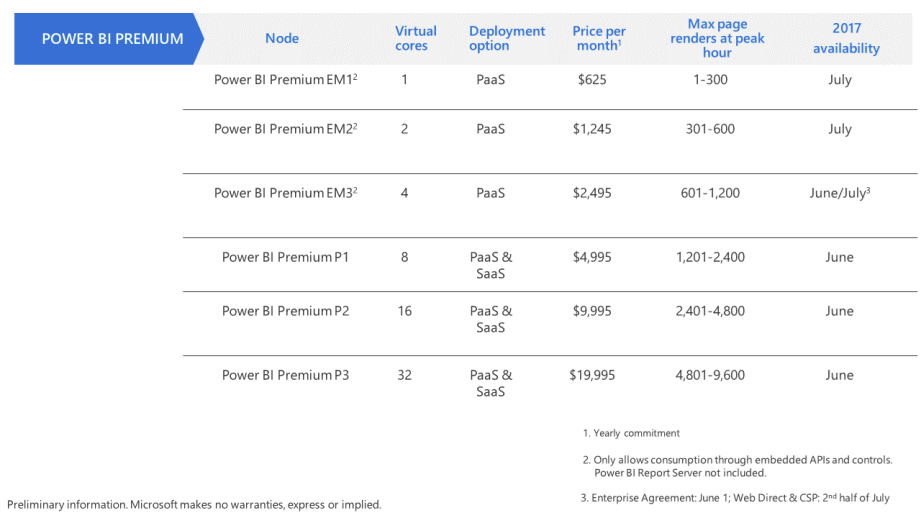

hope you’re enjoying this special time of the year – time for sharing and giving. Speaking of sharing, how does your company share BI artifacts with your B2B or B2C partners? Do you still send Excel spreadsheets or embed canned SSRS reports? If so, Power BI offers a better sharing model and I’ll show you how in this letter. And about Power BI, I’m proud to announce that the third revision of my “Applied Power BI Book” should be out in a week or so. Thoroughly updated to reflect the latest of the ever-changing cloud world of Power BI and featuring 20% new content, this book is the self-study resource your organization needs to master Power BI.

hope you’re enjoying this special time of the year – time for sharing and giving. Speaking of sharing, how does your company share BI artifacts with your B2B or B2C partners? Do you still send Excel spreadsheets or embed canned SSRS reports? If so, Power BI offers a better sharing model and I’ll show you how in this letter. And about Power BI, I’m proud to announce that the third revision of my “Applied Power BI Book” should be out in a week or so. Thoroughly updated to reflect the latest of the ever-changing cloud world of Power BI and featuring 20% new content, this book is the self-study resource your organization needs to master Power BI.

As I’m writing this newsletter, Florida and Georgia (I live in Atlanta, GA) are in the midst of planning for hurricane Irma, which is expected to be one of the

As I’m writing this newsletter, Florida and Georgia (I live in Atlanta, GA) are in the midst of planning for hurricane Irma, which is expected to be one of the

I hope you’re enjoying the beginning of 2017 and you’re staying healthy and busy. It’s been a hectic and exciting period for me. I published the second edition of my “Applied Microsoft Power BI”

I hope you’re enjoying the beginning of 2017 and you’re staying healthy and busy. It’s been a hectic and exciting period for me. I published the second edition of my “Applied Microsoft Power BI”

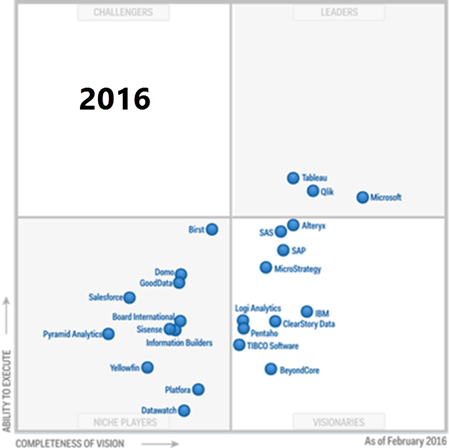

Last week’s seminar on formulating a Power BI enterprise strategy held at the Microsoft office was a great success. Over 50 people witnessed the amazing capabilities of the Power BI platform. As Power BI evolves, we’ll have similar events to bring you up to date. If you couldn’t attend, you can find the slides

Last week’s seminar on formulating a Power BI enterprise strategy held at the Microsoft office was a great success. Over 50 people witnessed the amazing capabilities of the Power BI platform. As Power BI evolves, we’ll have similar events to bring you up to date. If you couldn’t attend, you can find the slides