Prologika Newsletter Fall 2016

Embedding Reports in Custom Applications

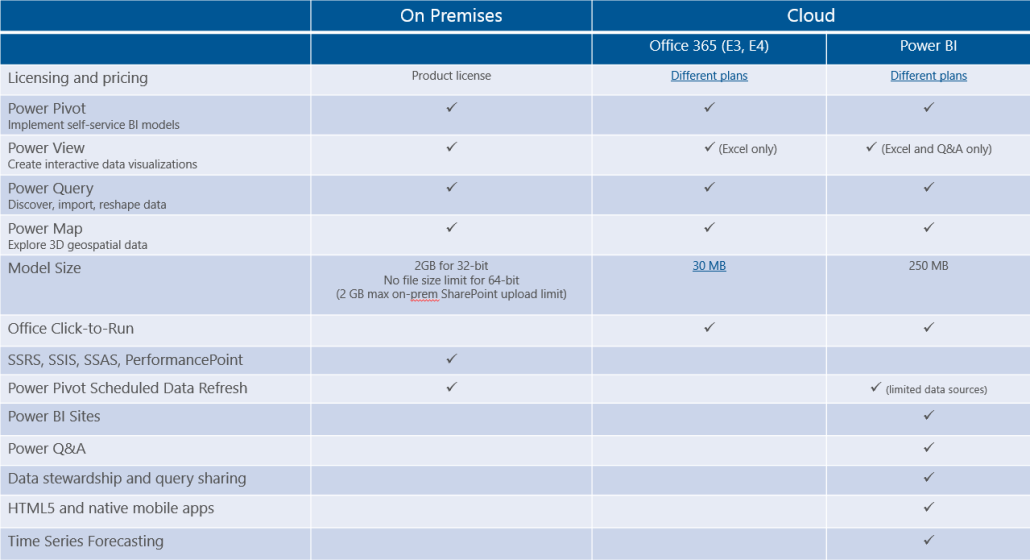

Last week’s seminar on formulating a Power BI enterprise strategy held at the Microsoft office was a great success. Over 50 people witnessed the amazing capabilities of the Power BI platform. As Power BI evolves, we’ll have similar events to bring you up to date. If you couldn’t attend, you can find the slides here. Or, are you looking for more in-depth Power BI training? There is still time to register for my 2-day public Power BI workshop on Sep 14-15 at the Microsoft Office in Atlanta. Reserve your seat today to attend this exclusive event for only $999 and learn practical Power BI knowledge and data analytics skills that you can immediately apply to your job.

Last week’s seminar on formulating a Power BI enterprise strategy held at the Microsoft office was a great success. Over 50 people witnessed the amazing capabilities of the Power BI platform. As Power BI evolves, we’ll have similar events to bring you up to date. If you couldn’t attend, you can find the slides here. Or, are you looking for more in-depth Power BI training? There is still time to register for my 2-day public Power BI workshop on Sep 14-15 at the Microsoft Office in Atlanta. Reserve your seat today to attend this exclusive event for only $999 and learn practical Power BI knowledge and data analytics skills that you can immediately apply to your job.

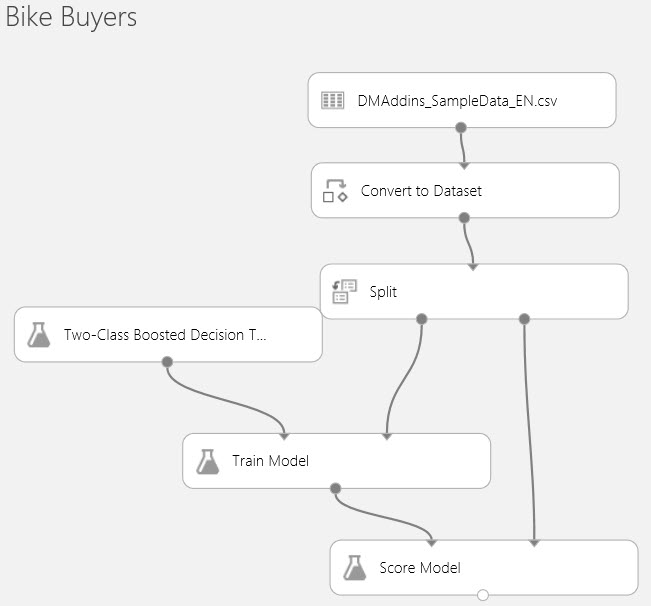

Speaking of Power BI, do you need to embed reports in custom apps in order to bring data analytics to your customers? If so, look no further than Power BI Embedded, which Prologika has been using to help ISVs increase the value of their apps by bringing instant insights to their customers. Most applications need some reporting capabilities. Report-enabling custom applications has been traditionally challenging with the Microsoft BI platform. True, Visual Studio includes Windows Forms and ASP.NET ReportViewer controls that make it very easy to embed Reporting Services reports (also known as paginated reports). However, the chances are that you might prefer more interactive reports that can be viewed with any browser and on any device. This is where Power BI Embedded comes in. You have the app. You have the data. Now bring data to life inside your app with Power BI Embedded!

What’s Power BI Embedded?

Power BI Embedded is an Azure cloud service that help developers embed Power BI interactive reports in custom apps for a third party. Notice that I said “third party”. The current licensing model prevents you for using Power BI Embedded to distribute reports inside your organization or re-implement functionality that already exists in Power BI. Fair enough – internal users are already covered by Power BI licenses and Microsoft doesn’t want you to come up with a tool that competes with Power BI. Speaking about licensing, what’s great about the Power BI Embedded pricing model is the recent change that Microsoft made where you’re charged per a report session and not for the number of reports that are rendered or registered customers! When the user opens a report, a session is started for one hour or until the user closes the app. The first 100 sessions/mo are on Microsoft. After that you’re charged $5 for 100 sessions per month. Besides the Power BI Embedded great features, this cost-effective pricing model is why independent software vendors (ISVs) and developers are flocking to Power BI Embedded.

How are users provisioned?

If you recall, Power BI requires each user to sign up. Power BI Pro charges the user $9.99 per month (based on feedback from customers, they don’t pay the sticker price because they either acquire Power BI through the Office 365 E5 plan and/or have a discounted price). If you have an app that potentially can be accessed by thousands of users, the Power BI pricing model is not cost effective. By contrast, Power BI Embedded leaves it up to the custom app to authenticate the user. So there is no provisioning you have do. However, although Power BI Embedded uses the Power BI infrastructure, don’t expect the Power BI reports to show up when you log in to Power BI. In other words, Power BI Embedded and Power BI don’t share datasets and reports. Consequently, at the least for now, Power BI Embedded is limited to embedding reports only. No Q&A, no quick insights, no portal, and no other Power BI goodies. Another limitation is that at least for now Power BI Embedded is limited to report viewing only. Users can’t edit the reports, such as to add or remove fields.

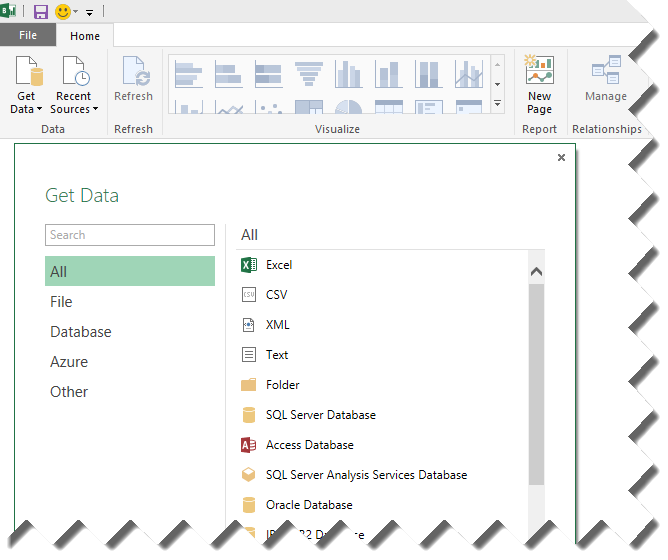

What data sources are supported?

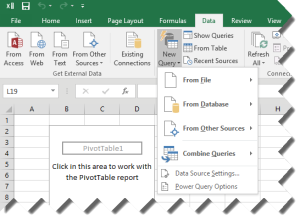

One what to acquire data is to import your data in Power BI Desktop. When you import data, you’re limited of 1 GB of compressed data, which is the same dataset limitation that Power BI currently has. Because of the excellent compression (x5-10 ration), you can still pack a lot of data into a 1 GB dataset. For example, you can create a separate extract for each customer if you have a limited number of customers or you need to provide a high degree of customization for each customer. Another option is to connect live to cloud data sources. Because Power BI Embedded is an Azure cloud service, naturally it works with Azure-resident PaaS data sources, such as Azure SQL Database and Azure SQL Data Warehouse.

How does security work?

Power BI Embedded uses OAuth to authorize your users with Power BI. Your application authenticates the user as it would normally do. If the user has access to reports, your application would login to the Power BI Embedded using a special access key (think of it as a password). When the user requests the report, the application generates a token that Power BI validates to grant access to the report. If you need to support row-level security (RLS), Power BI Embedded get you covered too! You can define your security roles and row filters in Power BI Desktop. Then, your application can pass the user login (it doesn’t have to be on your domain) and what role(s) the user belongs to. The net result is that the user can see only the data the user is authorized to see.

How customizable is Power BI Embedded?

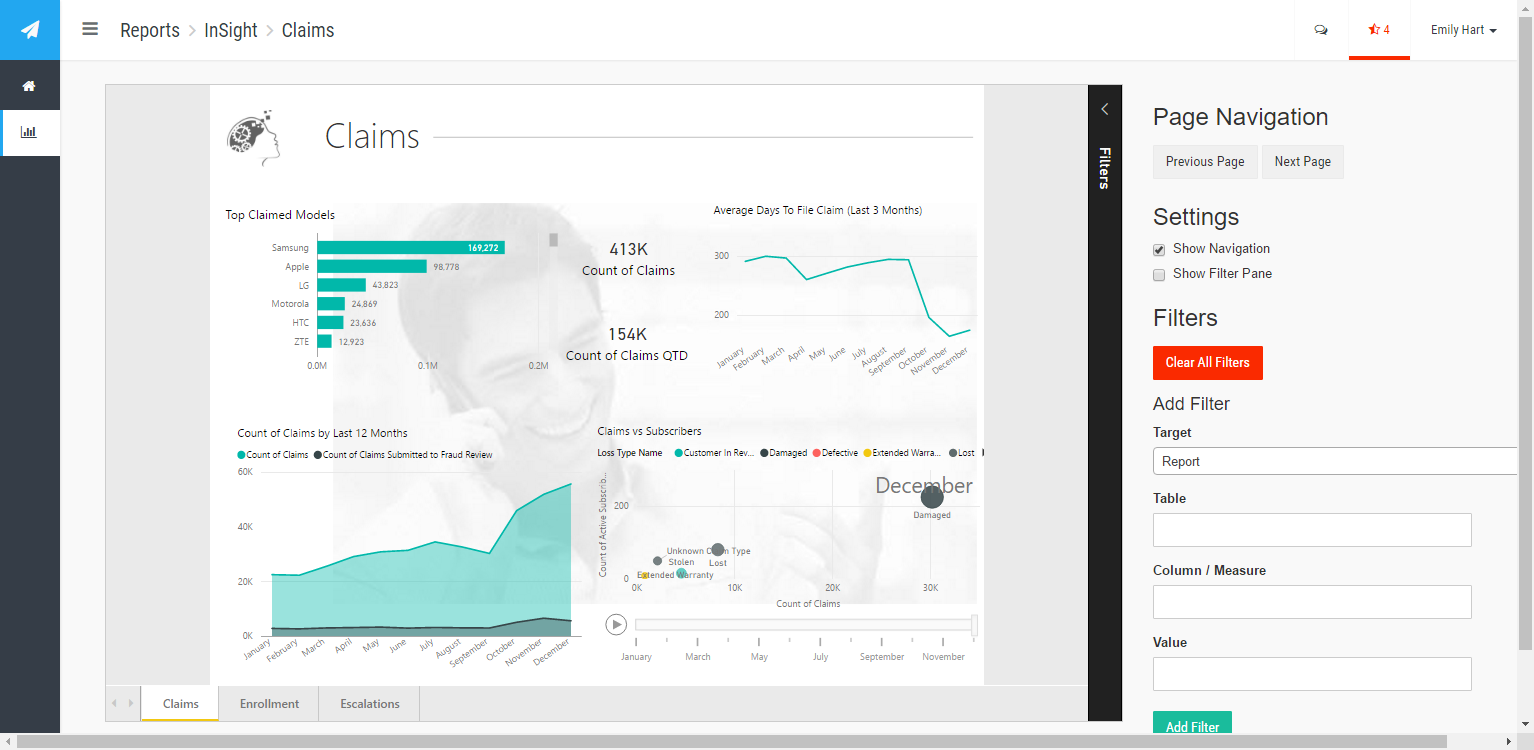

The Power BI Embedded customization story got even better with the introduction of the JavaScript APIs. They allow you to programmatically access the report object model on the client, such as to react to page change events, pass filters, or hide the report filter pane. For example, in the screenshot above, the application has its own page navigation and filter pane that replaces the Microsoft-provided filter pane. This gives the application more control about validating and passing visual, page, or report-level filters.

How do I get started?

Microsoft has provided an excellent ASP MVC sample to get you started. Check also my “Configuring Power BI Embedded” blog for a better configuration experience

MS BI Events in Atlanta

- Prologika: 2-day Applied Power BI public workshop in Atlanta by Teo Lachev on Sep 14-15

- Atlanta BI Group: “Power BI at one year – a look back and a look forward ” presentation by Siva Harinath on 9/26

- Atlanta Code Camp 2016: A free training event for developers on October 15th. I’ll have a presentation on Power BI Embedded.

Regards,

Teo Lachev

Prologika, LLC | Making Sense of Data

Microsoft Partner | Gold Data Analytics

I’m excited to announce the availability of my latest (7th) book –

I’m excited to announce the availability of my latest (7th) book –

Prologika: “

Prologika: “ Before we get to the subject of this newsletter, I’m happy to announce the availability of my latest class –

Before we get to the subject of this newsletter, I’m happy to announce the availability of my latest class –

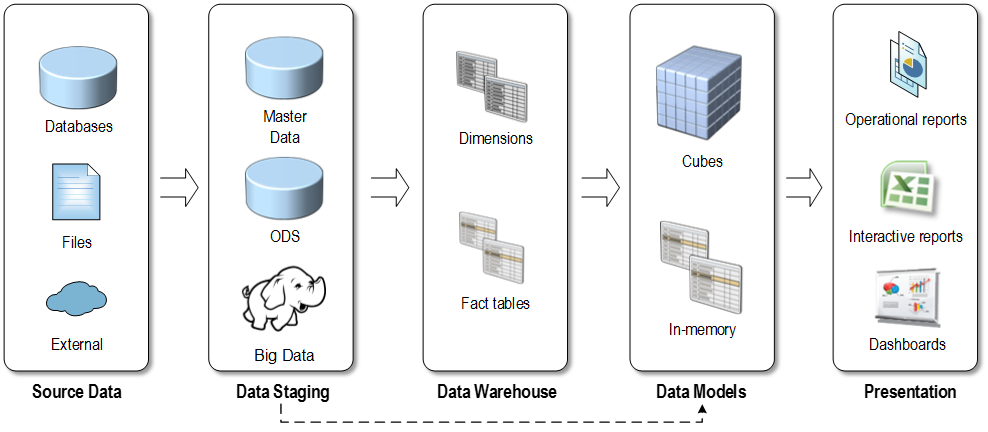

A while back I met with a client that was considering overhauling their BI. They asked me if the traditional data warehousing still makes sense or should they consider a logical data warehouse, Big Data, or some other “modern variant”. This newsletter discusses where data warehousing is going and explains how different data architectures complement instead of compete with each other.

A while back I met with a client that was considering overhauling their BI. They asked me if the traditional data warehousing still makes sense or should they consider a logical data warehouse, Big Data, or some other “modern variant”. This newsletter discusses where data warehousing is going and explains how different data architectures complement instead of compete with each other.

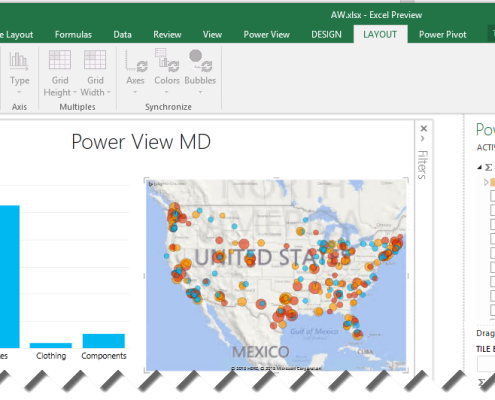

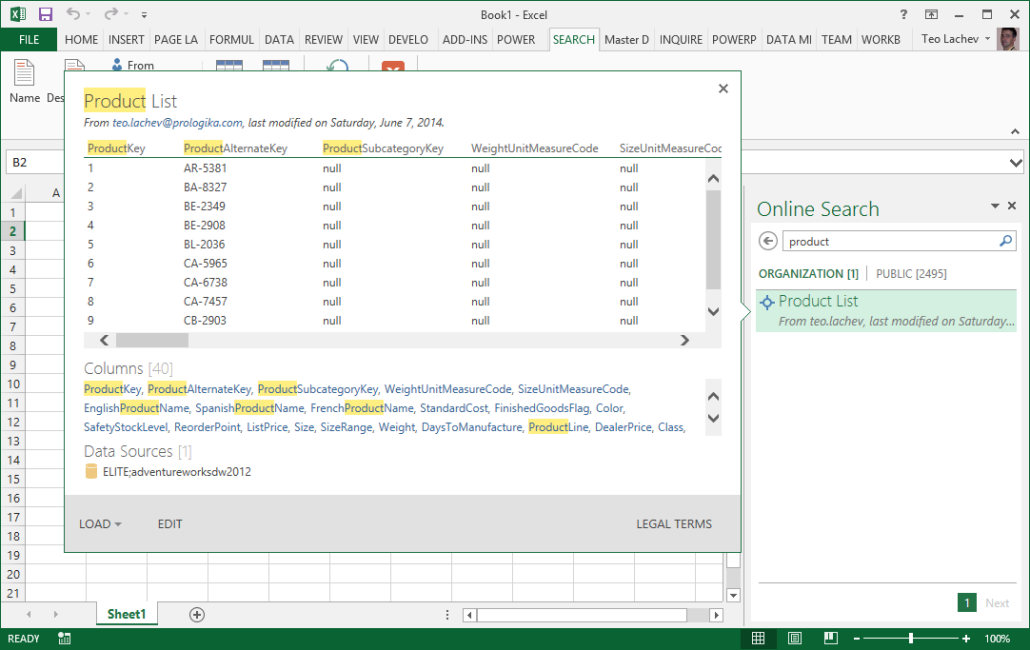

After an appetizer of embedded Power View reports , Microsoft proceeded to the main course that is a true Christmas gift – a

After an appetizer of embedded Power View reports , Microsoft proceeded to the main course that is a true Christmas gift – a