Finding Data Movement Bottlenecks

As you’ve heard, data volumes are increasing, putting pressure on BI practitioners to do more with less within ever-shrinking processing windows. I’ve shared some ETL best practices in various blogs, such as my “Is ETL (E)ating (T)hou (L)ive?” newsletter. Two recent projects targeting SQL Server brought up a few additional considerations regarding data movement bottlenecks.

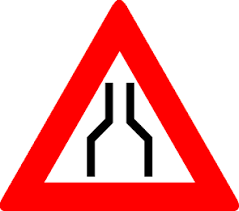

Slow Network

If you’re loading data from data sources across a Wide Area Network (WAN), network speed is probably going to be a major concern. While a LAN typically runs at around 1,000 Mbps, you’ll probably find WAN speeds to be about ten times slower. You can use the network tab in the Windows Task Manager to find the network throughput. The next step is to determine how much the network slows down data movement using a simple approach:

- Remote in to the source server (the remote server that hosts the data).

- Open SSMS, create a query, and execute a SELECT statement from your ETL data flow task.

- Before running the query, you might want to go to the query options (Grid settings) and check “Discard results after execution” to prevent loading thousands of rows in the query grid and running SSMS out of memory.

- Record the time to execute the query. This is your baseline speed that doesn’t involve the network at all.

- Now remote in to the server where ETL is running and repeat the test. The delta between the two durations tells you how much time is spent moving the data across the wire.
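The comparison in the steps above boils down to simple arithmetic. Here is a minimal Python sketch of it; the function name `network_overhead` and the two timings are hypothetical placeholders for illustration, not measurements from the projects described here.

```python
def network_overhead(local_seconds: float, remote_seconds: float) -> tuple[float, float]:
    """Return (seconds spent on the wire, share of the remote run spent on the wire).

    local_seconds:  query duration measured on the source server (no network).
    remote_seconds: duration of the same query measured from the ETL server.
    """
    if remote_seconds <= 0:
        raise ValueError("remote_seconds must be positive")
    delta = remote_seconds - local_seconds
    return delta, delta / remote_seconds


# Hypothetical timings: 45 s on the source server, 180 s from the ETL server.
delta, share = network_overhead(local_seconds=45, remote_seconds=180)
print(f"Network overhead: {delta:.0f} s ({share:.0%} of the ETL-side run)")
```

Running each test a few times and comparing averages should give a steadier number, since caching on the source server can make the second run faster than the first.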

You now know how much the network slows down ETL, but reducing network latency might be out of your control. Hence, incremental extraction (moving fewer rows) and parallel ETL execution become important.

Slow DML Operations

It’s not uncommon for BI ETL tasks to load huge datasets. Sure, you have SSD drives that cover fast I/O, right? Well, there are other things to check. In a recent project, we had a two-node Always On cluster providing high availability for an OLTP database. To reduce SQL Server licensing cost, the data warehouse was hosted on the same cluster. Because availability group replication requires the Full recovery model, the data warehouse database was configured for Full recovery. This effectively disables minimal logging, which is otherwise easy to attain with SQL Server 2016 or higher. Here are some stats from running a simple batch insert of 10 million rows:

- Data warehouse database in Simple recovery model: 1:20 min

- Data warehouse database in Full recovery model: 1:34 min (no minimal logging)

- Data warehouse database synchronized: 6:58 min!
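To make the relative cost of each configuration explicit, the three timings above can be converted to seconds and compared. This is a small Python sketch of that arithmetic; the helper `to_seconds` and the dictionary keys are names made up for this illustration.

```python
def to_seconds(mmss: str) -> int:
    """Convert an 'm:ss' duration such as '1:34' to whole seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


# Timings reported above for the 10-million-row batch insert.
timings = {"simple": "1:20", "full": "1:34", "synchronized": "6:58"}
seconds = {name: to_seconds(value) for name, value in timings.items()}

# Extra time Full recovery costs relative to Simple, as a percentage.
full_overhead_pct = (seconds["full"] - seconds["simple"]) / seconds["simple"] * 100

# How many times slower the synchronized database is than each baseline.
sync_vs_simple = seconds["synchronized"] / seconds["simple"]
sync_vs_full = seconds["synchronized"] / seconds["full"]

print(f"Full vs Simple: +{full_overhead_pct:.1f}%")
print(f"Synchronized vs Simple: {sync_vs_simple:.1f}x")
print(f"Synchronized vs Full: {sync_vs_full:.1f}x")
```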

As you can see, Full Recovery adds about 18% more overhead (besides producing huge logs during massive DML operations). However, the worst culprit is the synchronization between the primary and secondary replicas, which makes the load roughly five times slower than in Simple recovery. You’ll know that you are waiting for replica synchronization when you see the HADR_SYNC_COMMIT wait type. You can follow the steps in this blog to try reducing the cluster synchronization latency, but the best strategy might be to remove the DW database from synchronization and change its recovery model to Simple, assuming that the DW is not a mission-critical resource.
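One way to check for the HADR_SYNC_COMMIT wait type is to read the cumulative counters in the `sys.dm_os_wait_stats` dynamic management view. The Python sketch below is an illustration only: it assumes you already have a DB-API cursor connected to the primary replica (for example, through a driver such as pyodbc), and the function name `hadr_sync_commit_stats` is made up here.

```python
# Cumulative wait statistics since the last SQL Server restart or manual reset.
WAIT_QUERY = """
SELECT wait_type, waiting_tasks_count, wait_time_ms
FROM sys.dm_os_wait_stats
WHERE wait_type = 'HADR_SYNC_COMMIT'
"""


def hadr_sync_commit_stats(cursor):
    """Return HADR_SYNC_COMMIT totals as a dict, or None if no row comes back.

    `cursor` is assumed to be any DB-API 2.0 cursor connected to SQL Server.
    """
    cursor.execute(WAIT_QUERY)
    row = cursor.fetchone()
    if row is None:
        return None
    tasks, wait_ms = row[1], row[2]
    return {
        "wait_type": row[0],
        "waiting_tasks": tasks,
        "total_wait_ms": wait_ms,
        # Average wait per task; guard against division by zero.
        "avg_wait_ms": wait_ms / tasks if tasks else 0.0,
    }
```

Because the counters are cumulative, taking one snapshot before the ETL run and one after, then subtracting, should give a better picture of the waits caused by the load itself.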

All projects start simple. Adventure Works-type data loads are fast and require no optimization. But many of us are not that lucky, and tackling hundreds of millions (if not billions) of rows is becoming the norm. You must bend over backwards to optimize data movement and reduce ETL processing windows before you discover that ETL tasks are running back to back 24×7.