High Memory Usage and Calculated Columns

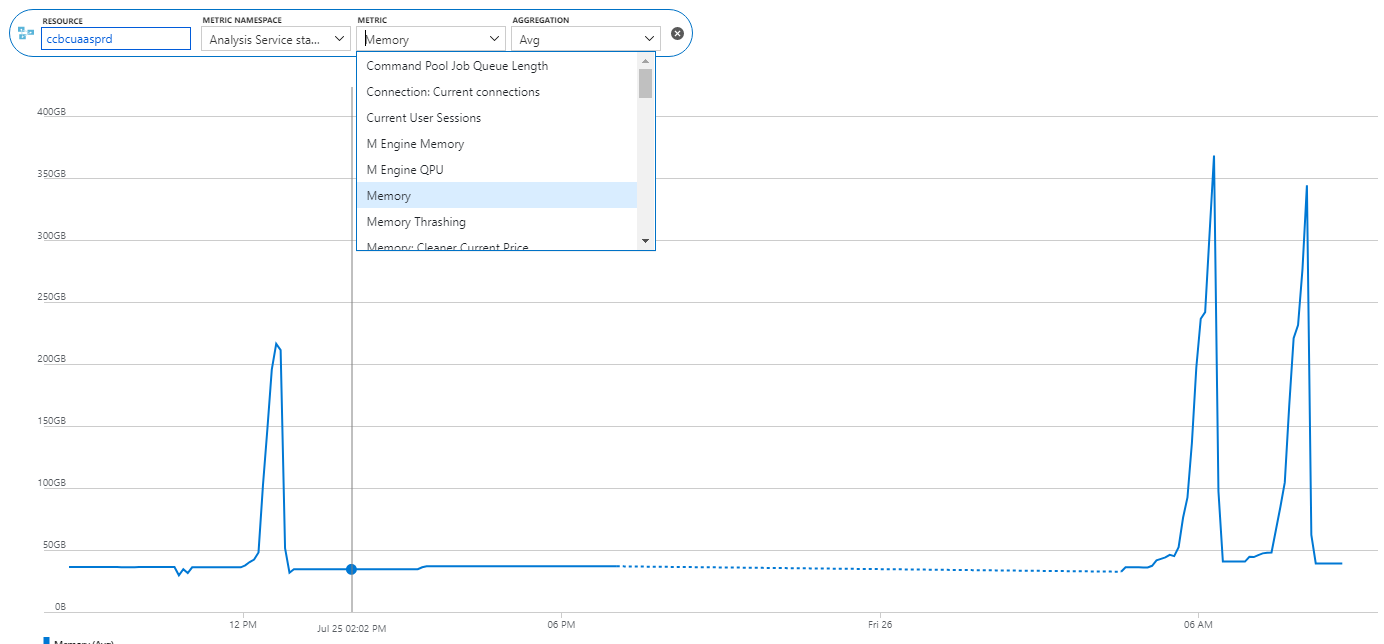

Scenario: A client reports a memory spike during processing. They have a Tabular semantic model deployed to Azure Analysis Services. They fully process the model daily. The model normally takes less than 50 GB RAM but during processing, it spikes five times and Azure Analysis Services terminates the processing task complaining that it “reached the maximum allowable memory in our pricing tier”. Normally, fully processing the model should take about twice the memory but five times?

Solution: Upon expecting the model design, I discovered that the client has decided to add (many) calculated columns to the two fact tables in the model. Most of these columns are used to calculated variances to prior year. The formulas contain DATESYTD and other DAX date-related functions. After data is read, Tabular processes calculated columns, relationships and hierarchies. In this case, the spike was due to calculations involving large time ranges and ineffective DAX expressions. Converting these columns to measures resolved the issue.

Solution: Upon expecting the model design, I discovered that the client has decided to add (many) calculated columns to the two fact tables in the model. Most of these columns are used to calculated variances to prior year. The formulas contain DATESYTD and other DAX date-related functions. After data is read, Tabular processes calculated columns, relationships and hierarchies. In this case, the spike was due to calculations involving large time ranges and ineffective DAX expressions. Converting these columns to measures resolved the issue.

As a best practice, abstain from using calculated columns (especially in fact tables). Make sure you understand the difference between measures and calculated columns (I cover this extensively in my latest book “Applied DAX with Power BI“). If you do need expression-based columns, such to materialize expensive calculations, consider defining them upstream, such as in SQL views or Power Query.