Prologika Newsletter Winter 2018

Happy Holidays! I hope you’re enjoying this special time of the year. According to Gartner, “poor data quality is hitting organizations where it hurts – to the tune of $15 million as the average annual financial cost in 2017”. Data integration is another huge challenge; you know it and I know it. Microsoft hopes that Power BI dataflows will help data analysts tackle these issues, and dataflows are the subject of this newsletter. But before I give you my opinion about this newcomer, I’m excited to announce the availability of the fourth edition of my “Applied Microsoft Power BI” book – the only Power BI book that’s updated every year to keep up with the ever-changing world of Power BI and the Microsoft Data Platform! The book is slowly making its way to the retailers and it should be available on Amazon in the first days of 2019.

What’s a dataflow?

I’m sure that by now you’ve heard about Power Query – a tool that is integrated with Power BI Desktop to help the data analyst clean and shape the data. The issue with Power Query, though, is that it’s tightly coupled with Power BI Desktop, while data integration challenges sometimes need to be solved at a larger scale. Think of dataflows as “Power Query in the Cloud”. Dataflows are to self-service BI what ETL is to Power BI pros.

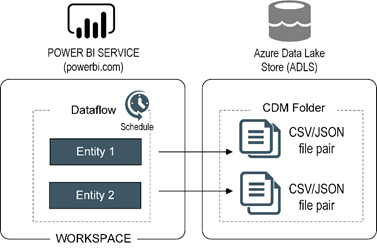

A dataflow is a collection of Power Query queries, also known as entities, and it lives in a Power BI organizational workspace.

For example, if I want to stage data from Salesforce, I can create a dataflow with as many entities as Salesforce tables I want to stage. Unlike Power Query, which can only output to a table in a data model, a dataflow saves the output of each entity as a pair of files, a CSV file (data) and a JSON file (metadata), in a Microsoft-provided data lake store.
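To make the CSV-plus-JSON pairing more concrete, here is a minimal Python sketch of how a consumer could combine the two files. It is only an illustration: the metadata layout, the `Account` entity, its attribute names, and the assumption that the data file has no header row are all made up for this example and don’t reflect the actual schema that dataflows write.

```python
import csv
import io
import json

# Hypothetical, simplified metadata. The real dataflow metadata is richer
# and its schema is not reproduced here.
METADATA_JSON = """
{
  "entity": "Account",
  "attributes": [
    {"name": "AccountId", "type": "int"},
    {"name": "Name", "type": "string"},
    {"name": "AnnualRevenue", "type": "float"}
  ]
}
"""

# Data file: values only, no header row (an assumption for this sketch).
DATA_CSV = "1,Contoso,1250000.5\n2,Fabrikam,830000\n"

# Map the hypothetical type names to Python conversion functions.
CASTS = {"int": int, "float": float, "string": str}


def load_entity(metadata_text, csv_text):
    """Return the entity name and its rows as dicts typed per the metadata."""
    metadata = json.loads(metadata_text)
    attributes = metadata["attributes"]
    rows = []
    for record in csv.reader(io.StringIO(csv_text)):
        # Pair each value with its attribute and convert it to the declared type.
        row = {
            attr["name"]: CASTS[attr["type"]](value)
            for attr, value in zip(attributes, record)
        }
        rows.append(row)
    return metadata["entity"], rows


entity_name, rows = load_entity(METADATA_JSON, DATA_CSV)
print(entity_name, rows)
```

The point of the sketch is that the CSV file alone carries only text; the column names and data types come from the metadata file that travels with it.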

So, for the BI pros who are familiar with SSIS projects for ETL, think of a dataflow as a project and queries as SSIS packages. Just like you can deploy an SSIS project and schedule it to run at a specific time, you can schedule a dataflow, and this will execute all its queries.
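If you think better in code, the project/package analogy can be sketched as a tiny Python model. This is not how Power BI implements dataflows; the `Entity` and `Dataflow` classes, the `refresh` method, and the sample Salesforce rows are hypothetical names and data introduced only to show that one refresh runs every query in the collection.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Row = Dict[str, object]


@dataclass
class Entity:
    """One query in the dataflow; `query` stands in for a Power Query query."""
    name: str
    query: Callable[[], List[Row]]


@dataclass
class Dataflow:
    """A named collection of entities, analogous to an SSIS project."""
    name: str
    entities: List[Entity] = field(default_factory=list)

    def refresh(self) -> Dict[str, List[Row]]:
        """Run every entity's query, as a scheduled refresh would."""
        return {entity.name: entity.query() for entity in self.entities}


# Hypothetical staging dataflow with two entities and hard-coded sample rows.
salesforce = Dataflow(
    name="Salesforce staging",
    entities=[
        Entity("Account", lambda: [{"Id": 1, "Name": "Contoso"}]),
        Entity("Opportunity", lambda: [{"Id": 10, "AccountId": 1, "Amount": 5000}]),
    ],
)

staged = salesforce.refresh()
print(sorted(staged))
```

A single call to `refresh` produces output for every entity, which mirrors how scheduling the dataflow executes all of its queries rather than one at a time.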

The Good

There is a lot to like about dataflows. I can think of two primary self-service scenarios that can benefit from dataflows:

- Data staging – Many organizations implement operational data stores (ODS) and staging databases before the data is processed and loaded in a data warehouse. As a business user, you can use dataflows for a similar purpose. For example, one of our clients is a large insurance company that uses Microsoft Dynamics 365 for customer relationship management. Various data analysts create data models from the same CRM data, but they find that refreshing the CRM data is time-consuming. Instead, they can create a dataflow to stage some CRM entities before importing them into Power BI Desktop. Even better, you could import the staged CRM data into a single dataset or an organizational semantic model to avoid multiple data copies and duplicated business logic.

- Certified datasets – One way to improve data quality and promote better self-service BI is to prepare a set of certified common entities, such as Organization, Product, and Vendor. A data steward can be responsible for designing and managing these entities. Once in place, data analysts can import the certified entities in their data models.

There is a solid architecture, a clear vision, and real investment behind dataflows. You create dataflows using a tool that you’re already familiar with: Power Query. If you know Power Query, you know dataflows. Microsoft provides the data lake storage, and its cost is included in Power BI Pro and Power BI Premium. So, you have everything you need to get started with dataflows today. Ingesting the dataflow output is very fast too because you import CSV files.

The Bad

My blog post on the same subject started with positioning, because watching the Microsoft presentations left me with the impression that Microsoft encourages business users to consider dataflows for any data integration task, from data staging to loading data warehouses and even replacing data warehouses and ETL (heard that vibe before?). But Matthew Roche clarified that “dataflows don’t replace data warehouses and ETL any more than Power BI desktop replaces a full set of BI pro tools. Dataflows complement these pro tools, and enable users who are not BI pros to fill in more gaps in a BI solution, similar to other Power BI capabilities. Although dataflows mean that self-service users can do more without help, the same patterns still apply”. So, keep this in mind if you hear different propaganda, and use the right tool for the task at hand.

You need Power BI Premium to realize the full potential of dataflows. For some obscure reason, features like linked and computed entities are not available in Power BI Pro. I wonder how many customers will feel pushed to go Power BI Premium as more and more features are only available there. I don’t mind scalability- and performance-related features being Premium-only, but incremental refresh and linked entities?

Lastly, the CSV output is both a blessing and a curse. True, ingestion is fast, but text files are not a relational database. Business users can’t make direct changes to the staged data. Further, the only option is to import the dataflow output into Power BI, because DirectQuery is not available for text files.

The Ugly

As dataflows stand today (in preview), you (almost) can’t directly access the output generated by dataflows. This precludes the continuum between the self-service and organizational worlds. For example, IT might need to import a certified dataset into a data warehouse. But you can only connect to a dataflow in Power BI Desktop because the CSV files are not directly accessible. True, Power BI Premium lets you bring your own data lake storage (currently a preview feature), but a better option in my opinion would be to provide access to the raw data in both Power BI Pro and Premium, given that this is your data lake and your data.