Unblocking MDX Queries from SSRS Reports

I love performance optimization. It’s exciting to peek under the hood and take a deep dive into the mysterious world of how Microsoft designed things. And it always humbles me by exposing gaps in my knowledge.

Scenario: You have SSRS reports that query Analysis Services Multidimensional cubes. When users request reports in Report Manager (aka Web Portal starting with SSRS 2016), the report sporadically hangs for minutes, without even getting to the ReportViewer page. The server doesn’t appear to be under resource pressure. The issue happens when the cube is being processed. And no, this is not about locking.

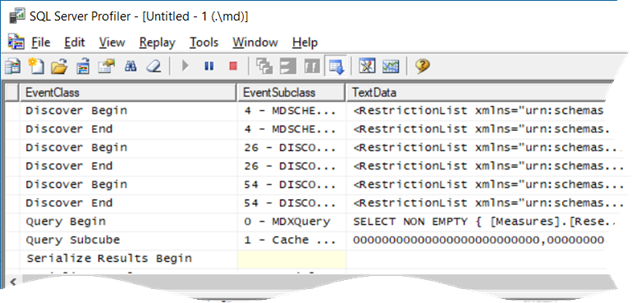

Solution: This one wasn’t trivial to figure out. The profiler showed that when the report is requested, SSRS fires the first MDX query (typically, a query that populates the parameter available values). The breakthrough came when I executed the same query in SSMS while the SSRS one was blocked, and it executed immediately. With some help from Microsoft, it turned out that flattened rowsets (which is what SSRS requests) are serialized by threads in the SSAS processing pool. Indeed, once I enabled the Serialize events in the profiler, I noticed that the Serialize Results Begin event fired but Serialize Results End did not.

So, if there are not enough threads in the SSAS processing pool, the flattened queries are queued behind the processing jobs. You can use the MSAS11 Threads: Processing Pool Job Queue Length counter to confirm that jobs are waiting for a processing thread. In this case, the queue length was about 200 when fully processing the database!
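If you capture that counter to CSV (for example with `typeperf` and its `-sc` sample-count switch), a few lines of Python can tell you whether the queue was sustained or just a blip. This is only a sketch: the counter path assumes a default SSAS 2012 instance, and the function names and the "every sample above zero" rule are my own illustration, not anything SSAS defines.

```python
import csv
import io

# Assumed counter path for a default SSAS 2012 instance. Other versions and
# named instances expose the counter under a different object name.
COUNTER = r"\MSAS11:Threads\Processing pool job queue length"


def queue_lengths(typeperf_csv):
    """Return the counter samples from typeperf-style CSV text.

    Expects a header row followed by rows of (timestamp, value).
    """
    rows = csv.reader(io.StringIO(typeperf_csv))
    next(rows, None)  # skip the header row
    values = []
    for row in rows:
        if len(row) < 2:
            continue
        try:
            values.append(float(row[1]))
        except ValueError:
            continue  # skip samples that are blank or not numeric
    return values


def is_pool_starved(values, threshold=0.0):
    """Hypothetical rule of thumb: every sample shows queued jobs."""
    return bool(values) and all(v > threshold for v in values)
```

Run it over samples taken while the database is processing. A queue that never drains during that window matches the Serialize Results Begin without End pattern described above.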

The resolution depends on your specific situation. If you have plenty of CPU cores, try increasing the pool size by increasing the ThreadPool\Process\MaxThreads SSAS setting. The default value of this setting is 0, causing SSAS to allocate the maximum of (64, number of logical cores). So, if you have a two-socket server with 16 hyperthreaded cores (32 logical cores), the maximum number of processing threads would be 64. On the other hand, if you see high CPU utilization during processing, try reducing the parallelism of the processing task by using one of these three approaches:

- Reduce the maximum number of connections in the SSAS data source properties.

- Set the MaxParallel setting on the processing batch command.

- Reduce the CoordinatorExecutionMode SSAS configuration setting.
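To make the numbers and the second option concrete, here is a small Python sketch. The first function just restates the default pool size rule from above. The second builds an XMLA processing batch with MaxParallel set on the Parallel element. The function names and the `SalesDW` database ID are hypothetical, and the batch does a ProcessFull of the whole database only to keep the example short.

```python
import xml.etree.ElementTree as ET

# XMLA engine namespace used by Analysis Services batch commands.
XMLA_NS = "http://schemas.microsoft.com/analysisservices/2003/engine"


def default_processing_threads(logical_cores):
    """Pool size when ThreadPool\\Process\\MaxThreads is left at 0."""
    return max(64, logical_cores)


def build_process_batch(database_id, max_parallel):
    """Build an XMLA Batch that fully processes one database,
    capping the number of parallel jobs with MaxParallel."""
    ET.register_namespace("", XMLA_NS)  # emit a default xmlns, no prefix
    tag = lambda name: f"{{{XMLA_NS}}}{name}"

    batch = ET.Element(tag("Batch"))
    parallel = ET.SubElement(
        batch, tag("Parallel"), {"MaxParallel": str(max_parallel)}
    )
    process = ET.SubElement(parallel, tag("Process"))
    obj = ET.SubElement(process, tag("Object"))
    ET.SubElement(obj, tag("DatabaseID")).text = database_id
    ET.SubElement(process, tag("Type")).text = "ProcessFull"
    return ET.tostring(batch, encoding="unicode")


if __name__ == "__main__":
    print(default_processing_threads(32))      # the 32-logical-core server above
    print(build_process_batch("SalesDW", 4))   # hypothetical database ID
```

The generated XMLA can be pasted into an XMLA query window in SSMS. Which MaxParallel value works best depends on your hardware and partition layout, so treat the 4 here as a placeholder to tune.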

Also, I recommend you load test your production server to ensure that it has enough capacity. I did a “Can Your BI Solution Scale” presentation at TechEd 2011 about load testing SSAS and SSRS that shows how this could be done.

Many thanks to Akshai Mirchandani from the SSAS product team for shedding light in dark places.