More Details about BISM (UDM 2.0)

It looks like this year SQL PASS was the one to go. A must read blog from Chris Webb who apparently shares the same feelings and emotions about the seismic change in Analysis Services 11 as I do. A must read…

It looks like this year SQL PASS was the one to go. A must read blog from Chris Webb who apparently shares the same feelings and emotions about the seismic change in Analysis Services 11 as I do. A must read…

Now that the official word got out during the Ted Kummert’s keynote today at SQL PASS, I can open my mouth about Crescent – the code name of an ad-hoc reporting layer that will be released in the next version of SQL Server – Denali. Crescent is a major enhancement to Reporting Services and Microsoft Self-Service BI strategy. Up to now, SSRS didn’t have a web-based report designer. Denali will change that by adding a brand new report authoring tool that will be powered by Silverlight. So, this will be the fifth report designer after BIDS, Report Builder 1.0 (not sure if RB 1.0 will survive SQL 11), Report Builder 3.0, Visual Studio Report Designer.

Besides brining report authoring to the web, what’s interesting about Crescent is that it will redefine the report authoring experience and even what a report is. Traditionally, Reporting Services reports (as well as reports from other vendors) have been “canned”, that is, once you publish the report, its layout becomes fixed. True, you could implement interactive features to jazz up the report a bit but changes to the original design, such as adding new columns or switching from a tabular layout to a crosstab layout, requires opening the report in a report designer, making the changes, and viewing/republishing the report. As you would recall, each of the previous report designers would have separate design and preview modes.

Crescent will change all of this and it will make the reporting experience more interactive and similar to Excel PivotTable and tools from other vendors, such as Tableau. Those of you who saw the keynote today got a sneak preview of Crescent and its capabilities. You saw how the end user can quickly create an interactive report by dragging metadata, a-la Microsoft Excel, and then with a few mouse clicks change the report layout without switching to design mode. In fact, Crescent doesn’t have a formal design mode.

How will this magic happen? As it turns out, Crescent will be powered by a new ad-hoc model called Business Intelligence Semantic Model (BISM) that probably will be a fusion between SMDL (think Report Builder models) and PowerPivot, with the latter now supporting also relational data sources. The Amir’s demo showed an impressive response time when querying billion rows from a relational database. I still need to wrap my head around the new model as more details become available (stay tuned) but I am excited about it and the new BI scenarios it will make possible besides traditional standard reporting. It’s great to see the Reporting Services and Analysis Services teams working together and I am sure good things will happen to those who wait. Following the trend toward SharePoint as a BI hub, Crescent unfurtantely will be available only in SharePoint mode. At this point, we don’t know what Reporting Services and RDL features it will support but one can expect tradeoffs given its first release, brand new architecture and self-service BI focus.

So, Crescent is a code name for a new web-based fusion between SSRS and BISM (to be more accurate Analysis Services in VertiPaq mode). I won’t be surprised if its official name will be PowerReport. Now that I picked your interest, where is Crescent? Crescent is not included in CTP1. More than likely, it will be in the next CTP which is expected around January timeframe.

Greg Galloway just published a ResMon cube sample on CodePlex that aggregates execution statistics (rolls up information about Analysis Services such as memory usage by object, perfmon counters, aggregation hits/misses, and current session stats) from Analysis Services dynamic management views (DMVs) and makes it easily available for slicing and dicing in a cube. I think this will be a very useful tool to analyze the runtime performance of an Analysis Services server or as a learning tool to understand how to work with DMVs. Kudos to Greg!

Mark Tabladillo delivered a great presentation about using Excel for data mining at our last Atlanta BI SIG meeting. The lack of an Excel 2010 x64 DM add-in came up. There was a question from the audience whether Microsoft has abandoned DM technology since it stopped enhancing it. A concern was also raised that Microsoft might have also neglected the corporate BI vision in favor of self-service BI.

That’s definitely not the case. I managed to get a clarification from the Microsoft Analysis Services team that Corporate BI will be a major focus in “Denali”, which is the code name for the next version of SQL Server – version 11. As far as the long-overdue x64 DM add-in for Excel, Microsoft is working on it and it will be delivered eventually. Meanwhile, the 2007 add-in works with the x32 version of Excel 2010.

For those of you going to SQL PASS (unfortunately, I won’t be one of them), Microsoft will announce important news about Denali and hopefully give a sneak preview about the cool BI stuff that is coming up.

Analysis Services has a very efficient processing architecture and server is capable of processing rows as fast as the data source can provide. You should see processing rate in the ballpark 40-50K rows/sec or even better. One of my customers just bought a new shiny HP ProLiant BL680c server only to find out that processing time went three times higher than the old server. I did a simple test where I asked to execute the processing on both the old server and the new server. The query on the old server would return all rows within 2 minutes, while the same query would execute for 20 minutes which averages to about 4K rows processed/sec. This test ruled out Analysis Services right off the bat. It was clear that the network is the bottleneck. Luckily, the server had a lot of processing power, so processing wasn’t ten times slower.

As it turned out, the company has a policy to cap the network traffic at the switch for all non-production subnets or security and performance reasons. Since the new server was still considered a non-production server, it was on plugged in to a restricted network segment. The moral of this story is that often basic steps could help you isolate and troubleshoot “huge” issues.

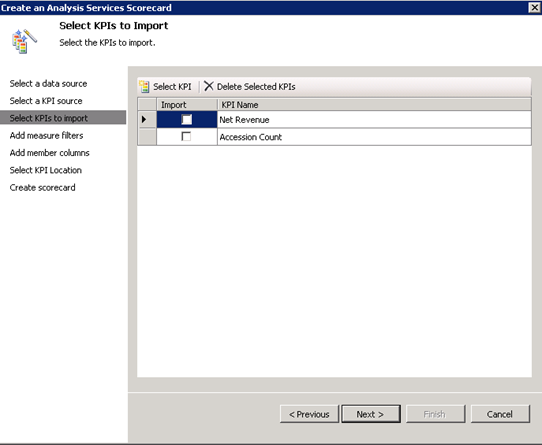

Forging ahead through the unchartered land of PerformancePoint 2010, I ran into a snag today. Attempting to import Analysis Services KPIs resulting in the following error:

An unexpected error occurred. Error 47205.

Exception details:

System.IO.FileNotFoundException: Could not load file or assembly ‘Microsoft.AnalysisServices, Version=10.0.0.0, Culture=neutral, PublicKeyToken=89845dcd8080cc91’ or one of its dependencies. The system cannot find the file specified.

File name: ‘Microsoft.AnalysisServices, Version=10.0.0.0, Culture=neutral, PublicKeyToken=89845dcd8080cc91’

at Microsoft.PerformancePoint.Scorecards.Server.ImportExportHelper.GetImportableAsKpis(DataSource asDataSource)

at Microsoft.PerformancePoint.Scorecards.Server.PmServer.GetImportableAsKpis(DataSource dataSource)

Since in our case, PerformancePoint was running on a SharePoint web front end server (WFE) which didn’t have any of the SQL Server 2008 components installed, it was clear that PerformancePoint was missing a connectivity component. Among many other things, I tried installing the Analysis Services Management Objects (AMO) from the SQL Server 2008 Feature Pack but the error won’t go away. I fixed it by running the SQL Server 2008 setup program and installing the Client Tools Connectivity option only. Then, the KPIs magically appear in the Scorecard Wizard.

I am back from vacation in Florida and I am all rested despite the intensive sun exposure and the appearance of some tar from the oil spill. I have scheduled the next two runs of my online training classes:

Applied Reporting Services 2008 Online Training Class

Date: June 28 – June 30, 2010

Time: Noon – 4:30 pm EDT; 9 am – 1:30 pm PDT

Applied Analysis Services 2008 Online Training Class

Date: July 7 – July 9, 2010

Time: Noon – 4:30 pm EDT; 9 am – 1:30 pm PDT

Yes, I am also tossing in an hour of consulting with me to spend it any way you want absolutely FREE! This is your chance to pick up my brain about this nasty requirement your boss wants you to implement. So, sign up while the offer lasts. Don’t forget that you can request custom dates if you enroll several people from your company.

The other day I decided to spend some time and educate myself better on the subject of aggregations. Much to my surprise, no matter how hard I tried hitting different aggregations in the Internet Sales measure group of the Adventure Works cube, when I got the Get Data From Aggregation event in the SQL Profiler, it always indicated an Aggregation 1 hit.

Aggregation 1 100,111111111111111111111

I found this strange given that all partitions in the Internet Sales measure group share the same aggregation design which has 57 aggregations in the SQL Server R2 version of Adventure Works. And I couldn’t relate the Aggregation 1 aggregation any of the Internet Sales aggregations. BTW, the aggregation number reported by the profiler is a hex number, so Aggregation 16 is actually Aggregation 22 in the Advanced View of the Aggregations tab in Cube Designer. With some help from Chris Webb and Darren Gosbell, we realized that Aggregation 1 is an aggregation from the Exchange Rates measure group. Too bad the profiler doesn’t tell you which measure group the aggregation belongs to. But since the Aggregation 1 vector has only two comma-separated sections, you can deduce that the containing measure group references two dimensions only. This is exactly the case of the Exchange Rates measure group because it references the Date and Destination Currency dimensions only.

But this still doesn’t answer the question why the queries can’t hit any of the other aggregations. As it turned out, the Internet Sales and Reseller Sales measure group includes measures with measure expressions to convert currency amounts to USD Dollars, such as Internet Sales, Reseller Sales, etc. Because the server resolves measure expressions at the leaf members of the dimensions at run time, the server cannot benefit from any aggregations. Basically, the server says “this measure value is a dynamic value and pre-aggregated summaries won’t help here”. More interestingly, even if the query requests another measure that doesn’t have a measure expression from these measure groups, it won’t take advantage of any aggregations that belong to these measure groups. That’s because when you query for one measure value in a measure group, the server actually returns all the measure values in that measure group. The Storage Engine always fetches all measures, including a measure based on a measure expression – even when it may not have been requested by the current query. So, it doesn’t really matter in this case that you requested a different measure – it will always aggregate from leaves.

To make the story short, if a measure group has measures with measure expressions, don’t bother adding aggregations to that measure group. So, all these 57 aggregations in Internet Sales and Measure Sales that the Adventure Works demonstrates are pretty much useless. To benefit from an aggregation design in these measure groups, you either have to move measures with expressions to a separate measure group or don’t use measure expressions at all. As a material boy, who tries to materialize calculations as much as possible for better performance, I’d happily go for the latter approach.

BTW, while we are on the subject of aggregations, I highly recommend you watch Chris Webb’s excellent Designing Effective Aggregation with Analysis Services presentation to learn what the aggregations are and how to design them. Finally, unlike the partition size recommendations that I discussed in this blog, aggregations are likely to help with much smaller datasets. Chris mentions that aggregations may help even with 1-2 million rows in the fact table. The issue with aggregations is often about building the correct aggregations, and in some cases to be careful not to build too many aggregations.

As Analysis Services users undoubtedly know, partitioning and aggregations are the two core tenants of a good Analysis Services data design. Or, at least they have been since its first release. The thing though is that a lot of things have changed since then. Disks and memory got faster and cheaper, and Analysis Services have been getting faster and more intelligent with each new release. You should catch up with these trends, of course, and re-think some old myths, the most prevalent being that you must partition and add aggregations to every cube so you can get faster queries.

In theory, partitioning reduces the data slice the storage engine needs to cover and the smaller the data slice, the less time the SE will spent reading data. But there is a great deal of confusion about partitioning through the BI land. Folks don’t know when to partition and how to partition. They just know that they have to do it since everybody does it. The feedback from the Analysis Services team contributes somewhat to this state of affairs. For example, the Microsoft SQL Server 2005 Analysis Services Performance Guide advocates a partitioning strategy for 15-20 million rows per partitions or 2GB as a maximum partition size. However, the Microsoft SQL Server 2008 Analysis Services Performance Guide is somewhat silent on the subject of performance recommendations for partitioning. The only thing you will find is:

For nondistinct count measure groups, tests with partition sizes in the range of 200 megabytes (MB) to up to 3 gigabytes (GB) indicate that partition size alone does not have a substantial impact on query speeds.

Notice the huge data size range – 200 MB to 3 GB. Does this make you doubtful about partitioning for getting faster query times? And why did they remove the partition size recommendation? As it turns out, folks with really large cubes (billions of rows) would follow this recommendation blindly and end up with thousands of partitions! This is even a worse problem than having no partitions at all because the server trashes time for managing all these partitions. Unfortunately (or fortunately), I’ve never had to chance to deal with such massive cubes. Nevertheless, I would like to suggest the following partitioning strategy for the small to mid-size cubes:

If the above sounds too complicated, here are some general recommendations that originate from the Analysis Services team to give you rough size guidelines about partitioning:

One area where partitioning does help is manageability if this is important for you. For example, partitions can be processed independently, incrementally (e.g. add only new data), have different aggregation designs, combine fact tables (e.g. actual, budget, and scenario tables in one measure group). Partitioning can help you also reduce the overall cube processing time with multiple CPUs and cores. Processing is a highly parallel operation and partitions are processed in parallel. So, you may still want to add a few partitions, e.g. partition by year, to get the cube to process faster. Just be somewhat skeptical about partitioning for improving the query performance.

There is still time to register for the online Applied Analysis Services 2008 class run on May 17th. No travel, no hotel expenses, just 100% content delivered right to your desktop! This intensive 3-day online class (14 training hours) teaches you the knowledge and skills to master Analysis Services to its fullest. Use the opportunity to ask questions and learn best practices.