SaaS Winners – InTempo Software

I have ranted many times about SaaS vendors preventing direct access to data in its native storage, which is typically a relational database. It’s sort of like going to a bank, handing over your money, and being told that you can only get it back in cash, in Bitcoin, in ₿100 bills but no more than ₿1,000 per withdrawal, or whatever madness suits the bank best. I wonder how long this bank would stay in business. Yet many companies just hand over the keys to the data kingdom to a SaaS vendor and then wonder how to get their “precious” back so they can build an EDW and enable modern analytics. Since when did direct access become an anathema perpetuated by mega software vendors, including Microsoft? I’ll repeat that there are no engineering reasons to prevent direct access to your data, even if you must pay more to “isolate” you from other customers for security, performance, liability, or other “tradeoffs” of your choosing the SaaS path.

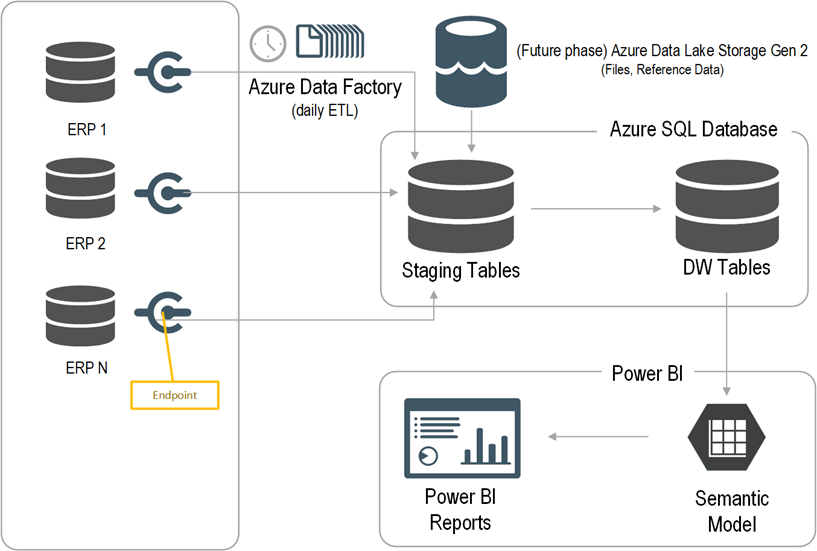

However, there are rays of sunshine here and there. In a recent integration project, I had to extract data from the InTempo ERP cloud software. Among other integration options, InTempo supports ODBC access to their IBM Db2 database over VPN connectivity. Not only did InTempo give us direct access to the ERP data, but they also provided support services to help with the SQL queries. I couldn’t believe my luck. Finally, an enlightened vendor! On the downside, we had to send freeform SELECT statements down the wire from Azure Data Factory (ADF). I personally would have preferred an option to package them in SQL views residing in the database, but that would be pushing my luck.
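To make the "freeform SELECT over ODBC" pattern concrete, here is a minimal Python sketch of the same idea outside ADF. It is only an illustration, not what the project ran: the DSN name `INTEMPO_DB2`, the `erp.invoices` table, and its columns are hypothetical, and it assumes the third-party `pyodbc` package plus an ODBC data source already configured for the Db2 database and reachable over the VPN.

```python
# Hypothetical DSN; the real name is whatever is registered in the ODBC manager.
DSN_NAME = "INTEMPO_DB2"

# Hypothetical table and columns, used only to show a parameterized freeform SELECT.
INVOICE_QUERY = """
SELECT invoice_id, customer_id, invoice_date, amount
FROM erp.invoices
WHERE invoice_date >= ?
"""


def build_connection_string(dsn, user, password):
    """Build an ODBC connection string from the standard DSN/UID/PWD keywords."""
    return f"DSN={dsn};UID={user};PWD={password}"


def fetch_rows(conn_str, sql, params=()):
    """Run a SELECT over ODBC and return the rows as a list of dicts."""
    import pyodbc  # third-party; imported lazily so the helpers above work without it

    conn = pyodbc.connect(conn_str, readonly=True)
    try:
        cursor = conn.cursor()
        cursor.execute(sql, *params)
        columns = [col[0] for col in cursor.description]
        return [dict(zip(columns, row)) for row in cursor.fetchall()]
    finally:
        conn.close()
```

With a working data source, a call might look like `fetch_rows(build_connection_string(DSN_NAME, user, password), INVOICE_QUERY, ("2024-01-01",))`. If the vendor allowed views, the query text would shrink to a plain `SELECT` against the view, and the business logic would live in the database instead of in the integration pipeline.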

Direct access to your data should be at the top of your SaaS vendor selection criteria. TIP: if you hear “API” in any flavor (REST, OData, SOAP, etc.), run for the exit. I have had nothing but horrible experiences taking that path, even with Microsoft Dynamics APIs. “Data lake” is a tad better, but your best option is still direct access to data in its native storage to avoid excessive integration effort and reduce data latency.

Enterprise Resource Planning (ERP) systems capture a wealth of information from the main business processes within an organization, such as Sales, Inventory, Orders, etc. Therefore, a BI journey typically starts with analyzing the ERP data, with revenue analytics usually taking center stage because of its vital metrics, such as Revenue, Margin, and Profit. In fact, this scenario has repeated so often over the past few years that Prologika has developed a fast-track implementation for ERP analytics. The client presented in this case study had more complicated integration needs, but other projects for both larger and smaller clients in the same or other industries have benefited from the same methodology.
