Prologika Newsletter Winter 2025

If Microsoft Fabric is in your future, you need a strategy for getting your data into Fabric OneLake. That’s because the holy grail of Fabric is the Delta Parquet file format. The good news is that all Fabric data ingestion options (Dataflows Gen 2, pipelines, Copy Job and notebooks) support this format and the Microsoft V-Order extension that’s important for Direct Lake performance. Fabric also supports mirroring data from a growing list of data sources. This could be useful if your data is outside Fabric, such as an EDW hosted in Google BigQuery, which is the scenario discussed in this newsletter.

Avoiding mirroring issues

A recent engagement required replicating some DW tables from Google BigQuery to a Fabric Lakehouse. We considered the Fabric mirroring feature for Google BigQuery (back then in private preview, now in public preview) and learned some lessons along the way:

1. 400 Error during replication configuration – Caused by attempting to mirror a read-only GBQ dataset that was linked to another GBQ dataset through a broken link.

2. Internal System Error – Again caused by GBQ linked datasets, which are read-only. Fabric mirroring requires GBQ change history to be enabled on tables so that it can track changes and mirror only incremental changes after the initial load.

3. (Showstopper for this project) Security permissions – The two permissions that raised security red flags are bigquery.datasets.create and bigquery.jobs.create. To grant those permissions, you must assign one of these BigQuery roles:

• BigQuery Admin

• BigQuery Data Editor

• BigQuery Data Owner

• BigQuery Studio Admin

• BigQuery User

All these roles grant other permissions as well, and the client was cautious about data security. In the end, we used a nightly Fabric Copy Job to replicate the data.
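For the change history requirement mentioned in item 2, here is a minimal sketch of how the DDL could be generated for a list of tables. It assumes the BigQuery table option is `enable_change_history` and that the tables live in a regular, writable dataset (not a read-only linked dataset). The project, dataset and table names are hypothetical placeholders.

```python
def change_history_ddl(project: str, dataset: str, tables: list[str]) -> list[str]:
    """Build one ALTER TABLE statement per table to turn on change history.

    Assumption: the BigQuery table option is named enable_change_history.
    The statements are only generated here, not executed.
    """
    return [
        f"ALTER TABLE `{project}.{dataset}.{table}` "
        "SET OPTIONS (enable_change_history = TRUE);"
        for table in tables
    ]


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    for statement in change_history_ddl("my-project", "dw", ["FactSales", "DimCustomer"]):
        print(statement)
```

The generated statements can be reviewed by the BigQuery administrator before anyone runs them, which fits a client that is cautious about security.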

Fabric Copy Job Pros and Cons

The client was overall pleased with the Fabric Copy Job.

Pros

- 250 million rows replicated in 30-40 seconds!

- A single job can replicate all tables in Overwrite mode.

- In the simplest case, you don’t need to create pipelines.

Cons

The Copy Job is a work in progress and subject to various limitations.

- No incremental extraction

- You can’t mix different load options (Append and Overwrite) in one job, so you must split tables into separate jobs

- No custom SQL SELECT when copying multiple tables

- (Bug) Lost explicit column bindings when making changes

- Cannot change the job’s JSON file

- The user interface is clunky and difficult to work with

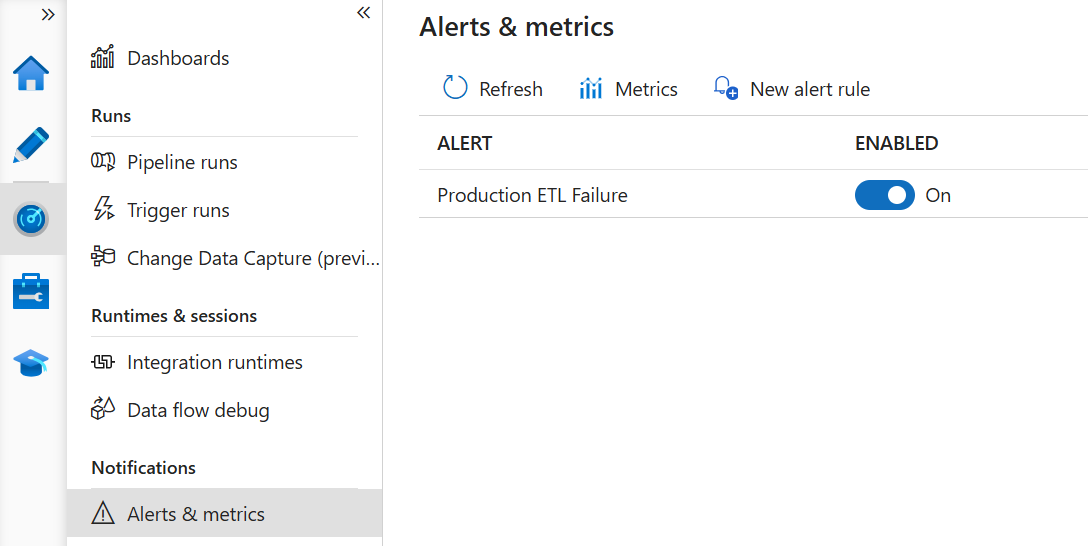

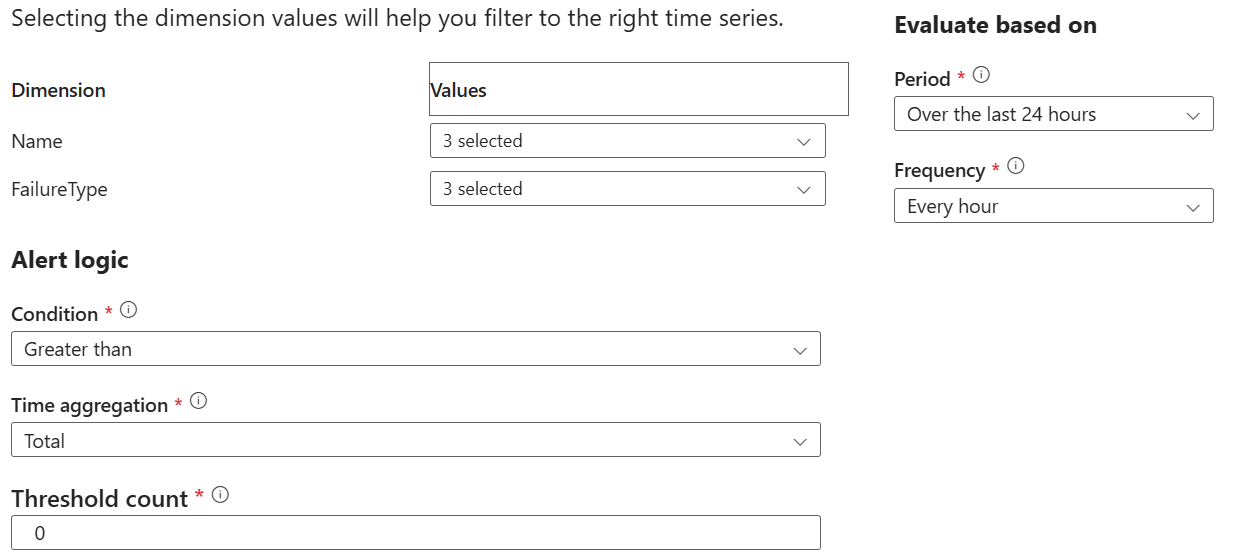

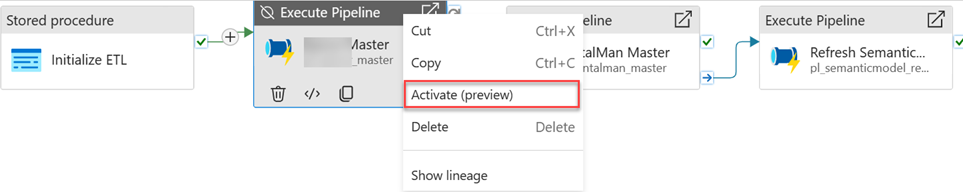

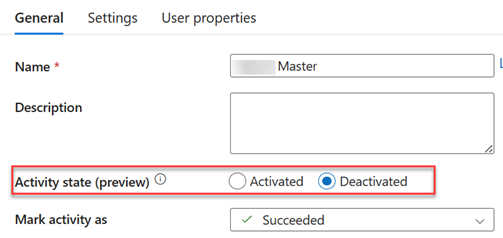

- No failure notification mechanism. As a workaround, add the Copy Job to a data pipeline or call it via the REST API
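The REST API workaround for the missing failure notification could look roughly like the sketch below. It is not a definitive implementation. It assumes the Copy Job is started through the generic Fabric "run on demand item job" endpoint, that the `jobType` value is supplied by the caller (we don't state the right value for Copy Job here), and that a job instance reports statuses such as `Completed`, `Failed` and `Cancelled`. The `get_status` and `notify` callables are hypothetical hooks: the first would wrap an authenticated GET on the job instance, the second would send an email or Teams message.

```python
import time
from typing import Callable

FABRIC_API = "https://api.fabric.microsoft.com/v1"

# Assumed terminal statuses of a Fabric job instance.
TERMINAL_STATUSES = {"Completed", "Failed", "Cancelled", "Deduped"}


def run_job_url(workspace_id: str, item_id: str, job_type: str) -> str:
    """Build the URL to POST to in order to start the job (assumed endpoint shape)."""
    return (
        f"{FABRIC_API}/workspaces/{workspace_id}/items/{item_id}"
        f"/jobs/instances?jobType={job_type}"
    )


def wait_for_job(
    get_status: Callable[[], str],
    notify: Callable[[str], None],
    poll_seconds: float = 30.0,
    max_polls: int = 120,
) -> str:
    """Poll the job status and call notify() unless the job completes successfully."""
    for _ in range(max_polls):
        status = get_status()
        if status in TERMINAL_STATUSES:
            if status != "Completed":
                notify(f"Copy Job ended with status {status}")
            return status
        time.sleep(poll_seconds)
    # The job never reached a terminal status within the polling window.
    notify("Copy Job did not finish within the polling window")
    return "TimedOut"
```

Keeping the HTTP call and the notification channel behind callables means the polling logic can be tested without a Fabric workspace, and the same function works whether the alert goes to email, Teams or a log.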

Summary

In summary, the built-in Fabric mirroring for Google BigQuery could be useful for real-time data replication. However, it relies on GBQ change history, which requires certain permissions. Kudos to Microsoft for their excellent support during the private preview.