The Science of Counting

I’m watching the witness testimonies on election irregularities in Georgia (the state where I live). I’m shocked at how this election became such a mess and an international embarrassment. The United States spent $10 billion on the 2020 election. Georgia alone spent more than $100 million on machines that security experts said can be hacked in minutes. If we add the countless man-hours, investigations, and litigation, these numbers will probably double by the time the dust settles.

What did we get back? Based on what I’ve heard, 50% of Americans believe this election was rigged, just as 50% believed so in 2016. The 2020 election of course added more opportunities for abuse because of the large number of mail-in ballots. It’s astonishing how manual and complicated the whole process is, not to mention that each state does things differently. But the more human involvement and moving parts, the larger the attack surface and the higher the probability of intentional or unintentional mishandling due to “human nature”, improper training, or total disregard of the rules. I cast an absentee ballot without knowing how it was applied.

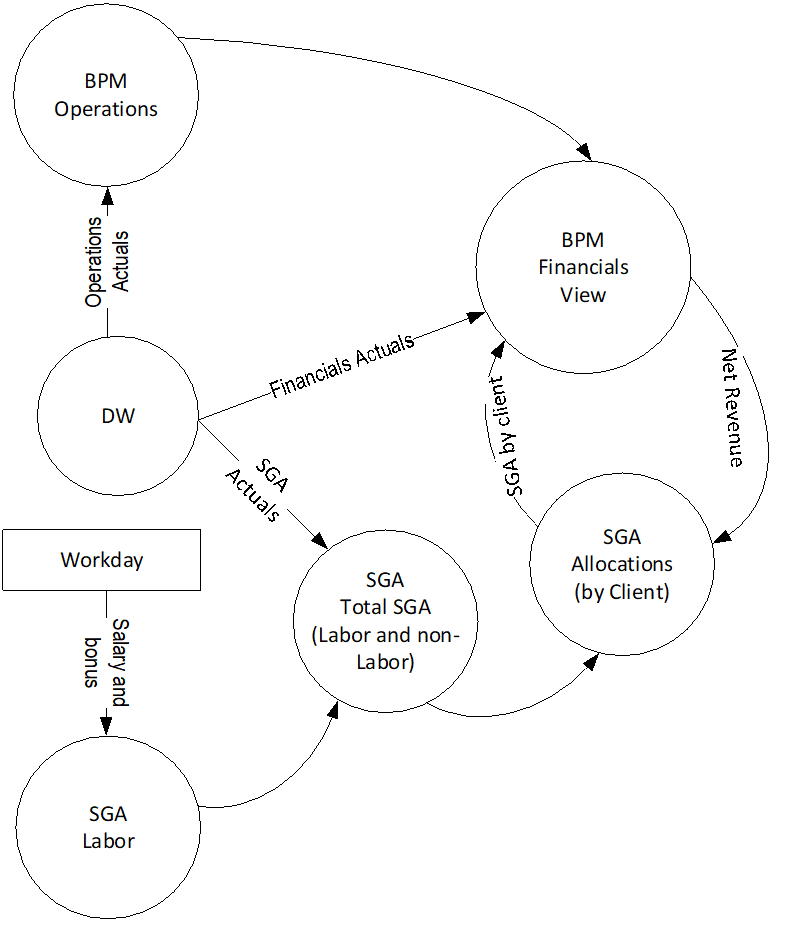

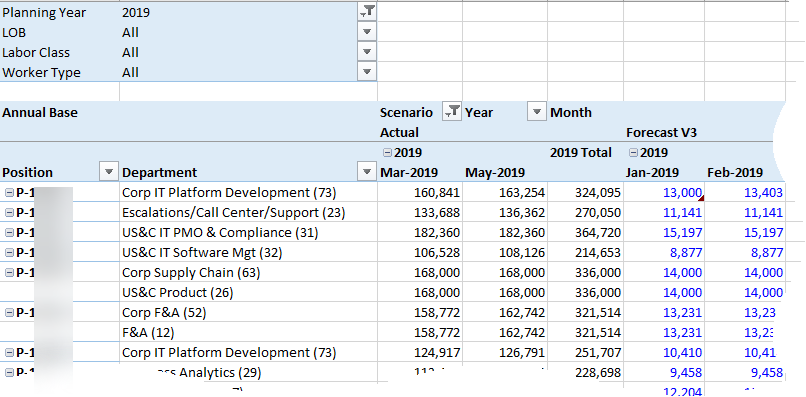

So, your humble correspondent thinks that it’s about time to computerize voting. Where humans fall short, machines take over. Unless hacked, algorithms don’t make “mistakes”. How about a modern federal Internet voting system that standardizes voting across all states? If the government can put together a system for our obligation to pay taxes, it should be able to do the same for the right to vote. If the most advanced country can get a vaccine done in six months, we should be able to figure out how to count votes. Just like in data analytics, elections will benefit from a single version of the truth. Despite the security concerns surrounding a web app, I believe it would be far more secure than the charade that’s going on right now. In this world where no one trusts anyone, we apparently can’t trust bureaucrats to do things right.

Other advantages:

- Anybody can vote from any device, so no voter “suppression”

- Better authentication (face recognition and capture, cross-check with other systems, ML, etc.)

- Vote confirmation

- Ability to centralize security surveillance and monitoring by an independent committee

- Report results in minutes

- Additional options for analytics on post-election results

- Save an enormous amount of money and energy!

I’m just saying …