Atlanta Microsoft BI Group Meeting on August 7th (Introducing Microsoft Fabric)

Atlanta BI fans, please join us for the next meeting on Monday, August 7th, at 6:30 PM ET. James Serra (Data & AI Solution Architect at Microsoft) will join us remotely to introduce us to Microsoft Fabric. For more details and to sign up, visit our group page.

PLEASE NOTE A CHANGE TO OUR MEETING POLICY. WE HAVE DISCONTINUED ONLINE MEETINGS VIA TEAMS. THIS GROUP MEETS ONLY IN PERSON. WE WON’T RECORD MEETINGS ANYMORE. THEREFORE, AS IN PRE-PANDEMIC TIMES, PLEASE RSVP AND ATTEND IN PERSON IF YOU ARE INTERESTED IN THIS MEETING.

Presentation: Introducing Microsoft Fabric

Delivery: Speaker will join us remotely via Teams

Date: August 7th

Time: 18:30 – 20:30 ET

Level: Beginner

Food: Sponsor wanted

Agenda:

18:15-18:30 Registration and networking

18:30-19:00 Organizer and sponsor time (events, Power BI latest, sponsor marketing)

19:00-20:15 Main presentation

20:15-20:30 Q&A

ONSITE

Improving Office

11675 Rainwater Dr

Suite #100

Alpharetta, GA 30009

Overview: Microsoft Fabric is the next version of Azure Data Factory, Azure Data Explorer, Azure Synapse Analytics, and Power BI. It brings all these capabilities together into a single unified analytics platform that goes from the data lake to the business user in a SaaS-like environment. The vision of Fabric is to be a one-stop shop for all the analytical needs of every enterprise and one platform for everyone from a citizen developer to a data engineer. Fabric will cover the complete spectrum of services, including data movement, data lake, data engineering, data integration and data science, observational analytics, and business intelligence. With Fabric, there is no need to stitch together different services from multiple vendors. Instead, the customer enjoys an end-to-end, highly integrated, single offering that is easy to understand, onboard, create, and operate.

This is a hugely important new product from Microsoft, and I will simplify your understanding of it via a presentation and demo.

Speaker: James Serra is a big data and data warehousing solution architect at Microsoft, where he has worked for most of the last nine years. He is a thought leader in the use and application of Big Data and advanced analytics, including data architectures such as the modern data warehouse, data lakehouse, data fabric, and data mesh. Previously he was an independent consultant working as a Data Warehouse/Business Intelligence architect and developer. He is a prior SQL Server MVP with over 35 years of IT experience. He is a popular blogger (JamesSerra.com) and speaker, having presented at dozens of major events including SQLBits, PASS Summit, Data Summit, and the Enterprise Data World conference. He is the author of the upcoming book “Deciphering Data Architectures: Choosing Between a Modern Data Warehouse, Data Fabric, Data Lakehouse, and Data Mesh”.

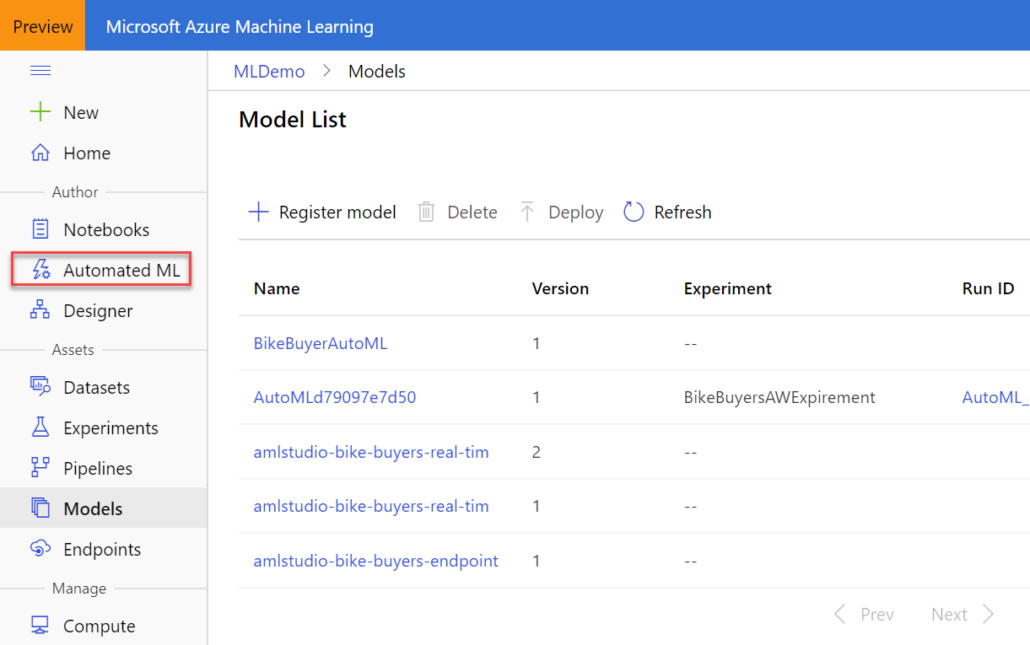

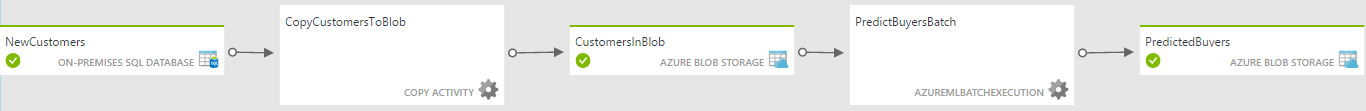

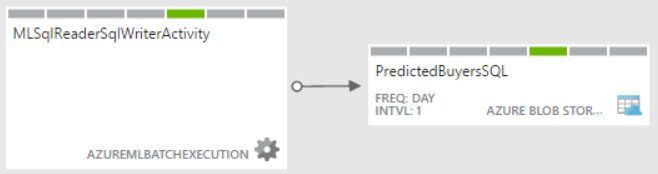

Did you know that, according to Gartner, at least five of the top 10 technology trends for 2020 will involve predictive analytics? The third on the list is “democratization”: delivering it to non-specialists. With the growing demand for predictive analytics, Automated Machine Learning (AutoML) aims to simplify and democratize predictive analytics so business users can create their own predictive models. The promise of AutoML is to bring predictive analytics to business users, just as Power BI democratizes data analytics, Power Apps democratizes app development, and Power Query democratizes data shaping and transformation.
