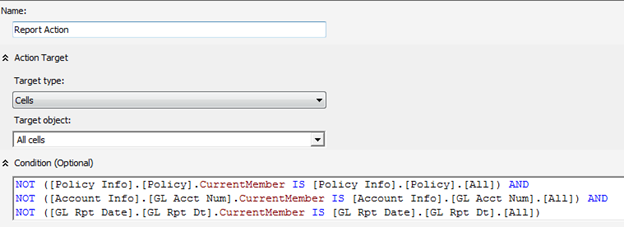

Scenario: A customer wants to have report row-level security and data-driven subscriptions. If you have used SSRS for a while, you will know that the cornerstone of report row-level security is User!UserID, which returns the user identity. But here is the catch: SSRS will refuse to create a data-driven subscription if a report has an expression with User!UserID.

Solution: How do we solve this horrible issue? A great hack is in order. We will disguise User!UserID in an embedded function inside the report, or in an external assembly, so SSRS can’t see it. (The reason why this function is named GetHashedUser, although no hashing is in place yet, will become obvious in a moment.)

    Public Function GetHashedUser() As String
        Return Report.User!UserID.ToLower()
    End Function

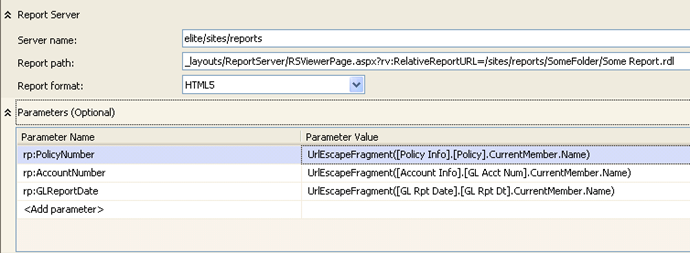

Believe it or not, this gets the data-driven subscription going. But there is another catch. A data-driven subscription runs in unattended mode, so there is no interactive user and you need to pass the user identity as a parameter. So, when the report is executed live, we want to pass the internal User!UserID to the report query or stored procedure. But when the report is delivered through a subscription, we want to pass the user identity as a parameter. A simple expression for the query parameter will do the trick.

    =IIf(Parameters!UserID.Value = "", Code.GetHashedUser(), Parameters!UserID.Value)

If the parameter is empty (live delivery), we call the GetHashedUser function; otherwise, we pass the parameter value to the database. So far so good, but we now have a security hole. There is nothing stopping the end user from spoofing the report parameter and passing another user’s identity to it to force the report to take the data-driven subscription path. To fix this, we need to obscure the identity so it is not passed around in plain text. I suggest a solution that hashes the user identity with SHA1 both in the report and in the database, so the query compares hashes instead of plain logins. This should keep spoofy users at bay.
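To make the hashing idea concrete, here is a minimal sketch of what a hashed GetHashedUser could look like in the report's embedded code. It is not the code from the download: the Salt constant, the choice of SHA1Managed, and the hex output format are my assumptions for illustration. Depending on the report server's code access security settings, the hashing might have to live in an external assembly instead.

```vb
' Hypothetical secret shared with the database (an assumption, not part of
' the original sample). Without one, anyone can compute the SHA1 of a login.
Private Const Salt As String = "replace-with-your-own-secret"

Public Function GetHashedUser() As String
    ' Lowercase the identity so the hash does not depend on login casing.
    Dim identity As String = CStr(Report.User!UserID).ToLower()

    ' UTF-16LE bytes, which is how SQL Server stores nvarchar values.
    Dim data As Byte() = System.Text.Encoding.Unicode.GetBytes(identity & Salt)

    ' SHA1Managed is the fully managed SHA1 implementation in the .NET Framework.
    Dim sha As New System.Security.Cryptography.SHA1Managed()
    Dim hash As Byte() = sha.ComputeHash(data)

    ' 20 hash bytes rendered as a 40-character uppercase hex string.
    Return System.BitConverter.ToString(hash).Replace("-", "")
End Function
```

The query parameter expression stays the same. The difference is that the subscription query now has to supply the hashed value in the UserID parameter, and the report query has to hash each stored login the same way before comparing. On the database side, HASHBYTES('SHA1', ...) over a lowercased nvarchar login plus the same salt should produce matching bytes, but treat that as something to verify against your own data rather than a given.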

You can download the complete code with an AdventureWorks sample report here (requires registration).

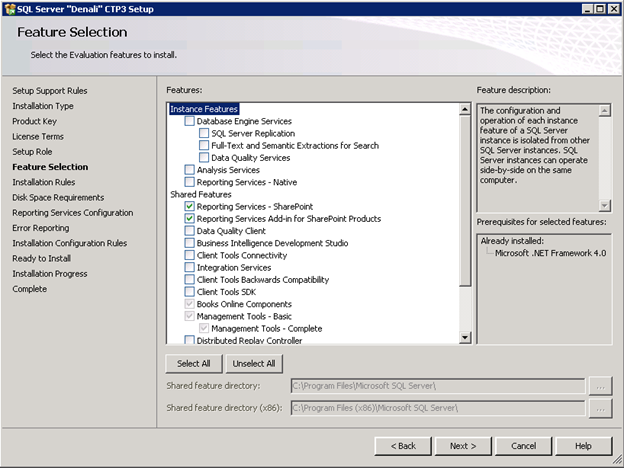

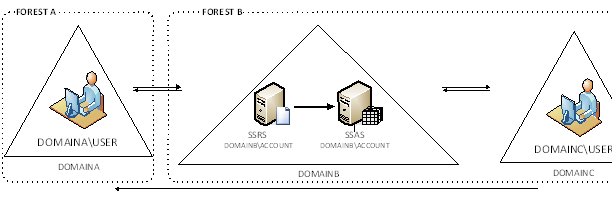

Note: The above solution assumes a report connection string with stored credentials, which is what you would typically have for T-SQL (relational) reports. What about SSAS with Windows and dimension security? Not a good story, and no changes in Denali unless you vote for the following suggestions on Connect:

- Enabling Subscriptions for non-stored credentials reports only has 3 votes on Connect (see link)

- Fixing the SSAS connection string issue has only 4 votes on Connect (see link)

- Enabling Shared Data Sources to have Expression Based Connection strings has only 4 votes on Connect (see link)

- Can’t create a subscription if data source is set to impersonate the authenticated user (see link)

SSAS and data-driven subscriptions have been the bane of my existence. Greg Galloway has an excellent writeup here. In a nutshell, clumsy workarounds include duplicating the report definitions and using an embedded data source with expression-based connection strings, or implementing the row-level security in the report (as you would do with relational reports). So, please vote! Let’s see if we can convince Microsoft that we do use SSAS and that this is a widespread issue that deserves to be fixed ASAP.