Every organization should have a strategy for self-service BI. As a rule of thumb, my advice is that 80% of the BI effort should be directed toward implementing organizational BI solutions (DW, ETL, data model, dashboards, big data, predictive analytics, and so on), while the remaining 20% should be left for self-service BI analytics. But which tool should you choose for self-service BI? With so many vendors on the market, it’s easy to get persuaded by marketing propaganda and eye candy. My advice would be to start with what you already have. And what you have is probably Excel. When I talk to clients, I often find that they don’t realize that most, if not all, of their self-service BI needs can be met by the Excel self-service BI capabilities, many of which are unmatched by the competition.

Microsoft has built a comprehensive self-service ecosystem, marketed under the Power BI umbrella, that warrants your serious interest for at least the following reasons:

Flexible Data Schema

Unlike other tools that are limited to importing a single dataset, Power Pivot allows you to import and relate multiple datasets so your business users can build sophisticated models on a par with organizational BI solutions.
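To make the idea of relating datasets concrete, here is a minimal sketch in plain Python (not Power Pivot itself). The table contents and the `customer_id`, `region`, and `amount` column names are hypothetical; the point is only that two separately imported datasets can be analyzed together once a relationship between them is defined.

```python
# Hypothetical sample data: two datasets imported separately.
customers = [
    {"customer_id": 1, "region": "East"},
    {"customer_id": 2, "region": "West"},
]
sales = [
    {"customer_id": 1, "amount": 100.0},
    {"customer_id": 2, "amount": 250.0},
    {"customer_id": 1, "amount": 50.0},
]


def total_by_region(customers, sales):
    """Follow the customer_id relationship to sum sales per region."""
    # Lookup side of the relationship: one row per customer.
    region_of = {c["customer_id"]: c["region"] for c in customers}
    totals = {}
    for row in sales:
        # Many side of the relationship: each sale points at one customer.
        region = region_of[row["customer_id"]]
        totals[region] = totals.get(region, 0.0) + row["amount"]
    return totals


print(total_by_region(customers, sales))
```

A single-dataset tool would need the region copied onto every sales row before import; with a relationship, each dataset stays in its own table.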

Easy Data Acquisition

Unlike other vendors who assume programming and scripting knowledge for data import, Microsoft self-service BI takes a wizard-driven approach. In fact, business users have several ways to bring data into the Excel data model depending on the data complexity, including Excel native data import (great for working with text files), Power Pivot (great for working with relational and other data sources), and Power Query (even more data sources). Currently, the preferred approach for best performance is to cache the data in the state-of-the-art xVelocity in-memory store, but Microsoft is adding pass-through query capabilities as well.

Data Transformation

I don’t think that there is another self-service tool on the market that allows you to transform the data before it’s imported. Power Query is to Microsoft self-service BI what SSIS is to organizational BI. It supports a variety of data transformation tasks, ranging from simple operations, such as combining and splitting columns, to advanced transformations written in the full-featured M language.

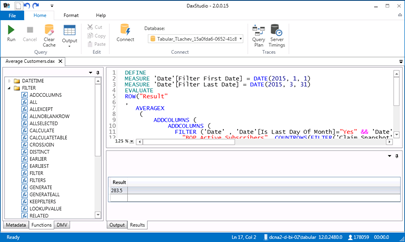
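For readers who haven’t seen this kind of data shaping, the sketch below shows what splitting and combining columns means, written in plain Python rather than M. The `split_column` and `combine_columns` helpers and the sample row are hypothetical illustrations, not Power Query functions.

```python
def split_column(rows, column, new_columns, delimiter=" "):
    """Replace one text column with several columns split on a delimiter."""
    result = []
    for row in rows:
        # Split into at most len(new_columns) parts.
        parts = row[column].split(delimiter, len(new_columns) - 1)
        new_row = {k: v for k, v in row.items() if k != column}
        new_row.update(zip(new_columns, parts))
        result.append(new_row)
    return result


def combine_columns(rows, columns, new_column, separator=" "):
    """Replace several text columns with one joined column."""
    result = []
    for row in rows:
        new_row = {k: v for k, v in row.items() if k not in columns}
        new_row[new_column] = separator.join(row[c] for c in columns)
        result.append(new_row)
    return result


# Hypothetical source row, shaped before it reaches the data model.
raw = [{"full_name": "Ada Lovelace", "city": "London", "country": "UK"}]
shaped = split_column(raw, "full_name", ["first_name", "last_name"])
shaped = combine_columns(shaped, ["city", "country"], "location", separator=", ")
print(shaped)
```

In Power Query, the same steps are available from the ribbon without writing any code, and each step is recorded so that it can be replayed when the data refreshes.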

Powerful Programming Language

A BI solution is rarely complete without business calculations that can range from simple aggregations to rather sophisticated business metrics. Data Analysis Expressions (DAX) is a full-featured analytical language whose equal you’ll be hard-pressed to find in competing products.

Flexible Reporting

With a few clicks, business users familiar with Excel can build Excel PivotTable and PivotChart reports connected to the Power Pivot data model. If you need more eye candy, Power View should help. While you might find that the current presentation toolset leaves room for improvement, check what’s coming up in Power BI vNext.

Performance and Scaling

Most popular self-service BI vendors offer desktop-based solutions that import data into files. When it comes to scaling out, these vendors fall short. By contrast, an organization that has adopted Microsoft self-service BI can easily scale out data models originated by the business to enterprise data models powered by Analysis Services and sanctioned by IT.

Q&A

Your users don’t know how to get started with the BI model someone else built? Everyone loves the Power BI Q&A feature that lets users type natural-language questions, such as “show me sales for last quarter”. None of the popular self-service BI vendors currently has a similar feature, although it looks like startups, such as ThoughtSpot, are racing to provide something comparable.

Flexible Deployment

Don’t use Excel or Office 365? Have SharePoint on premises or in the cloud? Don’t have or care about SharePoint? The coming Power BI vNext (free and paid versions) and Power BI Designer remove adoption barriers by allowing you to implement self-service BI outside Excel and SharePoint. My newsletter on this subject provides more details.

Cloud on Your Terms

If you are looking for a cloud-based BI platform, Power BI is just that. And with Power BI vNext, you don’t have to upload your data. Data can stay on premises, such as in a Tabular model or a cube, while your reports are deployed to the cloud. Moreover, you are always up to date with the latest features.

Great Partner Ecosystem

One of the greatest strengths of Microsoft is its large partner ecosystem and readily available talent.

Cost

OK, that’s the 11th reason, but it’s hard to pass up free. The Power tools (Power Pivot, Power Query, Power View, and Power Map) are either bundled with Excel or available for download, and the Power BI vNext cloud offering includes a free Power BI Designer and a free cloud edition.

While Microsoft self-service BI is not perfect, it warrants your interest if you are in the market for a self-service BI tool. Sometimes, all we need to do is appreciate what we already have.