Transactional Reporting with BISM Tabular

Continuing on BISM Tabular performance (see my Cube vs. VertiPaq Query Performance blog), I was disappointed to find that CTP3 brings no performance improvements to PowerPivot/BISM Tabular in the area of detailed (transactional-level) reporting, which has plagued me since the R2 release. Consider the following report built on top of an Adventure Works PowerPivot model.

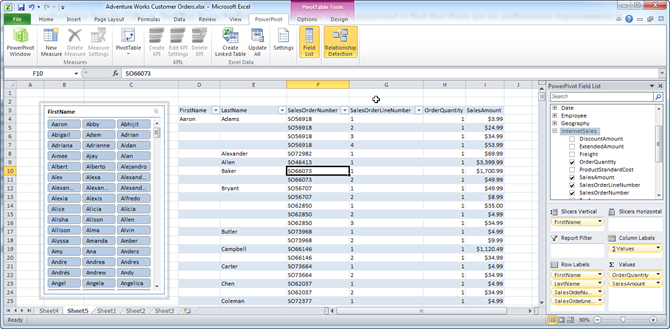

This report shows all Adventure Works customers and their order information by combining data from the Customer (18,000 rows) and InternetSales (60,000 rows) tables. Specifically, the report includes two columns from the Customer table and four columns from the InternetSales table. The report has a slicer to let the user filter by customer. Given that PowerPivot is an end-user tool, it’s likely that end users would build such reports.

Unfortunately, each time you click the slicer or filter a column, the report query takes about ten minutes (!) to execute. One would surely expect better performance from the “engine of the devil”. To be fair to VertiPaq, the Excel OLAP PivotTable is designed for summarized reports, not transactional reports. Still, I am not excluding the possibility of a bug in the VertiPaq engine, because a similar report connected to the Adventure Works cube takes milliseconds to execute. Here is the MDX statement generated by the PowerPivot report when I filter on Aaron:

SELECT {[Measures].[Sum of OrderQuantity 2],[Measures].[Sum of SalesAmount 2]} DIMENSION PROPERTIES PARENT_UNIQUE_NAME,HIERARCHY_UNIQUE_NAME ON COLUMNS , NON EMPTY Hierarchize(DrilldownMember(DrilldownMember(DrilldownMember(CrossJoin({[Customer].[FirstName].[All],[Customer].[FirstName].[FirstName].AllMembers}, {([Customer].[LastName].[All],[InternetSales].[SalesOrderNumber].[All],[InternetSales].[SalesOrderLineNumber].[All])}), [Customer].[FirstName].[FirstName].AllMembers, [Customer].[LastName]), [Customer].[LastName].[LastName].AllMembers, [InternetSales].[SalesOrderNumber]), [InternetSales].[SalesOrderNumber].[SalesOrderNumber].AllMembers, [InternetSales].[SalesOrderLineNumber])) DIMENSION PROPERTIES PARENT_UNIQUE_NAME,HIERARCHY_UNIQUE_NAME ON ROWS FROM (SELECT ({[Customer].[FirstName].&[Aaron]}) ON COLUMNS FROM [Sandbox]) CELL PROPERTIES VALUE, FORMAT_STRING, LANGUAGE, BACK_COLOR, FORE_COLOR, FONT_FLAGS

We would probably need to wait for the next version of Office to fix this performance issue at its core. Meanwhile, avoid Excel pivot reports and consider Crescent for detail-level reporting. Crescent generates native DAX queries and I verified that detail-level reporting is efficient.

Please vote for the Connect feedback I’ve submitted on this subject.

UPDATE 8/14/2011

The slowdown is caused by the subselect added to the generated MDX query when you apply a slicer or column filter. Specifically, when the query requests calculations, the server applies Autoexists before doing NON EMPTY, which can be rather expensive over a large space. Filtering on a field added to the Report Filter zone is very fast because the query uses a WHERE clause instead.
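To make the difference concrete, here is a simplified sketch of the two query shapes. These are hand-written illustrations and not the exact statements Excel generates: I dropped the drilldown and cell-property noise, and the level name [InternetSales].[SalesOrderLineNumber].[SalesOrderLineNumber] is an assumption that follows the naming pattern of the other columns in the query above. I have not timed these hand-written versions.

```mdx
-- Shape 1: slicer or column filter. The filter arrives as a subselect,
-- which is the slow pattern described in this update.
SELECT
  { [Measures].[Sum of OrderQuantity 2], [Measures].[Sum of SalesAmount 2] } ON COLUMNS,
  NON EMPTY
    [Customer].[LastName].[LastName].AllMembers
    * [InternetSales].[SalesOrderNumber].[SalesOrderNumber].AllMembers
    * [InternetSales].[SalesOrderLineNumber].[SalesOrderLineNumber].AllMembers
  ON ROWS
FROM ( SELECT ( { [Customer].[FirstName].&[Aaron] } ) ON COLUMNS FROM [Sandbox] )

-- Shape 2: Report Filter zone. The same filter arrives as a WHERE clause
-- (slicer axis), which is the fast pattern.
SELECT
  { [Measures].[Sum of OrderQuantity 2], [Measures].[Sum of SalesAmount 2] } ON COLUMNS,
  NON EMPTY
    [Customer].[LastName].[LastName].AllMembers
    * [InternetSales].[SalesOrderNumber].[SalesOrderNumber].AllMembers
    * [InternetSales].[SalesOrderLineNumber].[SalesOrderLineNumber].AllMembers
  ON ROWS
FROM [Sandbox]
WHERE ( [Customer].[FirstName].&[Aaron] )
```

Note that in the second shape FirstName sits on the slicer axis, so it cannot also appear on rows. That matches how a field behaves once you move it to the Report Filter zone.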