My TechEd 2011 Sessions Available Online

All TechEd 2011 breakout sessions are available online on the MSDN Channel 9 website. Here are the links to mine:

Top 10 Reasons to Upgrade to Microsoft SQL Server 2008/R2 Reporting Services

Enjoy and vote!

While I’ve been busy at TechEd talking to BI enthusiasts, professionals and wannabes and delivering sessions, the news is out about the new BI roadmap and BISM positioning. See:

So, I won’t repeat what’s already been said. Instead, I’ll attempt to summarize my thoughts about the new BI developments and give you some take-home points to help you plan future BI work.

Self-service BI – This is a no-brainer – PowerPivot and Excel. If you want to let business users analyze data on their own, look no further than Excel.

Team BI – How do business users share PowerPivot models with other users? SharePoint and PowerPivot of course. Another no-brainer.

Corporate BI – This is where things get trickier. Let’s go through a few scenarios:

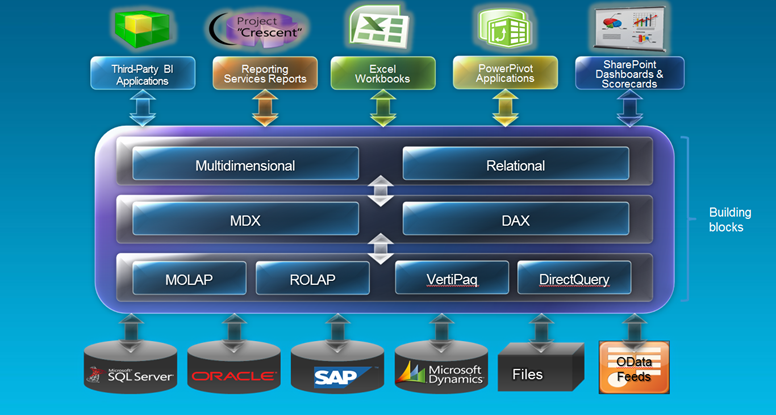

I’d like to indulge myself and think that one day, I hope in the not so distant future, BISM will evolve to become a true single model that delivers on the UDM promise and combines the best of OLAP and Tabular. Then, we would be able to pick and mix features from both the OLAP and in-memory paths, e.g. in-memory storage with MDX calculations, and use the best technology for the task at hand. Until then, we will have to choose between the multidimensional and tabular paths.

The slides and source code for my TechEd 2011 North America sessions are available for download on the Prologika website (requires registration). If you haven’t registered, please click the Join link in the upper right corner. My breakout sessions are:

DBI205 – Top 10 Reasons to Upgrade to Microsoft SQL Server 2008/R2 Reporting Services

Rich in demos, this session presents top reasons to upgrade to Microsoft SQL Server 2008 and SQL Server 2008 R2 versions of Reporting Services. Use this information to convince your boss that it’s time to upgrade and remove past limitations. If you already use these versions, you might discover features that can help you find ways to simplify report authoring and maintenance.

DBI330 – Can Your BI Solution Scale?

Your Business Intelligence solution is ready for prime-time but will it perform well? Join this session to learn techniques for testing the performance and scalability of Microsoft SQL Server Reporting Services (SSRS) and Microsoft SQL Server Analysis Services (SSAS).

I am watching the TechEd keynote, where it was announced that some 10,000 people are attending TechEd this year. On the BI side of things, Amir Netz, Distinguished Engineer at Microsoft, came on stage to unveil some of the new features coming in Denali and to demonstrate the Denali BI continuum story (PowerPivot, SharePoint with Crescent reporting, and Corporate BI). The Corporate BI model had some 2 billion rows cached in the in-memory VertiPaq engine. You will be able to use Visual Studio to “take over” from Excel and build models exceeding the Excel 2GB file size limit. Wait for more exciting news tomorrow at sessions DBI206 – What’s New in Microsoft SQL Server Code-Named “Denali” for SQL Server Analysis Services and PowerPivot and DBI211 – What’s New in Microsoft SQL Server Code-Named “Denali” for Reporting Services!

In the Windows Phone area, the interesting news was that an upgrade later this year will let mobile users integrate with Office 365 and Lync Mobile. Unfortunately, there is no news yet about making BI available on Windows Phone 7.

My TechEd sessions got finalized. This will be my sixth TechEd to attend and present at (this one conveniently located in my home city). This time I am honored to have three sessions (two breakout sessions and one interactive session). If you can’t attend them, you should be able to find me hanging around at the DBI booth.

| Code | Session | Day | Time |
| --- | --- | --- | --- |
| DBI205 | Top 10 Reasons to Upgrade to Microsoft SQL Server 2008/R2 Reporting Services (Breakout Session; primary speaker: Teo Lachev). Rich in demos, this session presents top reasons to upgrade to Microsoft SQL Server 2008 and SQL Server 2008 R2 versions of Reporting Services. Use this information to convince your boss that it’s time to upgrade and remove past limitations. If you already use these versions, you might discover features that can help you find ways to simplify report authoring and maintenance. | Monday, May 16 | 3:00 PM – 4:15 PM |
| DBI330 | Can Your BI Solution Scale? (Breakout Session; primary speaker: Teo Lachev). Your Business Intelligence solution is ready for prime-time but will it perform well? Join this session to learn techniques for testing the performance and scalability of Microsoft SQL Server Reporting Services (SSRS) and Microsoft SQL Server Analysis Services (SSAS). | Monday, May 16 | 4:45 PM – 6:00 PM |
| DBI378-INT | Microsoft SQL Server Reporting Services Tips and Tricks, How-to and Beyond (Interactive Discussion; primary speaker: Teo Lachev). Join this session to understand how Reporting Services 2008 and R2 can help you find solutions and workarounds for some of the features you requested the most. Learn report authoring and management tips and tricks that will help you bring your investment to the next level. | Thursday, May 19 | 2:45 PM – 4:00 PM |

Here are my DBI booth staffing times where I will be awaiting your BI questions:

Monday – 5:30 PM-7:30 PM

Tuesday – 12:30 PM-3:30 PM

Wednesday – 3:00 PM-5:00 PM

After dropping the Microsoft Business Intelligence Indexing Connector, Microsoft is working on an advanced content crawler, code-named Project Barcelona, that will index BI content, such as SQL Server, Excel, SharePoint, Reporting Services, Analysis Services, and SSIS. Although initially aimed at DBAs and ETL developers, its ambitions go well beyond that. According to the team blog, “At the end of the day, we want to build a set of tools that makes managing the modern enterprise data topology significantly easier – so we strongly believe we will need significant feedback before landing on the right design and feature set”. The blog doesn’t say when Barcelona will be released or how it will be implemented. I’d expect it to become a part of SharePoint and to be released alongside SQL 11, but this is just speculation on my part.

I’ve made a decision. My next book will be Applied Kerberos BI. Jokes aside, I’ve been doing a lot of security work lately to get Kerberos working for internal and external users. Here is my latest success story.

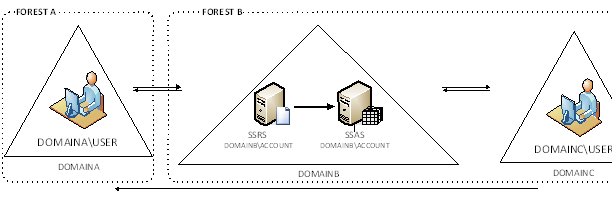

In this case, the customer has two AD forests. The internal users are in DOMAINA in FORESTA. The production servers are in DOMAINB. The external user accounts are in DOMAINC. Both DOMAINB and DOMAINC are in a separate forest. There is a bi-directional trust between DOMAINA and DOMAINB and between DOMAINB and DOMAINC. DOMAINC trusts DOMAINA.

After registering SPNs, etc., we found that Kerberos didn’t work. Or, to be more specific, it worked partially. If a service account from DOMAINB was used to run SSRS and SSAS, the double hop (user-SSRS-SSAS) was successful for users on DOMAINC but not for DOMAINA users. And vice versa, if a DOMAINA service account was used, only DOMAINA users could run reports. After a fair amount of tracing, we saw that when DOMAINB tried to resolve the SSAS SPN, it failed with a KDC_ERR_S_PRINCIPAL_UNKNOWN error. For some obscure reason, it was trying to resolve the SPN by using the SSAS NetBIOS name against the DOMAINA domain controller, and DOMAINA was failing to find it. You would expect Kerberos to fall back and do a second search in the domain the end user belongs to, but it doesn’t. Perhaps it gets lost in the forests.

How do we solve this horrible issue? Use the fully qualified domain name (FQDN) of the SSAS server in the report data source. For example, instead of SSASServer, we would use SSASServer.DOMAINB.LOCAL. This forces Kerberos to look up the SSAS SPN in DOMAINB. Please note that in this case, the SSRS server runs in native mode. I don’t know what will happen if the SSRS server is configured for SharePoint integration. The Configuring Kerberos Authentication for Microsoft SharePoint 2010 Products whitepaper states “Basic Kerberos delegation can cross domain boundaries in a single forest, but cannot cross a forest boundary regardless of trust relationship. Kerberos constrained delegation cannot cross domain or forest boundaries in any scenario.” This makes me somewhat skeptical, but it might apply to the scenario where SharePoint and SSAS are in two different forests.

I had a laptop that blue-screened and shut down. Some files got corrupted and were fixed by CheckDisk. Apparently, something got whacked during the process because Analysis Services would refuse to start with the following error:

The service cannot be started: XML parsing failed at line 1, column 40961: Illegal xml character.

Unfortunately, Analysis Services doesn’t support starting in recovery mode as the SQL Server Database Engine does. Usually, when you get a message like this, the error message tells you which object file, such as a dimension, got corrupted. To fix it, you would delete the database from C:\Program Files\Microsoft SQL Server\<SSAS Version>\OLAP\Data and reprocess the database or restore it from backup. But in this case, the error message in the Windows Event Viewer didn’t indicate any object. As it turned out, the issue was a corrupted master.vmp file in the Data folder. The resolution path was:

Scenario: A customer wants to have report row-level security and data-driven subscriptions. If you have used SSRS for a while, you will know that the cornerstone of report row-level security is User!UserID, which returns the user identity. But here is the catch – SSRS will refuse to create a data-driven subscription if a report has an expression with User!UserID.

Solution: How do we solve this horrible issue? A great hack is in order. We will disguise the User!UserID in an embedded function inside the report or in an external assembly so SSRS can’t see it. (The reason why this function is named GetHashedUser although no hashing is in place will become obvious in a moment)

```vb
Public Function GetHashedUser() As String
    ' Wrap User!UserID in custom code so SSRS does not see it in a report expression
    Return Report.User!UserID.ToLower()
End Function
```

Believe it or not, this gets the data-driven subscription going. But there is another catch. A data-driven subscription runs in unattended mode and you need to pass the user identity as a parameter. So, when the report is executed live, we want to pass the internal User!UserID to the report query or stored procedure. But when the report is subscribed to, we want to pass the user identity as a parameter. A simple expression for the query parameter will do the trick.

```vb
=IIf(Parameters!UserID.Value = "", Code.GetHashedUser(), Parameters!UserID.Value)
```

If the parameter is empty (live delivery), we will call the GetHashedUser function; otherwise, we will pass the parameter value to the database. So far so good, but we now have a security hole. There is nothing stopping the end user from spoofing the report parameter and passing another user’s identity to it to force the report to take the data-driven subscription path. To fix this, we will need some level of protection. I suggest a solution that hashes the user identity with SHA1 both in the report and in the database. This should keep spoofy users at bay.
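
To make the idea concrete outside of SSRS, here is a minimal sketch in Python. It is not the code from the download, only an illustration under stated assumptions: the function names, the sample logins, and the shared salt are all hypothetical and introduced here. It assumes the subscription passes the hash of the login rather than the plain login, and that the database side recomputes the hash for each known login and looks for a match. The salt is my addition; without a secret on both sides, anyone who knows the scheme could compute the hash of another login.

```python
import hashlib
from typing import List, Optional

# Hypothetical shared secret (an assumption of this sketch, not from the post).
SALT = "replace-with-a-secret"


def hash_user(login: str, salt: str = SALT) -> str:
    """Return the hex SHA1 digest of the lower-cased login plus the salt."""
    return hashlib.sha1((login.lower() + salt).encode("utf-8")).hexdigest()


def effective_user_hash(parameter_value: str, live_login: str) -> str:
    """Mirror the query parameter expression.

    An empty parameter means live execution, so hash the live login.
    Otherwise pass through the value supplied by the data-driven
    subscription, which is expected to be a hash already.
    """
    if parameter_value == "":
        return hash_user(live_login)
    return parameter_value


def resolve_login(user_hash: str, known_logins: List[str]) -> Optional[str]:
    """Database-side check: return the login whose hash matches, if any."""
    for login in known_logins:
        if hash_user(login) == user_hash:
            return login
    return None
```

With this shape, a live run by DOMAINA\alice resolves to her own login, a subscription that passes the hash of DOMAINA\bob resolves to bob, and a user who types the plain text DOMAINA\bob into the parameter matches nothing because the plain login is not a valid hash.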

You can download the complete code with an AdventureWorks sample report here (requires registration).

Note: The above solution assumes a report connection string with stored credentials, which is what you would typically have for T-SQL (relational) reports. What about SSAS with Windows and dimension security? Not a good story, and no changes in Denali unless you vote for the following suggestions on Connect:

SSAS and data-driven subscriptions have been the bane of my existence. Greg Galloway has an excellent writeup here. In a nutshell, clumsy workarounds include duplicating the report definitions and using an embedded data source with expression-based connection strings, or implementing the row-level security in the report (as you would do with relational reports). So, please vote! Let’s see if we can convince Microsoft that we do use SSAS and that this is a widespread issue that deserves to be fixed ASAP.

I recall seeing “fast queries in SSMS but slow in SSRS” issues reported a few times in the public discussion list but I’ve always dismissed them as unrelated to SSRS. Alas, I happened to see one today when a report would take a very long time (7 min) to execute. As usual, the first stop was to take a look at the SSRS Execution Log which showed that all the time was spent in data retrieval. However, executing the report query in SSMS would take a few seconds. In this case, the report had a complex free-text T-SQL SELECT statement that referenced a single report parameter several times in the query.

The workaround we found at the end was to declare a variable at the beginning of the query that referenced the parameter once, as follows:

```sql
DECLARE @invoice_number AS int
SET @invoice_number = @inv_parameter
```

Then, we referenced the variable in the report query instead of the query parameter. This solved the mysterious query performance issue, which appears to be a bug with parameter passing in the SQL Server dataset extension. In this case, the customer had SQL Server 2008. The issue might be fixed in SQL Server 2008 R2; I haven’t checked.