Day 1 of TechEd 2009 is almost over, with the exception of the Community Influencers Party tonight. I heard that this year they expect 7,000 attendees. This is a huge scale-down from previous years. For instance, we had 16,000 attendees at TechEd USA 2007. The economy is hitting everything hard.

I thought the keynote was kind of lame. Judging by it, Microsoft has only three products: Windows 7 (officially announced to ship around the holidays, although Microsoft didn’t say which holidays), Windows Server 2008 (the buzz is now the forthcoming R2 release), and Exchange Server 2010. Unlike previous TechEds, there wasn’t a single announcement about other products. SQL Server KJ, Office 2010, Azure, dev tools? Nope, apparently not worth mentioning. Sure, Mark Russinovich, whom I respect very much, did some cool Windows 7 demos, but they were not enough to pique my interest. I understand that the OS and Exchange Server are bedrock for every business and that, after the sad Vista saga, we have to show the world that now we’ll do things right with Windows 7, but the BI soul in me was thirsty for more.

After lunch, I hung around the BI area of the Learning Center, where I answered questions and met with other peers, including Nick Barclay (MVP), whom I had wanted to meet in person for a while. Then, I attended Donald Farmer and Kamal Hathi’s excellent session, Microsoft Project Code Name “Gemini”: Self Service Analysis and the Future of BI. I had blogged briefly about Gemini before without knowing too much, so this was my chance to see it in action for the first time and gain more in-depth knowledge.

Gemini is an end-user-oriented Excel add-in that will let the user acquire data from a variety of data sources, including SSRS reports (SSRS KJ will expose reports as data feeds) and SharePoint lists, and load it into an Excel spreadsheet. The tool crunches data very fast even on a modest computer (the demo showed a notebook computer working with millions of rows) thanks to its ability to compress column-level data. This works because a dataset column typically contains a lot of redundant (repeated) data.
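To get a feel for why redundant columns compress so well, here is a minimal Python sketch of dictionary encoding, one common column-compression technique. The session didn't reveal which algorithm Gemini actually uses, so treat this purely as an illustration; the function names and the sample `country` column are my own made-up examples.

```python
def dictionary_encode(column):
    """Replace each value with a small integer index into a list of distinct values."""
    dictionary = []   # distinct values, in order of first appearance
    index_of = {}     # value -> position in dictionary
    codes = []        # one small integer per row
    for value in column:
        if value not in index_of:
            index_of[value] = len(dictionary)
            dictionary.append(value)
        codes.append(index_of[value])
    return dictionary, codes


def dictionary_decode(dictionary, codes):
    """Rebuild the original column from the dictionary and the codes."""
    return [dictionary[code] for code in codes]


# Hypothetical column: 6,000 rows but only three distinct values.
country = ["USA", "USA", "Canada", "USA", "Canada", "Mexico"] * 1000

dictionary, codes = dictionary_encode(country)
print(len(country), "rows,", len(dictionary), "distinct values")
```

Each row now needs only a small integer instead of a full string, and the fewer distinct values a column has, the bigger the savings.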

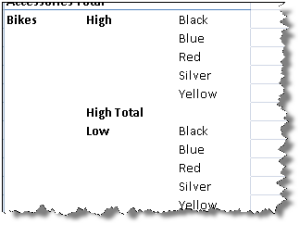

Once data is loaded in Excel, the tool will attempt to automatically determine the relationships between datasets (loaded in separate spreadsheets) to create a hidden dimensional model consisting of in-memory fact and dimension tables. The user will also be able to manually specify the dataset relationships by telling the tool which column will be used to join the datasets (very much like joining relational tables). Moreover, the user will be able to define calculated columns using Excel-style formulas. Finally, because the add-in builds an in-memory cube behind the scenes, the user will be able to slice and dice data in a PivotTable report. So, no Analysis Services is needed if all the user wants is to manipulate data on the desktop.
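Conceptually, the join / calculated column / pivot flow described above boils down to something like the following Python sketch. This is not how Gemini is implemented (I haven't seen its internals); the datasets, column names, and helper functions are all hypothetical and only meant to illustrate the idea.

```python
from collections import defaultdict

# Hypothetical "fact" dataset (one spreadsheet) and "dimension" dataset (another).
sales = [
    {"ProductID": 1, "Year": 2008, "Quantity": 10, "UnitPrice": 2.5},
    {"ProductID": 1, "Year": 2009, "Quantity": 4, "UnitPrice": 2.5},
    {"ProductID": 2, "Year": 2008, "Quantity": 3, "UnitPrice": 10.0},
    {"ProductID": 2, "Year": 2009, "Quantity": 1, "UnitPrice": 10.0},
    {"ProductID": 3, "Year": 2009, "Quantity": 2, "UnitPrice": 100.0},
]
products = [
    {"ProductID": 1, "Category": "Accessories"},
    {"ProductID": 2, "Category": "Accessories"},
    {"ProductID": 3, "Category": "Bikes"},
]


def join(fact_rows, dim_rows, key):
    """Relate two datasets on a shared column, like joining relational tables."""
    lookup = {row[key]: row for row in dim_rows}
    return [{**fact, **lookup[fact[key]]} for fact in fact_rows if fact[key] in lookup]


def add_calculated_column(rows, name, formula):
    """Add a column computed per row, similar in spirit to an Excel-style formula."""
    return [{**row, name: formula(row)} for row in rows]


def pivot_sum(rows, row_field, column_field, value_field):
    """Aggregate a value by two fields, which is what a pivot report shows."""
    totals = defaultdict(float)
    for row in rows:
        totals[(row[row_field], row[column_field])] += row[value_field]
    return dict(totals)


model = join(sales, products, "ProductID")
model = add_calculated_column(model, "Amount", lambda r: r["Quantity"] * r["UnitPrice"])
report = pivot_sum(model, "Category", "Year", "Amount")

for (category, year), amount in sorted(report.items()):
    print(category, year, amount)
```

The interesting part of Gemini is that the end user gets this without writing any code and at a scale of millions of rows.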

Where things get more interesting is deploying the models to the server. To do so, the end user would deploy the Excel spreadsheet to the MOSS Report Library. Note that MOSS is required for server-side deployment. When other users request the spreadsheet, an Analysis Services redirector will understand that this is a Gemini model and service the requests from a server cube. At this point, it is not clear how exactly the server cube will be built and whether it could be managed in SSMS. Once the cube returns data, Excel Services will kick in to return the data in HTML. A Reporting Services client can also connect to the server cube by its URL. This is no different from connecting to a regular cube, as Reporting Services will launch the familiar MDX query designer.

So, where is IT in the new Gemini world? IT will use a cool MOSS dashboard to understand who’s deployed what model and how the models are used, such as when the datasets were refreshed, which models are the most popular, what resources these models took on the server, etc.

What’s my personal take on Gemini? It’s not up to me to decide how useful it is since it’s a business-oriented tool, like Report Builder 2.0. Business users will have the final word. Based on my personal experience though, the data analytics problems that I need to solve with traditional Analysis Services cubes surpass the Gemini capabilities by far. So, don’t throw your MDX knowledge out the door yet. In my line of work, I can see Gemini being useful as a cube prototyping tool, especially in the early stages of requirement gathering, where data can be typed in Excel and I can demonstrate to users what a cube can do for them. Of course, Microsoft’s plans for Gemini are much more ambitious than that. In an ideal world, all business users would upgrade to Office 2010 and create cool Gemini models to give IT folks a long-deserved break ;-). Or, so the fairytale goes….

To wrap up the day, I attended Rick Negrin’s presentation, What’s New in Microsoft SQL Data Services, and found out that SQL Data Services is nothing more than SQL Server running in Microsoft data centers. SQL Data Services will support two application connectivity modes: a “code near” mode, where the application (typically a web application) is deployed to Azure, and a “code far” mode, where the application will connect to SQL Server over the Internet using the TDS protocol. Microsoft’s role is to provide scalability and failover. Not all SQL Server features will be available in version 1. For example, CLR will not make the cut.

A long and tiring day. I am off to the party now.