Prologika Newsletter Fall 2022

BI implementation shortcuts are tempting and disguise themselves as cost-effective. True, you can slap a Power BI dataset directly on top of your data (the cornerstone of self-service BI), but most BI implementations will greatly benefit from a datamart, irrespective of the propaganda of self-service BI vendors. I explain the benefits of a datamart over a Power BI dataset built directly on the data sources in my blog post “Datasets vs. Datamarts”. This newsletter discusses a newly released Power BI feature that attempts to simplify the implementation of datamarts by business users.

Understanding Datamarts

Let’s define a datamart as a centralized repository for hosting clean and trusted data that is typically organized in a star schema, i.e. as a set of fact tables and dimension (lookup) tables. BI pros would typically host a datamart in a relational database. Nowadays, a cloud-based datamart, such as one hosted in Azure SQL Database, Azure SQL Managed Instance, or Azure Synapse, is the norm. Once the datamart schema is designed, a BI pro would use a professional tool, such as Azure Data Factory (ADF), to load the datamart on a schedule, for example nightly.
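To make the star schema idea concrete, here is a minimal sketch. It assumes an in-memory SQLite database as a stand-in for a cloud host such as Azure SQL Database, and the table and column names (DimProduct, DimDate, FactSales) are hypothetical. The fact table holds the numeric measures plus a foreign key to each dimension, and a typical BI query aggregates the facts sliced by dimension attributes.

```python
import sqlite3

# Star schema sketch: SQLite stands in for a relational host such as
# Azure SQL Database. All table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE DimProduct (
    ProductKey  INTEGER PRIMARY KEY,
    ProductName TEXT NOT NULL,
    Category    TEXT NOT NULL
);
CREATE TABLE DimDate (
    DateKey      INTEGER PRIMARY KEY,  -- e.g. 20221001
    CalendarYear INTEGER NOT NULL,
    MonthName    TEXT NOT NULL
);
-- The fact table stores measures plus a foreign key to each dimension.
CREATE TABLE FactSales (
    DateKey     INTEGER NOT NULL REFERENCES DimDate(DateKey),
    ProductKey  INTEGER NOT NULL REFERENCES DimProduct(ProductKey),
    Quantity    INTEGER NOT NULL,
    SalesAmount REAL NOT NULL
);
""")

# Load a few sample rows (made-up illustration data).
conn.executemany("INSERT INTO DimProduct VALUES (?, ?, ?)",
                 [(1, "Road Bike", "Bikes"), (2, "Helmet", "Accessories")])
conn.executemany("INSERT INTO DimDate VALUES (?, ?, ?)",
                 [(20221001, 2022, "October"), (20221101, 2022, "November")])
conn.executemany("INSERT INTO FactSales VALUES (?, ?, ?, ?)",
                 [(20221001, 1, 2, 2000.0),
                  (20221001, 2, 5, 250.0),
                  (20221101, 1, 1, 1000.0)])

# A typical BI query: aggregate the facts, sliced by a dimension attribute.
rows = conn.execute("""
SELECT p.Category, SUM(f.SalesAmount) AS TotalSales
FROM FactSales AS f
JOIN DimProduct AS p ON p.ProductKey = f.ProductKey
GROUP BY p.Category
ORDER BY p.Category
""").fetchall()
print(rows)  # [('Accessories', 250.0), ('Bikes', 3000.0)]
```

In a real datamart, the load step (ADF in the scenario above) would replace the hand-written INSERT statements.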

As Microsoft has announced, Power BI datamarts are meant to democratize datamart implementation by putting it in the hands of business users. In my opinion, this is yet another premium feature, spearheaded with great vision and effort, but of questionable practical value. In a nutshell, a Power BI datamart is a combo of Power BI Premium and a Microsoft-hosted Azure SQL Database aiming to simplify the implementation of a departmental datamart.

The Good

Unlike other vendors, such as Domo and their proprietary and overly expensive stack, Microsoft has decided to go with a somewhat open solution consisting of tools that Power BI users already know: Power Query, Power BI Desktop (for the first time, some of its modeling features, such as relationships and DAX measures, have made it to the cloud), and SQL Server. Microsoft provisions the database for you but surrounds it with some red tape (more on this in a moment). Thus, a business user aiming for a “no code, low code” experience will whip out Power Query dataflows that populate the database and then build a model (dataset) directly in Power BI Service. Obviously, the main goal is to simplify the experience as much as possible so that all the action happens online.

It’s nice that Microsoft chose to host the data in a SQL database instead of a “lakehouse”. Apparently, they learned some painful lessons from Power Query CDM folders. The database can grow up to 100 GB, which is not bad at all. I also like that some Power BI modeling features finally made it to Power BI Service, but unfortunately only within the confines of the datamart feature.

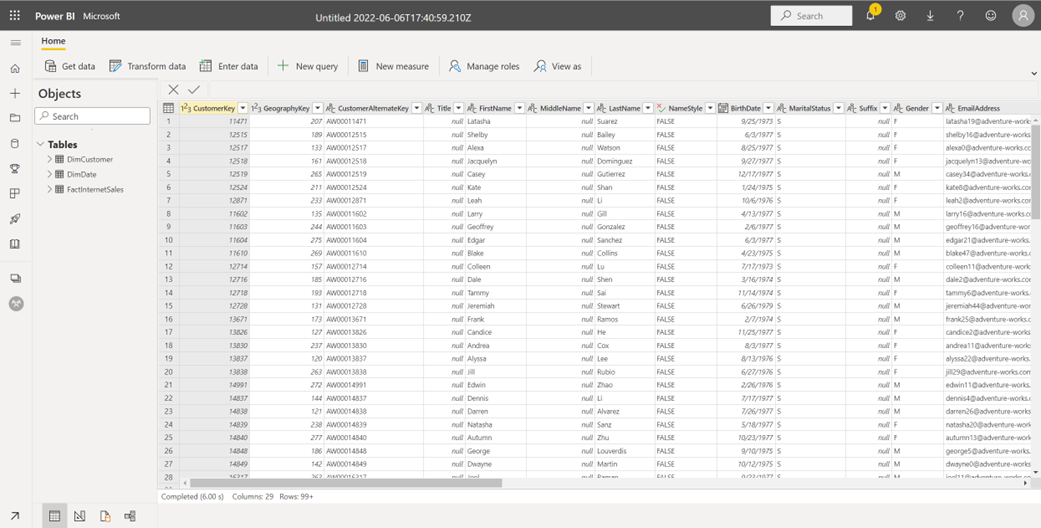

The screenshot below shows how a business user can use Power Query dataflow to transform and save the data into a Power BI datamart.

The Bad

From the announcement: “Best of all, IT doesn’t have to worry about getting all data into centrally governed data sources, thus providing discipline at the core and flexibility at the edge.” I fail to see how this will provide “discipline at the core”, a tenet that Microsoft learned from their own pain points after tilting too much toward self-service BI. I’ve also seen statements online that business users don’t have to “consult with IT anymore” when implementing datamarts. Really? What happened to managed self-service BI? I’m sure IT will be thrilled to have corporate data in Microsoft-owned databases that they can’t manage, with queries running amok and consuming precious premium resources. Luckily, the admin portal has a switch to control who can create these datamarts. I hope we will at least get a BYO (bring your own) database feature at some point.

The elastic Azure SQL database that Microsoft provisions is read-only, meaning that you can’t extend it. I’m a big fan of pushing calculations as far upstream as possible to SQL Server, such as by implementing SQL views, but that isn’t possible here. Instead, we must use Power Query (what else?) for all data transforms. But I have serious reservations about Power Query dataflows, a tool that is known to cause performance issues without providing any troubleshooting and maintenance insights.
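For readers less familiar with the “push calculations upstream” approach, here is a minimal sketch of what a SQL view buys you in a database you control. It assumes an in-memory SQLite database as a stand-in for SQL Server, and the table, view, and column names (SalesOrderLine, vSalesOrderLine, NetAmount) are hypothetical. The business rule for the net amount is defined once in the view, so every downstream consumer reuses the same logic instead of re-implementing it in each dataflow.

```python
import sqlite3

# Sketch: a calculation pushed upstream into a SQL view.
# SQLite stands in for SQL Server; all names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE SalesOrderLine (
    OrderID     INTEGER NOT NULL,
    Quantity    INTEGER NOT NULL,
    UnitPrice   REAL NOT NULL,
    DiscountPct REAL NOT NULL DEFAULT 0
);
-- The net amount rule lives in exactly one place: this view.
CREATE VIEW vSalesOrderLine AS
SELECT OrderID,
       Quantity,
       UnitPrice,
       Quantity * UnitPrice * (1 - DiscountPct) AS NetAmount
FROM SalesOrderLine;
""")

# Made-up sample rows: one undiscounted line, one with a 25% discount.
conn.executemany("INSERT INTO SalesOrderLine VALUES (?, ?, ?, ?)",
                 [(1, 2, 100.0, 0.0), (2, 4, 50.0, 0.25)])

# Consumers query the view, not the base table.
net = conn.execute(
    "SELECT OrderID, NetAmount FROM vSalesOrderLine ORDER BY OrderID"
).fetchall()
print(net)  # [(1, 200.0), (2, 150.0)]
```

Because the provisioned datamart database is read-only, a view like this can’t be added there, which is why the same rule would have to be reproduced in Power Query.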

The Ugly

Do we really need this feature? I would argue that what was really needed was extending Power Query with “destinations”, where the user can specify where the data should land. If that had been implemented, IT could selectively let business users augment the IT-built infrastructure with self-service ETL (more than likely temporary) that sinks the data into an IT-sanctioned database.

Further, it would have gotten us out of another proprietary mess that forces dataflows to save their output into CDM folders that make sense only to Microsoft (see my “Power BI Dataflows vs ADF Mapping Data Flows” blog for the gory details). Want to save dataflow data somewhere else? For now, Power BI datamarts are your only option, because they are the only way to land dataflow output in a relational database, and a Microsoft-provided one at that.

Conclusion

Recently, an enterprise client decided to migrate all self-service Alteryx flows to IT-governed ADF pipelines. More than likely, Power BI datamarts will head in the same direction. Be very careful with any pure self-service features, as you might find yourself in a bigger mess than the one you tried to solve.