App Integration Tools and Patterns

“…That’s when the teardrops start, fella…

…Dirty deeds and they’re done dirt cheap”

“Dirty deeds done dirt cheap”, AC/DC

A few months ago, I did an assessment for a large organization seeking to modernize their data analytics processes. As a part of standardizing the toolset, the client was considering Salesforce MuleSoft for both application and data integration. After all, the vendor told them the tool can do “everything”. Indeed, taken from the vendor page, we learn that “MuleSoft is a platform that gives IT the tools to automate everything. This includes integrating data and systems, automating workflows and processes, and creating incredible digital experiences — all on a single, easy-to-use platform.”

Let’s parse this claim.

Defining integration types

Quick as a bunny and excited that we finally have one tool that does it all, we hop to Gartner’s Magic Quadrant for Data Integration Tools. Surprise, Salesforce MuleSoft is not even there. Where is MuleSoft? It’s in Gartner’s Magic Quadrant for Integration Platform as a Service and the Magic Quadrant for API Management. Confused? Let’s start with definitions.

Data integration (note the focus on data)

“Gartner defines data integration as the discipline that includes architectural patterns, tools, and methodologies for accessing, harmonizing, transforming, processing, and moving data across various endpoints and infrastructures. It ensures consistent access and delivery of data across different subject areas and structures to meet business and application needs.”

Integration Platform (note the focus on applications)

“Gartner defines integration platform as a service (iPaaS) as a vendor-managed cloud service that enables end users to implement integrations between a variety of applications, services and data sources, both internal and external to their organization.”

API Management (note the focus on APIs)

“Gartner defines the application programming interface (API) management market as the market for software that supports API life cycle stages such as planning and design, implementation and testing, deployment and operation, and versioning and retirement.”

So, should we use MuleSoft for ETL and data integration patterns that typically involve large data volumes, such as loading a data warehouse? I say we shouldn’t. You probably could, but it would be like trying to fit a square peg into a round hole. Did that client try it despite my advice? They did, and they came to the same realization. Should we consider MuleSoft for enterprise-level app integration, where an event in one system triggers an action in another system through API calls? Probably, that’s what it’s designed to do anyway. Let’s choose the right tool for the job.

Application integration done dirt cheap

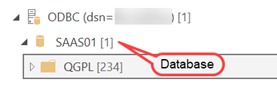

What about a true application integration scenario? In a current assessment, a client uses a third-party app which stores data in a SQL Server database. Each subsidiary has its own database that tracks invoicing locally through the app. However, a central NetSuite ERP system is used to consolidate invoices from all subsidiaries. So, the integration task at hand is to implement a bi-directional synchronization where each new local invoice (and its associated line items) is exported to NetSuite and the local invoice is then updated with the corresponding NetSuite transaction id (it’s more complicated than this but you get the idea).
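To make the round trip concrete, here is a minimal Python sketch of the pattern, not the client's actual implementation. Everything in it is an assumption for illustration: the `invoice` and `invoice_line` tables, the `erp_transaction_id` column, the payload field names, and the `post_invoice` callable that stands in for the real NetSuite call. An in-memory SQLite database plays the role of the subsidiary's SQL Server database so the example runs on its own.

```python
import sqlite3
from typing import Callable


def make_demo_db() -> sqlite3.Connection:
    """Build a tiny in-memory stand-in for one subsidiary's database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE invoice (
            invoice_id INTEGER PRIMARY KEY,
            customer TEXT NOT NULL,
            erp_transaction_id TEXT  -- NULL until the invoice is exported
        );
        CREATE TABLE invoice_line (
            invoice_id INTEGER NOT NULL,
            line_no INTEGER NOT NULL,
            description TEXT NOT NULL,
            amount REAL NOT NULL
        );
        INSERT INTO invoice VALUES (1, 'Acme', 'TX-OLD'), (2, 'Globex', NULL);
        INSERT INTO invoice_line VALUES
            (2, 1, 'Widgets', 40.0),
            (2, 2, 'Shipping', 2.5);
        """
    )
    return conn


def sync_new_invoices(
    conn: sqlite3.Connection,
    post_invoice: Callable[[dict], str],
) -> list:
    """Export invoices that have no ERP id yet and write the returned id back.

    post_invoice is a placeholder for the real ERP call: it takes the
    payload and returns the transaction id assigned by the ERP.
    """
    pending = conn.execute(
        "SELECT invoice_id, customer FROM invoice "
        "WHERE erp_transaction_id IS NULL ORDER BY invoice_id"
    ).fetchall()

    synced = []
    for invoice_id, customer in pending:
        lines = conn.execute(
            "SELECT description, amount FROM invoice_line "
            "WHERE invoice_id = ? ORDER BY line_no",
            (invoice_id,),
        ).fetchall()
        payload = {
            "local_invoice_id": invoice_id,
            "customer": customer,
            "lines": [{"description": d, "amount": a} for d, a in lines],
        }
        transaction_id = post_invoice(payload)
        # Second leg of the sync: stamp the local row with the ERP id.
        conn.execute(
            "UPDATE invoice SET erp_transaction_id = ? WHERE invoice_id = ?",
            (transaction_id, invoice_id),
        )
        conn.commit()  # commit per invoice so a later failure keeps earlier work
        synced.append((invoice_id, transaction_id))
    return synced
```

Because the filter is "no ERP id yet", running the sync a second time exports nothing new. The sketch does not handle the case where the ERP call succeeds but the local update fails; a real implementation would need some way to detect an invoice that was already exported.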

Now this is where we can surely use “specialized” tools like MuleSoft, Boomi, Celigo, etc., right? And we would surely pay a premium, on the order of thousands of dollars per month. I don’t claim to know these tools, but for the most part they appear to me to be nice UI wrappers on top of the vendor APIs. They certainly spare you from dealing with the actual API complexities, JSON payloads, etc., but should you lock yourself in and break the bank for small-scale app integration? Nowadays, everyone wants to do more with less.

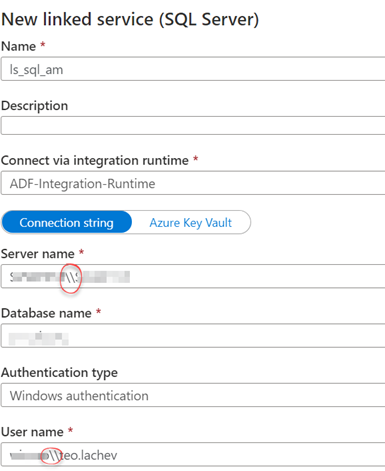

Therefore, since I know and use the Microsoft Azure stack, my personal choice is to first consider Azure Data Factory (ADF). True, it’s a generic data integration tool, and I must learn how to call the vendor APIs and deal with JSON, but it’s not a big deal (AI can help here). ADF can even do webhooks. And it will save you tons of money.
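As a taste of what "dealing with JSON" means in practice, here is a small Python sketch that turns a flat result set, of the kind a SQL join of invoices and line items returns, into one nested JSON document per invoice. It is only an illustration of the shaping step: the column names and payload field names are made up, and the real field names would come from the vendor's API documentation.

```python
import json
from itertools import groupby
from operator import itemgetter


def rows_to_payloads(rows: list) -> list:
    """Group flat invoice/line rows into one nested payload per invoice.

    Each row is a dict with hypothetical keys:
    invoice_id, customer, description, amount.
    """
    # groupby only groups adjacent items, so sort by the grouping key first.
    # sorted() is stable, so line items keep their original relative order.
    ordered = sorted(rows, key=itemgetter("invoice_id"))
    payloads = []
    for invoice_id, group in groupby(ordered, key=itemgetter("invoice_id")):
        items = list(group)
        payloads.append(
            {
                "local_invoice_id": invoice_id,
                "customer": items[0]["customer"],
                "total": sum(item["amount"] for item in items),
                "lines": [
                    {"description": item["description"], "amount": item["amount"]}
                    for item in items
                ],
            }
        )
    return payloads


def to_json(payload: dict) -> str:
    """Serialize one payload as the request body text."""
    return json.dumps(payload, sort_keys=True)
```

For example, two rows for invoice 2 and one row for invoice 5 come out as two payloads, each carrying its own `lines` array, ready to be posted one request at a time.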