Top 5 Lessons Learned from IBM Db2 Integration Projects

I’ve done a few BI integration projects extracting data from ERPs running on IBM Db2. Most of these implementations used a hybrid architecture in which the ERP ran on an on-premises mainframe while the data was loaded into Microsoft Azure. Here are a few tips if you’re facing this challenge:

- IBM provides JDBC, ODBC, and OLE DB drivers. The JDBC driver isn’t an option for the Microsoft toolset because none of the Microsoft BI tools run on the Java runtime. Microsoft also provides its own OLE DB Provider for Db2.

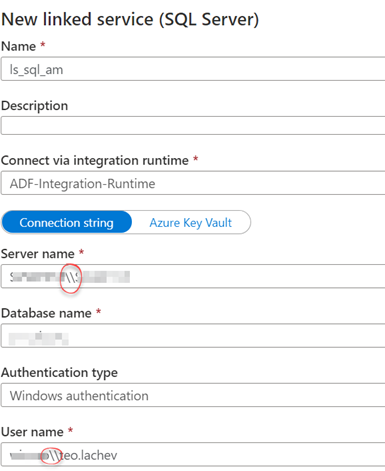

- If you use Azure Data Factory (ADF) or Power BI Desktop, you can use the bundled Microsoft OLE DB driver for Db2. Here are the settings for configuring an ADF linked service that uses the Db2 driver:

- Server name – specify the server name of IP address of the IBM Db2 server. For DB2 LUW the default port is 50000, for AS400 the default port is 446 or 448 when TLS enabled. Example: erp.servername.com:446 to connect to AS400.

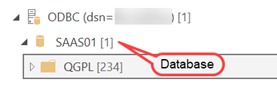

- Database name – that one is tricky as I’ve found that even the client doesn’t know it because the ODBC/JDBC driver hides it. The easiest way to obtain it is to connect Power BI Desktop to Db2 using the ODBC driver and look at the Navigator window.

- Package collection: This one can get you in trouble. If you get an error “The package corresponding to an SQL statement execution request was not found. SQLSTATE=51002 SQLCODE=-805”, enter NULLID as explained in the ADF documentation (see the link above).

- To use the IBM drivers, you need to install them on the on-prem VM that hosts the ADF self-hosted integration runtime. Consider using the Microsoft-provided drivers instead, because they are bundled with the runtime and there is nothing for you to install.

- Based on my tests, all drivers have similar performance, but if you plan to use the IBM drivers, do upgrade to the latest version. In one project, I found that the latest IBM ODBC driver was twice as fast as the older driver the client had been using.

- The read throughput will be limited mostly by the mainframe server itself and by the connection bandwidth between the data center and Azure. In every project, reading from the mainframe was far slower than reading from a modest SQL Server. For example, in one project it took 2.5 minutes to read one million rows from the production mainframe, versus 40 seconds to read the same data from SQL Server.
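The ADF linked service settings listed earlier (server and port, database name, package collection) end up in the linked service’s JSON definition, which is handy if you deploy ADF artifacts from source control. Below is a minimal Python sketch that assembles such a definition. It assumes the older property-based payload of the ADF Db2 connector (`server`, `database`, `authenticationType`, `username`, `password`, `packageCollection`, `connectVia`); newer connector versions may expect a connection string instead, so check the current connector documentation. The helper names, the `AS400`/`DB2_LUW` platform labels, and the sample values (`ERPDB`, `etl_reader`, `SelfHostedIR`) are hypothetical.

```python
import json


def default_port(platform: str, use_tls: bool = False) -> int:
    """Return the default Db2 port; 'AS400' and 'DB2_LUW' are hypothetical labels."""
    if platform == "AS400":
        return 448 if use_tls else 446
    if platform == "DB2_LUW":
        if use_tls:
            # No TLS default is assumed for Db2 LUW; the caller must supply the port.
            raise ValueError("Pass an explicit port for Db2 LUW over TLS")
        return 50000
    raise ValueError(f"Unknown platform: {platform!r}")


def db2_linked_service(name, host, database, username, password,
                       platform="AS400", use_tls=False, port=None,
                       package_collection=None, ir_name=None):
    """Build an ADF Db2 linked service definition as a dict (hypothetical helper)."""
    if port is None:
        port = default_port(platform, use_tls)
    type_properties = {
        "server": f"{host}:{port}",  # server name or IP address plus port
        "database": database,  # e.g. the name shown in Power BI's Navigator window
        "authenticationType": "Basic",
        "username": username,
        # In a real deployment, reference a secret store instead of an inline password.
        "password": {"type": "SecureString", "value": password},
    }
    if package_collection:
        # For example "NULLID" after hitting SQLSTATE=51002 SQLCODE=-805
        type_properties["packageCollection"] = package_collection
    properties = {"type": "Db2", "typeProperties": type_properties}
    if ir_name:
        # Route the connection through the self-hosted integration runtime
        properties["connectVia"] = {
            "referenceName": ir_name,
            "type": "IntegrationRuntimeReference",
        }
    return {"name": name, "properties": properties}


if __name__ == "__main__":
    definition = db2_linked_service(
        "Db2ErpLinkedService", "erp.servername.com", "ERPDB",
        "etl_reader", "<password>", platform="AS400",
        package_collection="NULLID", ir_name="SelfHostedIR",
    )
    print(json.dumps(definition, indent=2))
```

The package collection is only added when you pass one, which matches the advice above: leave it empty unless you run into the SQLCODE=-805 error.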

While optimizing mainframe performance is likely outside your control, you can lobby for more connection throughput between the data center and Azure if you plan to transfer a lot of data. Aim for 500 Mbps or higher. For example, after the 2.5 minutes it took to read the aforementioned 1-million-row dataset from the server, it took another 2.5 minutes to transfer the data (about 2.5 Gb) to Azure because the connection throughput for this project was only 100 Mbps.
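The figures above lend themselves to a quick back-of-the-envelope check. The sketch below converts them into rows per second and shows how transfer time scales with link speed. It assumes decimal units (1 GB = 8 × 10^9 bits, 1 Mbps = 10^6 bits per second), treats the network link as the only bottleneck during the transfer, and ignores protocol overhead unless you pass the hypothetical `efficiency` factor, so treat the transfer estimates as optimistic lower bounds rather than predictions.

```python
MEGABIT = 10**6           # bits in one megabit (decimal)
GIGABYTE_BITS = 8 * 10**9  # bits in one gigabyte (decimal)


def rows_per_second(rows: int, seconds: float) -> float:
    """Average read rate for a full extract."""
    return rows / seconds


def transfer_seconds(payload_gb: float, link_mbps: float, efficiency: float = 1.0) -> float:
    """Idealized time to move payload_gb gigabytes over a link_mbps link.

    efficiency (0, 1] is a hypothetical fudge factor for protocol overhead.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return payload_gb * GIGABYTE_BITS / (link_mbps * MEGABIT * efficiency)


def rescale_transfer(observed_seconds: float, old_mbps: float, new_mbps: float) -> float:
    """If the link is the bottleneck, transfer time scales inversely with bandwidth."""
    return observed_seconds * old_mbps / new_mbps


if __name__ == "__main__":
    mainframe = rows_per_second(1_000_000, 150)  # 2.5 minutes on the mainframe
    sql_server = rows_per_second(1_000_000, 40)  # 40 seconds on SQL Server
    print(f"mainframe: {mainframe:,.0f} rows/s, SQL Server: {sql_server:,.0f} rows/s")
    print(f"SQL Server read {sql_server / mainframe:.2f}x faster")
    print(f"1 GB over 100 Mbps: {transfer_seconds(1, 100):.0f} s")
    print(f"2.5 min at 100 Mbps -> {rescale_transfer(150, 100, 500):.0f} s at 500 Mbps")
```

Under these assumptions, the mainframe in the example delivered about 6,667 rows per second versus 25,000 for SQL Server (3.75 times faster), and a transfer that took 2.5 minutes at 100 Mbps would take roughly 30 seconds at 500 Mbps.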