How Filters Affect DAX Measures

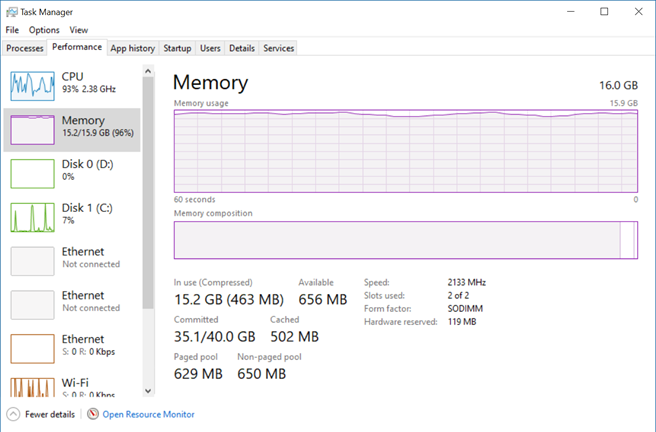

xVelocity (the storage engine behind Tabular, Power BI and columnstore indexes) is an in-memory columnar database, and it’s such it’s not suitable for detail-level reports, such as transaction-level reports. I wrote about performance implications with xVelocity and detail-level reporting here and here. In general, the lower the report grain and the more columns you add to the report (customer first name, last name, invoice number, etc.), the slower the performance will be as more and more columns would need to be scanned and cross-joined.

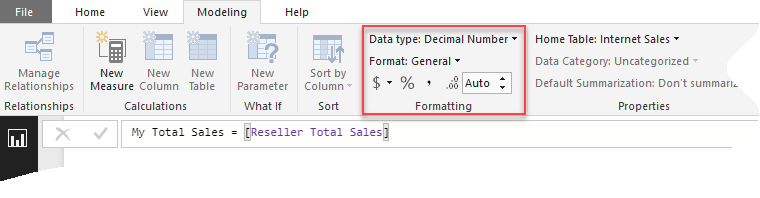

A recent Tabular project brought another twist. Most measures (about 250 total) were produced on top of a biggish snapshot table (250 million rows) and Customer table (12 million rows). As a best practice, base measures were created with common filters and then other measures would piggy back on the base measures like Russian dolls. It’s a best practice because if you must change a filter, you need to change only the base measures. For example, a [Number of Open Accounts] measure would filter the snapshot to find how many open accounts the customer has. Then, a [Number of Open Accounts: Banks] would apply another filter:

[Number of open accounts ever] (‘Creditor'[Subscriber Segment] = “Bank”)

Then, a web app will let the end user specify the customer identifiers and send a DAX query to the model to request all the 250 measures. The client complains that the query takes a long time and there isn’t much difference in the query execution for one customer or 100. To Microsoft credit, had the measures have the same filter, a “fusion cache” (introduced I believe in 2016 under “super DAX”) would kick in to reduce queries to the storage engine. For example, if the query requests SalesAmount, OrderQuantity, and other measures and they all have the same filters, this fusion cache will cache queries to the storage engine and help with the query execution. But the cache doesn’t work if measures have different filters irrespective if their definitions are nested or not. Consequently, each measure sends a separate query to the storage engine. So, if the query requests 100 measures for one customer, the storage engine will be queried 100 times and the 250 million snapshot table will be scanned 100 times for that one customer even if there are only a few transactions for that customer. This is a terribly inefficient way!

The first thing a database developer would do to solve this straight in SQL is to create a temporary table to extract the data for the selected customers before calculating the measures. But Tabular is not SQL Server. It would be great if at some point, it could introduce a caching mechanism to let the developer copy a subset of “rows” in the cache (in this case, extract rows from the Account Snapshot for the selected customers), and then transparently redirecting the snapshot queries to the cache (like how Power BI aggregations work today).

As things stand today, one way you can reduce the query execution time is to eliminate large joins. In this case, the DAX query filters the Customer table because some measures are produced from other tables. But you can split the query into multiple queries for the different tables and then apply the customer filter directly on the table bypassing the Customer table. So, instead of:

FILTER (VALUES (Customer[Customer ID] ), ‘Customer‘[Customer ID] IN { … }

We want to the filter for measures involving only the snapshot to be:

FILTER ( VALUES ( ‘Account Snapshot’[Customer ID] ), ‘Account Snapshot’[Customer ID] IN { … }

In this case, eliminating the Account Snapshot -> Customer join resulted in 50% improvement in the query execution time.

But you should also consider using the right tool for the job at hand and for detail-level reports this could be a relational database which reads data by rows and not columns.