SSRS Tabular Query Designer

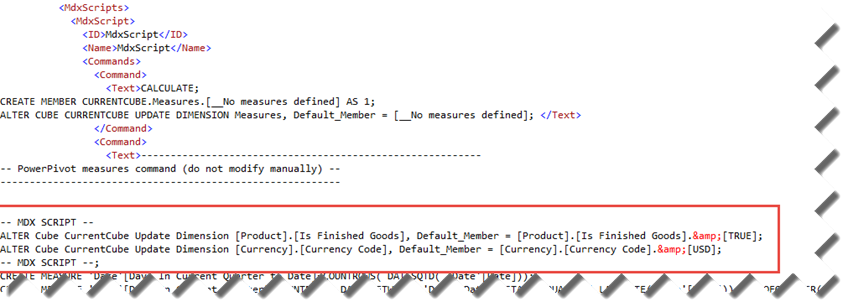

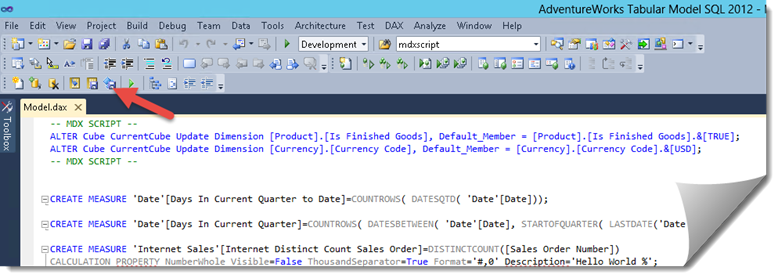

Analysis Services Tabular has gained a lot of momentum for implementing organizational semantic models, but its toolset has been lacking. Previously, SSRS developers had to rely on the MDX Query Designer to send MDX queries to Tabular. If DAX queries were preferred (and they often are for better performance, since DAX is the native Tabular query language), developers had to type in DAX manually. And if the query took parameters, the ugly workaround was to use … the DMX Query Designer.

As Chris Finlan announced yesterday, this will all change starting with the newly released Report Builder, SSDT 17 (currently in release candidate state), and SSMS 17 (also in RC state), as they now feature a Tabular Query Designer. SSMS also includes a DAX Query Editor, as Christian Wade explains here.

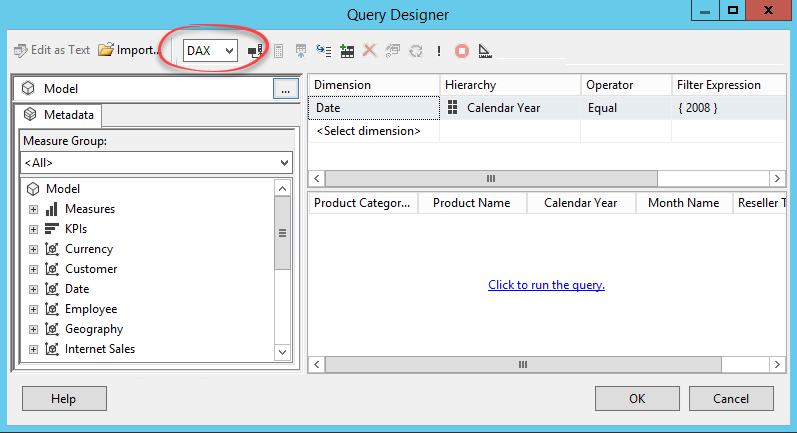

If you’re familiar with the MDX Query Designer, you already know how to use the Tabular Query Designer. When you connect to Tabular, the query designer will discover it and default to DAX query mode. The dropdown allows you to switch to MDX should you prefer to send MDX queries to Tabular.

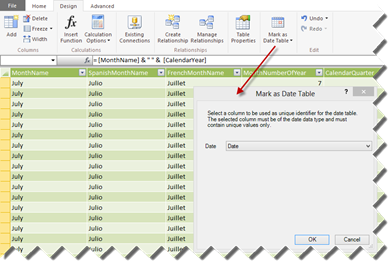

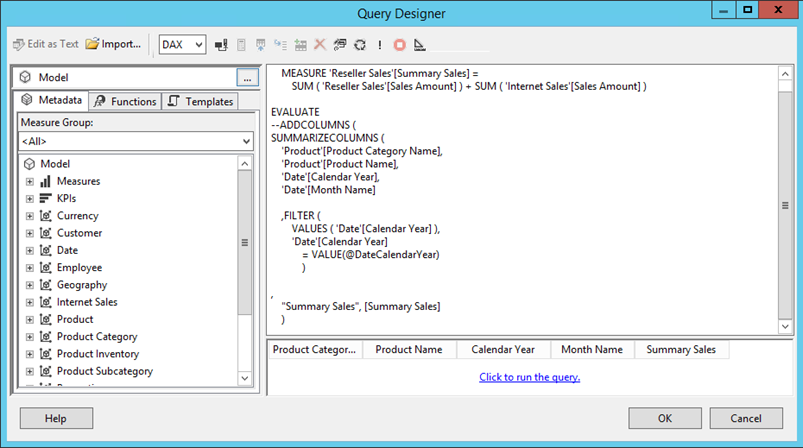

Similar to its MDX counterpart, in most cases you’d still need to know DAX to customize your queries. If you connect to Tabular version 2016 or above, you can drag and drop metadata to autogenerate the query. I think version 2016 or above is required because, when autogenerating queries, the designer relies heavily on the DAX SUMMARIZECOLUMNS function, which was introduced in 2016.
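To give a feel for the kind of query this produces, here is a minimal sketch of a SUMMARIZECOLUMNS query. It is not the designer's exact output, and the table, column, and measure names ('Date', 'Product', [Sales Amount]) are assumptions made up for illustration.

```dax
// Hypothetical model: the 'Date' and 'Product' tables and the
// [Sales Amount] measure are assumed names, not from a real model.
EVALUATE
SUMMARIZECOLUMNS (
    'Date'[Calendar Year],            // group-by column
    'Product'[Category],              // group-by column
    "Sales Amount", [Sales Amount]    // name/expression pair for the measure
)
ORDER BY 'Date'[Calendar Year], 'Product'[Category]
```

The function groups by the listed columns and evaluates each named expression per group, which maps naturally to dragging fields and measures onto the designer's data pane.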

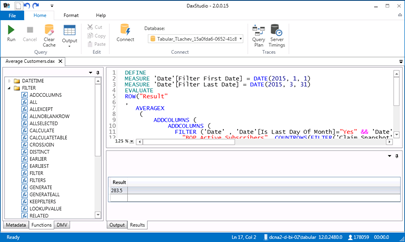

The Design Mode lets you examine the underlying DAX query and customize it as needed. Sorry, no color coding or syntax checking in SSRS, so you’d probably rely on DAX Studio, or on the new DAX Query Editor in SSMS, which has syntax checking and IntelliSense, to code and test your queries. The Calculated Member option is disabled, so you’re on your own defining custom DAX measures. No big surprises here, as it works exactly the same way as in MDX (of course, you need DAX formulas, such as VALUE to convert to a numeric value).
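As a rough illustration of a hand-customized query, the sketch below defines a query-scoped measure and converts a parameter with VALUE. It assumes a hypothetical @MinYear report parameter that arrives as text and a numeric 'Date'[Calendar Year] column; the 'Sales' table and [Sales Amount] measure are likewise made-up names.

```dax
// Hypothetical names throughout: 'Sales', 'Date', [Sales Amount], @MinYear.
DEFINE
    // Query-scoped measure, defined by hand since Calculated Member is disabled
    MEASURE 'Sales'[Average Sale] =
        DIVIDE ( [Sales Amount], COUNTROWS ( 'Sales' ) )
EVALUATE
SUMMARIZECOLUMNS (
    'Date'[Calendar Year],
    // Filter table: VALUE converts the text parameter to a number
    FILTER (
        VALUES ( 'Date'[Calendar Year] ),
        'Date'[Calendar Year] >= VALUE ( @MinYear )
    ),
    "Sales Amount", [Sales Amount],
    "Average Sale", [Average Sale]
)
ORDER BY 'Date'[Calendar Year]
```

Testing a query like this in DAX Studio or the SSMS DAX Query Editor first, with the parameter replaced by a literal, is probably the quickest way to catch syntax errors before pasting it into the report.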

It was about time for the Tabular Query Designer to appear and help us write DAX when designing traditional SSRS reports connected to Tabular. Watch out for a bug where auto-generated parameter datasets error out when hierarchies are used. I attach two reports. Report.rdl demonstrates an auto-generated DAX query. ReportEx.rdl demonstrates a customized DAX query.