Working with Large Tables in SQL Server

Warning: This blog contains old tricks of an old dog.

Scenario: Suppose you have a large table in SQL Server, e.g. hundreds of millions or even a billion rows. DML operations (SELECT, INSERT, UPDATE, DELETE) take long time. How do you speed them up? Do you split the large table into multiple tables? Or, do you ask for better hardware? Or, do you start looking for a new job with less data?

Solution: It’s nothing new but I see clients struggle with this all the time because they don’t know any better.

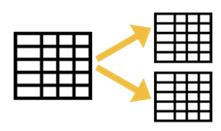

The solution is to partition the table and use partition switching that SQL Server has supported since time immemorial.

Cathrine Wilhelmsen has a great step-by-step blog covering different scenarios, but the process goes like this:

- Configure page compression for the large table (see benefits here).

- Partition the large table, such as by month.

- Create a not-partitioned staging table that has the same indexes and compression as the large table.

- Find the corresponding partition in the large table that will require DML, such as by using this script.

- If the data requires updates, switch out the affected partition to the staging table. Perform updates. For full loads where rows will be only inserted, you don’t have to switch out the partition (see the second scenario in Cathrine’s blog).

- Switch in the staging table into the corresponding partition of the large table. This should take a few seconds.

As a bonus, the SQL Server query processor could eliminate partitions for SELECTs, thus improving the query performance.