ADF Cross Environment Schema Compare Tool

Almost every BI project requires at least two environments, such as DEV and PROD. Unless I’ve missed something, one Azure Data Factory caveat, irrespective of the code promotion path (manual or automated with Azure DevOps or Git CI/CD), is that it relies on ARM template deployment, where the template contains all the artifacts. The equivalent for database schema deployment would be to script the entire database schema on every release, which makes me nervous. Not to mention that ARM templates have their own gotchas, such as the one I discussed here when shared self-hosted integration runtimes are used.

So, I went on a quest to find an ADF schema comparison tool as a quick and dirty way to identify ADF code discrepancies between two environments and promote changes manually. Ideally, the tool would be something like the schema comparison in SQL Server Database projects in Visual Studio.

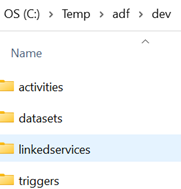

However, I wasn’t able to find such a schema comparison tool for ADF. The closest match was a PowerShell script by Riccardo Perico that extracts the ADF artifacts as files. My humble contribution was a few minor changes to the output folders, so that the artifacts from the ADF DEV service are saved in subfolders under a “dev” folder, while the production artifacts are saved under a “prod” folder. I made this change to take advantage of a WinMerge feature that can scan subfolders. I also added a line that deletes all files in the target folders before each run.
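The script's `Out-File` calls write into the `dev` and `prod` subfolders and appear to assume that these folders already exist. Here is a minimal sketch for creating that layout up front, assuming the same folder names the script uses. The function name `New-AdfOutputFolders` is hypothetical and not part of the original script:

```powershell
# Hypothetical helper (not in the original script):
# creates <base>\<dev|prod>\<artifact type> folders for the exported files.
function New-AdfOutputFolders {
    param(
        [Parameter(Mandatory)] [string] $BasePath
    )

    $environments  = 'dev', 'prod'
    $artifactTypes = 'activities', 'datasets', 'linkedservices', 'triggers'

    foreach ($environment in $environments) {
        foreach ($type in $artifactTypes) {
            $folder = Join-Path (Join-Path $BasePath $environment) $type
            # -Force keeps the call from failing when the folder already exists
            New-Item -ItemType Directory -Path $folder -Force | Out-Null
        }
    }
}

# Example call, assuming the base folder used in the script:
# New-AdfOutputFolders -BasePath 'C:\temp\adf'
```

Running this once before the export should be enough, since the cleanup step in the script deletes only files and leaves the folders in place.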

Here is the script with my changes:

# Install-Module -Name Az.DataFactory

# Credits: Riccardo Perico https://medium.com/riccardo-perico/comparing-azure-data-factories-exporting-all-the-components-929861ad2e00

Login-AzAccount

Get-AzSubscription -SubscriptionName 'MTX_Int_BI' | Set-AzContext -Name 'MyContextName'

Set-AzContext -Context $(Get-AzContext -Name "MyContextName")

$base_output_url = "C:\temp\adf\"

$pipeline_output = "activities"

$dataset_output = "datasets"

$linked_services_output = "linkedservices"

$triggers_output = "triggers"

$adfv2_rsg_name_tc1 = 'mtx-rsg-adf-dev'

$adfv2_rsg_name_tc2 = 'mtx-rsg-adf-prod'

$adfv2_name_tc1 = 'mtx-adf-dev'

$adfv2_name_tc2 = 'mtx-adf-prod'

#Delete all files

Get-ChildItem -Path $base_output_url -Include *.* -File -Recurse | foreach { $_.Delete()}

$js = (Get-AzDataFactoryV2Pipeline -ResourceGroupName $adfv2_rsg_name_tc1 -DataFactoryName $adfv2_name_tc1) | Select-Object "Name"

$js2 = (Get-AzDataFactoryV2Pipeline -ResourceGroupName $adfv2_rsg_name_tc2 -DataFactoryName $adfv2_name_tc2) | Select-Object "Name"

$js_dst = (Get-AzDataFactoryV2Dataset -ResourceGroupName $adfv2_rsg_name_tc1 -DataFactoryName $adfv2_name_tc1) | Select-Object "Name"

$js_dst2 = (Get-AzDataFactoryV2Dataset -ResourceGroupName $adfv2_rsg_name_tc2 -DataFactoryName $adfv2_name_tc2) | Select-Object "Name"

$js_lks = (Get-AzDataFactoryV2LinkedService -ResourceGroupName $adfv2_rsg_name_tc1 -DataFactoryName $adfv2_name_tc1) | Select-Object "Name"

$js_lks2 = (Get-AzDataFactoryV2LinkedService -ResourceGroupName $adfv2_rsg_name_tc2 -DataFactoryName $adfv2_name_tc2) | Select-Object "Name"

$js_trg = (Get-AzDataFactoryV2Trigger -ResourceGroupName $adfv2_rsg_name_tc1 -DataFactoryName $adfv2_name_tc1) | Select-Object "Name"

$js_trg2 = (Get-AzDataFactoryV2Trigger -ResourceGroupName $adfv2_rsg_name_tc2 -DataFactoryName $adfv2_name_tc2) | Select-Object "Name"

foreach ($pl in $js)

{

(Get-AzDataFactoryV2Pipeline -ResourceGroupName $adfv2_rsg_name_tc1 -DataFactoryName $adfv2_name_tc1 -Name $pl.Name).Activities | Sort-Object "Name" | Out-File $base_output_url"dev\"$pipeline_output"\$($pl.Name).txt"

}

foreach ($pl2 in $js2)

{

(Get-AzDataFactoryV2Pipeline -ResourceGroupName $adfv2_rsg_name_tc2 -DataFactoryName $adfv2_name_tc2 -Name $pl2.Name).Activities | Sort-Object "Name" | Out-File $base_output_url"prod\"$pipeline_output"\$($pl2.Name).txt"

}

foreach ($d in $js_dst)

{

(Get-AzDataFactoryV2Dataset -ResourceGroupName $adfv2_rsg_name_tc1 -DataFactoryName $adfv2_name_tc1 -Name $d.Name).Properties | Sort-Object "Name" | Out-File $base_output_url"dev\"$dataset_output"\$($d.Name).txt"

}

foreach ($d2 in $js_dst2)

{

(Get-AzDataFactoryV2Dataset -ResourceGroupName $adfv2_rsg_name_tc2 -DataFactoryName $adfv2_name_tc2 -Name $d2.Name).Properties | Sort-Object "Name" | Out-File $base_output_url"prod\"$dataset_output"\$($d2.Name).txt"

}

foreach ($l in $js_lks)

{

(Get-AzDataFactoryV2LinkedService -ResourceGroupName $adfv2_rsg_name_tc1 -DataFactoryName $adfv2_name_tc1 -Name $l.Name).Properties | Sort-Object "Name" | Out-File $base_output_url"dev\"$linked_services_output"\$($l.Name).txt"

}

foreach ($l2 in $js_lks2)

{

(Get-AzDataFactoryV2LinkedService -ResourceGroupName $adfv2_rsg_name_tc2 -DataFactoryName $adfv2_name_tc2 -Name $l2.Name).Properties | Sort-Object "Name" | Out-File $base_output_url"prod\"$linked_services_output"\$($l2.Name).txt"

}

foreach ($t in $js_trg)

{

(Get-AzDataFactoryV2Trigger -ResourceGroupName $adfv2_rsg_name_tc1 -DataFactoryName $adfv2_name_tc1 -Name $t.Name).Properties | Sort-Object "Name" | Out-File $base_output_url"dev\$triggers_output\$($t.Name).txt"

}

foreach ($t2 in $js_trg2)

{

(Get-AzDataFactoryV2Trigger -ResourceGroupName $adfv2_rsg_name_tc2 -DataFactoryName $adfv2_name_tc2 -Name $t2.Name).Properties | Sort-Object "Name" | Out-File $base_output_url"prod\$triggers_output\$($t2.Name).txt"

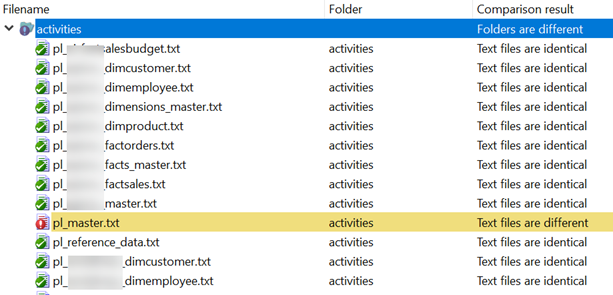

}Once the files are saved, the next step is to use WinMerge configured to scan subfolders to identify differences:

Then, you can manually copy the JSON payload of the changed artifact and replace its equivalent in the other environment. Long live ADF continuous integration and delivery!
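As a quick scripted cross-check before opening WinMerge, the two folder trees can also be compared by file hash. This is only a sketch under the assumption that the export produced matching `dev` and `prod` trees. The function names `Get-AdfExportHashes` and `Compare-AdfExport` are hypothetical. It reports which files differ, not what changed inside them, so it doesn't replace the visual diff:

```powershell
# Hypothetical helper: maps each file's relative path to its SHA256 hash.
function Get-AdfExportHashes {
    param([Parameter(Mandatory)] [string] $Root)

    $rootFull = (Get-Item -LiteralPath $Root).FullName
    $hashes = @{}
    Get-ChildItem -LiteralPath $rootFull -File -Recurse | ForEach-Object {
        # Strip the root so dev and prod files share the same key
        $relative = $_.FullName.Substring($rootFull.Length).TrimStart('\', '/')
        $hashes[$relative] = (Get-FileHash -LiteralPath $_.FullName -Algorithm SHA256).Hash
    }
    return $hashes
}

# Hypothetical helper: lists files that are missing on one side or differ.
function Compare-AdfExport {
    param(
        [Parameter(Mandatory)] [string] $DevPath,
        [Parameter(Mandatory)] [string] $ProdPath
    )

    $dev  = Get-AdfExportHashes -Root $DevPath
    $prod = Get-AdfExportHashes -Root $ProdPath
    $allFiles = @($dev.Keys) + @($prod.Keys) | Sort-Object -Unique

    foreach ($file in $allFiles) {
        $status = $null
        if (-not $prod.ContainsKey($file))    { $status = 'DevOnly' }
        elseif (-not $dev.ContainsKey($file)) { $status = 'ProdOnly' }
        elseif ($dev[$file] -ne $prod[$file]) { $status = 'Different' }

        # Identical files produce no output
        if ($status) { [pscustomobject]@{ File = $file; Status = $status } }
    }
}
```

With the folders from the script, the call would look like `Compare-AdfExport -DevPath 'C:\temp\adf\dev' -ProdPath 'C:\temp\adf\prod'`, and each row in the result names an artifact worth inspecting in WinMerge.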