Why Is SQL Server Not Using My Index?

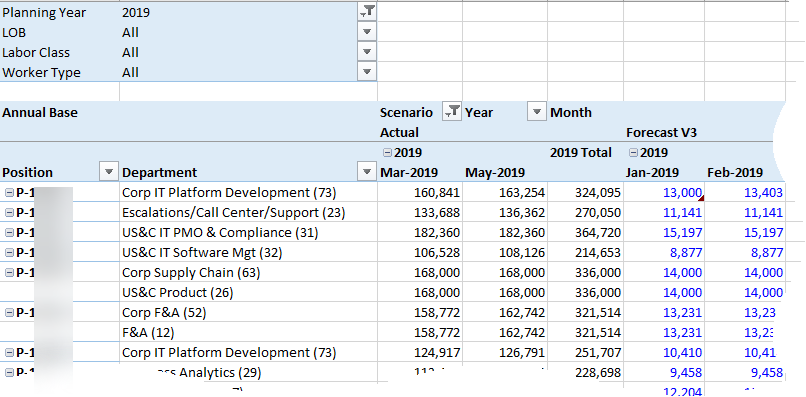

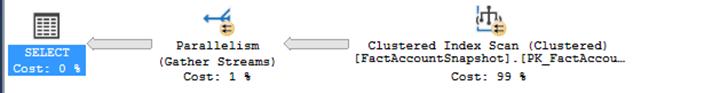

Scenario: A partitioned Tabular table generates SELECT statements that query a massive range from the base table on a date column. The processing job is taking a long time. The SELECT query plan shows a Clustered Index Scan, even though there is an index on the date column.

Why is SQL Server not using the index? Surely, it would speed things up, right? This is where the blame game starts…

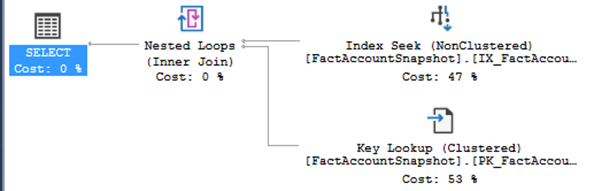

Analysis: SQL Server might ignore the index if the range is too wide. For example, these two queries will probably hit the index on the LastUpdated column in a 300-million-row table because the range is very narrow.

select * from FactAccountSnapshot where LastUpdated = '1/1/2019'

select * from FactAccountSnapshot where LastUpdated between '1/1/2019' and '1/2/2019'

This query will probably not:

select * from FactAccountSnapshot where LastUpdated between '1/1/2019' and '1/31/2019'

Why? Because the server might determine that it is much faster to scan the entire table than to seek the index and then do a lookup into the table for every matching row. To test this out, you can force the query to use the index:

select * from FactAccountSnapshot with (index(IX_FactAccountSnapshot_LastUpdated)) where LastUpdated between '1/1/2019' and '1/31/2019'

Only to find out that it takes about four times longer to fetch the data (in this example, 1:37 minutes versus 24 seconds with the table scan).
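If you want to repeat the comparison on your own data, one option is to turn on I/O and timing statistics and run both variants back to back. This is only a sketch that reuses the table and index names from the queries above. The date literals are written in the language-neutral 'yyyymmdd' form, which is my substitution, and the numbers you get will depend on your data, hardware, and cache state, so run each variant more than once.

```sql
-- Report logical reads and CPU/elapsed time for each statement
-- (shown on the Messages tab in SSMS).
SET STATISTICS IO ON;
SET STATISTICS TIME ON;

-- Variant 1: let the optimizer choose the plan.
-- In the scenario described here it picked a Clustered Index Scan.
SELECT *
FROM FactAccountSnapshot
WHERE LastUpdated BETWEEN '20190101' AND '20190131';

-- Variant 2: force the nonclustered index with a table hint.
-- Because of SELECT *, every matching row needs a lookup into the table.
SELECT *
FROM FactAccountSnapshot WITH (INDEX(IX_FactAccountSnapshot_LastUpdated))
WHERE LastUpdated BETWEEN '20190101' AND '20190131';

SET STATISTICS IO OFF;
SET STATISTICS TIME OFF;
```

Comparing the logical reads of the two variants usually explains the elapsed-time difference better than the timings alone.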

Conclusion: SQL Server is smarter than you might think. Indexes may benefit queries with narrow ranges. The wider the range, the less likely it is to result in an index hit. And this is a good thing. BTW, in this particular case, the performance issue was not related to SQL Server at all.

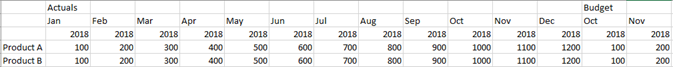

Tip: Assuming good hardware and proper conditions (good query throughput with no massive joins in views, good network throughput, a narrow fact table, etc.), Analysis Services should be able to process 100,000 rows/sec on a single thread. So, a 300-million-row table should process in less than an hour. Even better, if you partition it, each partition will process in parallel, so with five partitions (five parallel processing threads), you should aim at around 15-20 minutes to fully process this table. Better still, process only the partitions with data changes to bring the processing window down further.
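The arithmetic behind these estimates is easy to check. The sketch below uses only the figures from the tip and assumes five evenly sized partitions that scale perfectly, so the parallel figure is a theoretical lower bound. The 15-20 minute target above presumably leaves headroom for uneven partitions and shared resources.

```sql
-- Back-of-the-envelope processing estimate using the figures from the tip.
DECLARE @TotalRows     bigint = 300000000; -- rows in the fact table
DECLARE @RowsPerSecond int    = 100000;    -- per-thread throughput (rule of thumb)
DECLARE @Partitions    int    = 5;         -- assumption: evenly sized partitions

SELECT
    @TotalRows / @RowsPerSecond / 60.0               AS SingleThreadMinutes,  -- 3,000 s = 50 min
    @TotalRows / @RowsPerSecond / 60.0 / @Partitions AS IdealParallelMinutes; -- 600 s = 10 min
```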

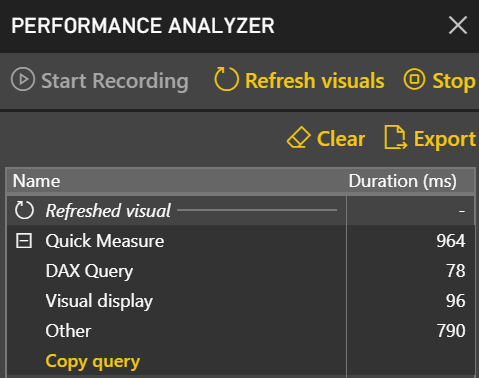

After learning how to model the data properly (the most important skill), DAX would be the next hurdle in your self-service or organizational BI journey. You won’t get far in Microsoft BI without DAX. This letter shares a tip on how to identify DAX performance bottlenecks by using a recent feature in Power BI called Performance Analyzer.

Before I get to Performance Analyzer, I’m excited to announce my latest book: