BISM Tabular Dynamic Data Security Over Bridge Table

About four years ago, in my article Protect UDM with Dimension Data Security, I introduced an approach to secure dimension data in multidimensional cubes by using a factless bridge table. Since then, many BI practitioners have favored this approach because of its simplicity, reusability (transactional reports can join to the security table), and performance. Now that organizational tabular projects support data security, I wanted to try it with BISM Tabular. As it turned out, the factless bridge table approach worked out just fine.

Suppose that you’ve built a tabular project on top of the Adventure Works DW database, and the SecurityResellerFilter table is the bridge table that stores the resellers that an employee is authorized to view. As shown in the diagram, SecurityResellerFilter has two relationships: SecurityResellerFilter[EmployeeKey] to Employee[EmployeeKey] and SecurityResellerFilter[ResellerKey] to Reseller[ResellerKey]. You’d probably want to hide the SecurityResellerFilter table so that end users can’t browse it.

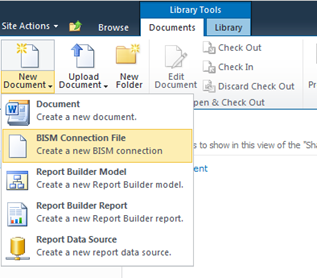

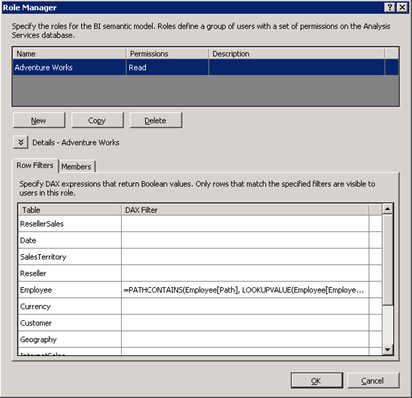

Given this model, implementing dynamic data security over SecurityResellerFilter requires setting up a new role (click the Roles button in the Analysis Services toolbar) that uses a row filter.

A row filter defines a filter expression that determines which rows the role is allowed to see. To set up a row filter in the Role Manager, enter a DAX expression next to the table name. The DAX expression must be a Boolean expression that returns TRUE or FALSE. The Adventure Works role uses the following DAX expression for the row filter on the Reseller table:

=CONTAINS(RELATEDTABLE(SecurityResellerFilter), SecurityResellerFilter[EmployeeKey], LOOKUPVALUE(Employee[EmployeeKey], Employee[LoginID], USERNAME()))

The LOOKUPVALUE function obtains the employee key associated with the employee’s Windows login, which we get from the USERNAME function. Because the row filter is set on the Reseller table, for each reseller the CONTAINS function attempts to find a match for the combination of the reseller key and the employee key. Notice the use of the RELATEDTABLE function to pass the current reseller: it returns only the SecurityResellerFilter rows related to the reseller being evaluated. The net effect is that the CONTAINS function returns TRUE if there is a row in the SecurityResellerFilter table that matches the ResellerKey and EmployeeKey combination.
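To make the matching logic more explicit, the same check can be sketched with FILTER and COUNTROWS instead of CONTAINS. This is an illustrative alternative, not the expression the role actually uses. It assumes the same table and column names as above, with the row filter still defined on the Reseller table:

```dax
=COUNTROWS(
    FILTER(
        RELATEDTABLE(SecurityResellerFilter),
        SecurityResellerFilter[EmployeeKey]
            = LOOKUPVALUE(Employee[EmployeeKey], Employee[LoginID], USERNAME())
    )
) > 0
```

RELATEDTABLE supplies the bridge rows for the reseller being evaluated, and FILTER keeps only the rows that belong to the interactive user. COUNTROWS returns blank when no bridge row survives, and the comparison with zero then evaluates to FALSE, so the reseller is hidden.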

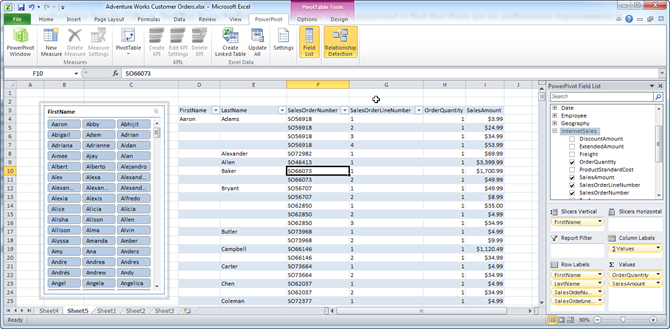

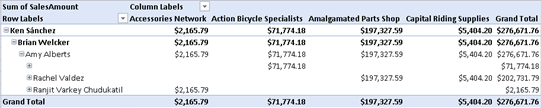

You can use the Analyze in Excel feature (click the Analyze in Excel button in the Analysis Services toolbar) to test the security changes. To verify that the user has access only to the resellers the user is authorized to see, add the ResellerName field from the Reseller table to the Column Labels zone.

The report should show columns only for the resellers that are associated with the interactive user in the SecurityResellerFilter table.
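If you prefer to check the role outside Excel, a DAX query is another option. The sketch below assumes that you connect to the deployed model from SQL Server Management Studio as a server administrator and add something like Roles=Adventure Works;EffectiveUserName=<domain\login> to the additional connection parameters. The role name is taken from the example above, and the login is a placeholder you would replace with a real account. Run the two statements one at a time:

```dax
-- Show the identity that the row filter will see
EVALUATE
ROW("Current User", USERNAME())

-- List the resellers visible under the role
EVALUATE
VALUES(Reseller[ResellerName])
ORDER BY Reseller[ResellerName]
```

The first statement should return the impersonated login, and the second should list only the resellers that the SecurityResellerFilter table associates with that login.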