SQL Server 2008 R2 November CTP (CTP3)

Microsoft announced release on SQL Server 2008 R2 November CTP (CTP3) with a build number of #1352.12. MSDN, TechNet Subscribers and TAP customers can download the SQL Server 2008 R2 November CTP today. Non-subscribers will be able to access the CTP on Nov. 11th. This should be a feature-complete build that includes all SQL Server R2 functionality.

Reporting Services

The new Reporting Services features include:

SharePoint Integration

SharePoint Integration has several new features that include the following:

- Support for multiple SharePoint Zones.

- Support for the SharePoint Universal Logging service.

- A new data extension.

- A query designer for SharePoint Lists as a data source.

- Support for right-to-left text with Arabic and Hebrew in the SharePoint user interface.

- In a report, the data extension supports include data from SharePoint lists for SharePoint Foundation 2010, SharePoint Server 2010, Windows SharePoint Services 3.0, and Office SharePoint Server 2007.

- Report Parts.

Shared Datasets and Cache Refresh Plans

Shared datasets are a new type of report server item that can retrieve data from shared data sources that connect to external data sources.

Cache refresh plans let you cache reports or shared dataset query results on first use or from a schedule.

New Data Visualization Report Items

The November CTP introduces several new report items that depict data:

- Sparklines and data bars are simple charts that convey a lot of information in a little space. These are often used in tables and matrices. Their impact comes from viewing many of them together and being able to quickly compare them, instead of viewing them singly.

- Indicators are minimal gauges that convey the state of a single data values at glance.

Enhancements to Reporting Services Features

The November CTP provides the following enhancements to Reporting Services features that were released in earlier SQL Server 2008 R2 CTPs or SQL Server 2008:

- Configuring a Map Layer—The Map wizard displays data from both the spatial data source and the analytical data source to help you choose the field on which to match.

- Calculating Aggregates of Aggregates—You can create expressions that calculate an aggregate of an aggregate.

- Enhanced Support for Expressions—New globals and a new property for report variables provide support for overall page numbering, naming pages, and information that is specified in the renderer.

- Rotating Text 270 Degrees—Text boxes can now be rotated 270 degrees.

- Report Pagination—Page breaks on tablix data regions (table, matrix, and list), groups, and rectangles give you better control of report pagination.

- Naming Excel worksheet tabs—You can set properties on the report to name worksheet tab when you export the report to Excel.

Business Intelligence Development Studio Support for

SQL Server 2008 Reports and Report Server projects

Business Intelligence Development Studio supports working with both SQL Server 2008 and SQL Server 2008 R2 reports, and with Report Server projects in the SQL Server 2008 R2 version of Business Intelligence Development Studio.

New Web Service Endpoint

The report server includes a new management endpoint named ReportService2010.ReportingService2010. This endpoint merges the functionalities of both the ReportService2005.ReportingService2005 and ReportService2006.ReportingService2006 endpoints, and can support management operations of the report server in both native mode and SharePoint integrated mode. It also includes new features that are introduced in SQL Server 2008 R2, such as shared datasets and cache refresh.

Analysis Services

The Analysis Services new features are centered around Gemini and include:

PowerPivot for Excel

PowerPivot Field List Enhancements

Numerous enhancements have been added to the PowerPivot field list. These include the following:

- Automatic detection of relationships when columns from unrelated tables are used in a PivotTable.

- Support for searching fields by name.

- Support for named sets.

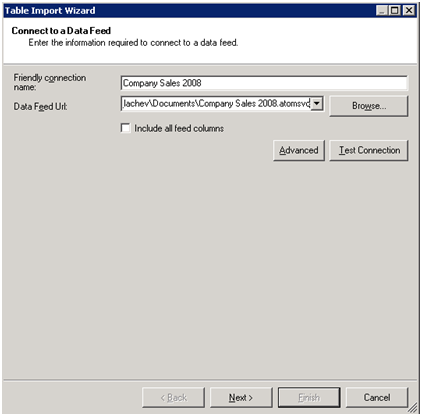

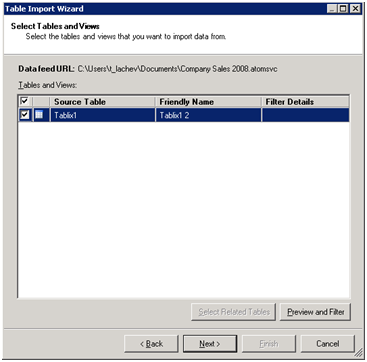

PowerPivot Data Source Editing

Support is available for the following data source editing tasks:

- Refreshing data for table, for all tables from a data source, or for all the tables in the PowerPivot window.

- Editing data source information such as server name, database name, and credentials.

- Editing table settings such as data source table or query used, columns imported, and filters.

Support for More Types of Data Sources

Targeted support has been introduced for importing data from the following sources:

- Microsoft Access 2003, 2007, 2010

- Microsoft SQL Server 2005, 2008, 2008 R2 Analysis Services

- PowerPivot workbooks published to Analysis Services running in SharePoint Integrated Mode

- Text files

- Excel 97-2003, 2007, 2010

- Microsoft SQL Azure

- Oracle 9i, 10g, 11g

- Teradata V2R6, V12

- Sybase

- Informix

- IBM DB2 relational databases 8.1

Enhanced Data Analysis eXpressions (DAX)

DAX functionality has been significantly expanded in the following ways:

- User interface enhancements include better propagation of errors and easier creation of DAX formulas.

- Several functions have been added, including a set of functions that operate on textual data, as well as those that provide common Date and Time manipulations.

- Several performance and functional capabilities have been introduced, including automatic recalculation.

- Support for handling referential integrity violations between related tables has been introduced.

- Automatic selection of data types for expressions is available.

- For more information, see the topic “Data Analysis Expression Language Reference” in SQL Server 2008 R2 Books Online.

DAX is also documented in the online Help of the PowerPivot for Excel add-in. For more information, see the Help file that is installed with the add-in.

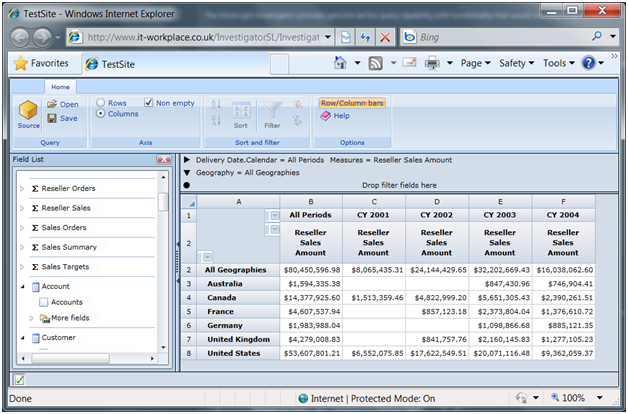

PowerPivot for SharePoint

Management Dashboard

A management dashboard for IT has been introduced. This dashboard provides visibility into PowerPivot use on a SharePoint farm that includes information about the following areas, as well as a rich PowerPivot workbook that can be used to build custom reports:

- Published PowerPivot workbooks, including number of queries executed, number of users and size per workbook in the dashboard.

- Hardware resource utilization for the PowerPivot service, including CPU and memory, is reported on a daily basis to the dashboard.

- Data refresh activity is reported for all PowerPoint workbooks that are accessed through the same PowerPivot service application.

For more information about this feature, see the topic “PowerPivot Management Dashboard” in SQL Server 2008 R2 Books Online.

Enhanced Infrastructure

The following enhancements to infrastructure are available:

- Claims-aware support to enable a user’s identity to flow between SharePoint components in a secure manner.

- Parallelized refresh of PowerPivot data.

- Performance optimizations.

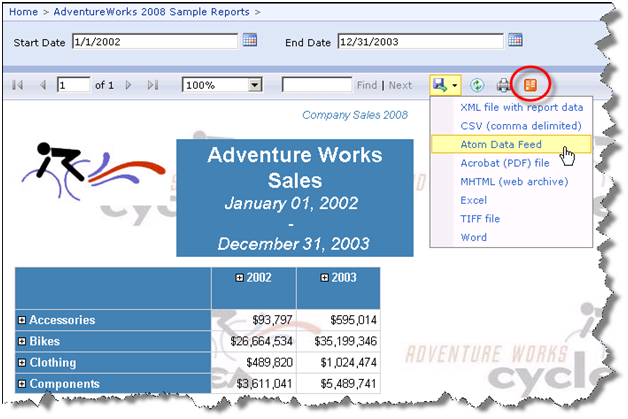

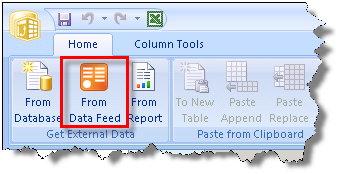

on the web page demonstrates.

on the web page demonstrates.